Tag: Atari

Atari VCS Wireless Joystick as mouse

I bought a friend an Atari VCS Wireless Joystick as a Christmas gift, thinking (incorrectly) that it would work with an Atari 2600+. My only defense is that I didn’t realize the VCS is different, the joystick looks the same, and I’m stupid for not reading the fine print. I subsequently bought my friend the correct one, a CX40+.

I am intrigued to see whether I can now repurpose the joystick as an alternative mouse for a Linux machine. So far, this idea shows promise…

Tag: Rust

Bare Metal: Pico and CYW43

The “W” signifies that the board includes wireless. Interestingly, the wireless chip is an Infineon CYW43439, which is itself a microcontroller with its own ARM Cortex (M3) core. The Pico’s USB device includes another ARM microcontroller. So, with the dual Cortex (or Hazard3 RISC-V) cores that are user programmable, and the 8 PIOs, these devices really pack a punch.

As a result of adding the wireless (microcontroller) chip to the Pico, the Pico W’s on-board LED is accessible only through the CYW43439. Yeah, weird, but it makes for an interesting solution.

Bare Metal: WS2812

This one works!

Virtual WS2812s

I’d gone (cough) many years without ever hearing of 1-Wire and, suddenly, it’s everywhere.

Addressable LEDs are hugely popular in tinkerer circles. They come in myriad forms (wheels, matrices) but are commonly sold as long strips. The part number is WS2812, and they, too, are driven over a single data wire (although their timing-based protocol is not Dallas/Maxim 1-Wire). Each LED (often multi-color RGB, and often known as a pixel) is combined with an IC that enables the “addressable” behavior.
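To make the “addressable” part concrete, here is a Python sketch of the logical data a strip consumes. It rests on assumptions that the post does not state. WS2812-family LEDs expect 24 bits per pixel in green-red-blue (GRB) order, most significant bit first. Each LED keeps the first 24 bits it receives and passes the rest down the strip. Turning each bit into precisely timed high/low pulses (e.g. with a PIO state machine) is left out.

```python
"""Sketch: the logical bit stream for a WS2812 strip (timing not included)."""


def pixel_to_grb_bytes(red: int, green: int, blue: int) -> bytes:
    """Reorder an RGB triple into the GRB byte order WS2812 LEDs expect."""
    for channel in (red, green, blue):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must be in 0..255")
    return bytes((green, red, blue))


def bytes_to_bits(data: bytes) -> list[int]:
    """Expand bytes into bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def encode_strip(pixels: list[tuple[int, int, int]]) -> list[int]:
    """Concatenate 24 bits per pixel; LED n consumes the n-th 24-bit group.

    The first LED latches the first 24 bits and forwards the remainder,
    which is what makes each LED individually addressable.
    """
    bits: list[int] = []
    for red, green, blue in pixels:
        bits.extend(bytes_to_bits(pixel_to_grb_bytes(red, green, blue)))
    return bits


if __name__ == "__main__":
    stream = encode_strip([(255, 0, 0), (0, 0, 255)])  # red, then blue
    print(len(stream))  # 48: two pixels of 24 bits each
```

After the last pixel, holding the data line low for the reset period latches the colors. The required length of that period varies between WS2812 variants.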

Bare Metal: DS18B20

I’ve been working through Google’s Comprehensive Rust and, for the past couple of weeks, its Bare Metal Rust standalone course, which uses the (excellent) micro:bit v2 with a Nordic Semiconductor nRF52833 (an ARM Cortex-M4; interestingly, its USB interface is also implemented using an ARM Cortex-M0).

There’s a wealth of Rust tutorials for microcontrollers: I bought an ESP32-C3-DevKit-RUST-1 for another tutorial, and I spent some time with my favorite Pi Pico and a newly acquired Debug Probe.

Gemini Code Assist 'agent' mode without `npx mcp-remote` (2/3)

Solved!

Ugh.

Before I continue, here is one important detail from yesterday’s experience that I don’t think I clarified. Unlike the Copilot agent, it appears (!?) that the Gemini agent only supports integration with MCP servers via stdio. As a result, the only way to integrate with HTTP-based MCP servers (local or remote) is to proxy traffic through stdio, as mcp-remote and the Rust example herein do.

The most helpful change was to take a hint from the NPM mcp-remote package and create a log file. This helps because the mcp-remote process is launched by Visual Studio Code (well, by the Gemini Code Assist agent), and so it isn’t trivial to debug otherwise.
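The proxying idea, including the log file, can be sketched in Python. The post’s own proxy is Rust, so this is not it. The sketch assumes the host speaks newline-delimited JSON-RPC on stdin/stdout (MCP’s stdio transport). It also assumes the server accepts each message as an HTTP POST and replies with a plain JSON body. Server-sent-event replies and the Mcp-Session-Id header used by MCP’s Streamable HTTP transport are not handled. `ENDPOINT` and `LOG_FILE` are hypothetical names.

```python
"""Sketch of a stdio-to-HTTP MCP proxy in the spirit of mcp-remote."""
import json
import logging
import os
import sys
import urllib.request
from typing import Callable, Optional

# Hypothetical settings for this sketch (not mcp-remote's or the post's)
ENDPOINT = os.getenv("ENDPOINT", "http://localhost:8080/mcp")
LOG_FILE = os.getenv("LOG_FILE", "mcp-proxy.log")


def http_post(body: bytes) -> bytes:
    """POST one JSON-RPC message to the remote MCP server; return the raw reply."""
    request = urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.read()


def forward(line: str, post: Callable[[bytes], bytes] = http_post) -> Optional[str]:
    """Relay one line from the host; return the line to write back, if any."""
    message = json.loads(line)
    logging.debug("host -> server: %s", message)
    reply = post(json.dumps(message).encode("utf-8"))
    if not reply:
        # e.g. a notification acknowledged with an empty body
        return None
    logging.debug("server -> host: %s", reply)
    # Re-serialize so the reply is guaranteed to occupy a single line
    return json.dumps(json.loads(reply))


def main() -> None:
    # Log to a file: stdout belongs to the JSON-RPC stream, and the editor
    # launches this process, so a file is the easiest place to look.
    logging.basicConfig(filename=LOG_FILE, level=logging.DEBUG)
    for line in sys.stdin:
        if not line.strip():
            continue
        try:
            out = forward(line)
        except Exception:  # keep the proxy alive; the log records the failure
            logging.exception("failed to forward %r", line)
            continue
        if out is not None:
            sys.stdout.write(out + "\n")
            sys.stdout.flush()
```

To use it as the command the host launches, call `main()` from the script’s entry point. Passing `post` explicitly to `forward` is what makes the relay testable without a server.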

Gemini Code Assist 'agent' mode without `npx mcp-remote` (1/3)

Former Microsoftie and Googler:

Good documentation: Extend your agent with Model Context Protocol

Not such good documentation: Using agentic chat as a pair programmer

Definition of “good” being: I was able to follow the clear instructions and it worked the first time. Well done, Microsoft!

This space is moving so quickly, and I’m happy to alpha test these companies’ solutions, but (a) Google’s portfolio is a mess. This week I’ve tried (and failed) to use Gemini CLI (because I don’t want to run Node.js on my host machine and it doesn’t work in a container: issue #1437) and now this.

gRPC Firestore `Listen` in Rust

I’m obsessing somewhat over gRPC Firestore Listen, but it’s also a good learning opportunity for me to write stuff in Rust. This doesn’t work against Google’s public endpoint (possibly for the same reason that gRPCurl doesn’t work either), but it does work against the Go server described in the other post.

I’m also documenting this here because I always encounter challenges using TLS with Rust (this documents two working ways to do it with gRPC), and because it references two interesting Rust examples that use Google services.

gRPC-Web w/ FauxRPC and Rust

After recently discovering FauxRPC, I was sufficiently impressed that I decided to use it to test Ackal’s gRPC services using Rust.

FauxRPC provides multi-protocol support and so, after successfully implementing the faux gRPC client tests, I was compelled to try gRPC-Web too. There was no immediate benefit other than that it’s there, it’s free and it’s interesting. As an aside, the faux REST client tests worked without issue using Reqwest.

Unfortunately, my optimism hit a wall with gRPC-Web and what follows is a summary of my unresolved issue.
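For context on where gRPC-Web differs from gRPC, here is a Python sketch of its message framing. It is based on the gRPC-Web protocol description, not on the post. Every frame is a 1-byte flag, a 4-byte big-endian length, then the payload. A flag of 0x00 marks an (uncompressed) message, and a flag with the 0x80 bit set marks a trailers frame. The trailers frame’s payload is HTTP/1-style `name: value\r\n` lines. The base64 “-text” variant is not covered.

```python
"""Sketch of gRPC-Web framing: length-prefixed messages plus a trailers frame."""
import struct

MESSAGE_FLAG = 0x00
TRAILERS_FLAG = 0x80


def frame(payload: bytes, flag: int = MESSAGE_FLAG) -> bytes:
    """Prefix a payload with its flag byte and big-endian 32-bit length."""
    return struct.pack(">BI", flag, len(payload)) + payload


def unframe(body: bytes) -> tuple[list[bytes], dict[str, str]]:
    """Split a gRPC-Web response body into message payloads and trailers."""
    messages: list[bytes] = []
    trailers: dict[str, str] = {}
    offset = 0
    while offset < len(body):
        flag, length = struct.unpack_from(">BI", body, offset)
        offset += 5  # 1 flag byte + 4 length bytes
        payload = body[offset:offset + length]
        if len(payload) != length:
            raise ValueError("truncated gRPC-Web frame")
        offset += length
        if flag & TRAILERS_FLAG:
            for line in payload.decode("ascii").split("\r\n"):
                if line:
                    name, _, value = line.partition(":")
                    trailers[name.strip().lower()] = value.strip()
        else:
            messages.append(payload)  # a serialized protobuf message
    return messages, trailers
```

When a gRPC-Web call fails silently, decoding the raw response with something like `unframe` helps. It shows whether the server sent any message frames at all, and what `grpc-status` the trailers carry.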

FauxRPC using gRPCurl, Golang and rust

I read FauxRPC + Testcontainers on Hacker News and was intrigued. I spent a little more time “evaluating” this than I’d planned because I’m forcing myself to use Rust as much as possible, and my ignorance (see below) caused me some challenges.

The technology is interesting and works well. The experience helped me explore Testcontainers too, which I’d heard about but not explored until this week.

For my future self:

| What | Description |
|---|---|
| FauxRPC | A general-purpose tool (built using Buf’s Connect) that includes registry and stub (gRPC) services. These can be configured programmatically, using a Protobuf descriptor and stubs (example method responses), to help test gRPC implementations. |
| Testcontainers | Write code (e.g. Rust) to create and interact with (test) services running in [Docker] containers. |
| Connect | (More than a) gRPC implementation, used by FauxRPC. |
| gRPCurl | A command-line gRPC tool. |

I started following along with FauxRPC’s Testcontainers example but, being unfamiliar with Connect, I didn’t know its Eliza service. The service is available on demo.connectrpc.com:443 and is described by eliza.proto as part of examples-go. Had I realized this sooner, I would have used this example rather than the Health Checking protocol.

XML-RPC in Rust and Python

A lazy Sunday afternoon, and my interest was piqued by XML-RPC.

Client

A very basic XML-RPC client wrapped in a Cloud Functions function:

main.py:

```python
import os
import xmlrpc.client

import functions_framework

# ENDPOINT is the URL of the XML-RPC server (os.getenv, not os.get_env)
endpoint = os.getenv("ENDPOINT")

proxy = xmlrpc.client.ServerProxy(endpoint)


@functions_framework.http
def add(request):
    print(request)

    rqst = request.get_json(silent=True)

    # Forward both complex numbers, as one struct, to the server's add method
    resp = proxy.add(
        {
            "x": {
                "real": rqst["x"]["real"],
                "imag": rqst["x"]["imag"],
            },
            "y": {
                "real": rqst["y"]["real"],
                "imag": rqst["y"]["imag"],
            },
        }
    )

    return resp
```

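requirements.txt:

```text
functions-framework==3.*
```

Run it:

```bash
python3 -m venv venv
source venv/bin/activate
python3 -m pip install --requirement requirements.txt

export ENDPOINT="..."

# main.py only defines the function; the Functions Framework serves it
functions-framework --target=add
```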

Server

I’m forcing myself to go Rust first, and there’s an (old) xml-rpc crate.
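For comparison, and to have something for the client to talk to, here is a Python stand-in for the server built on the standard library’s `xmlrpc.server`. It is not the post’s Rust server, whose crate API isn’t shown here. The sketch assumes that `add` receives a single struct of the shape the client sends, and that it returns the sum as `{"real", "imag"}`. The port (8000) is arbitrary.

```python
"""Python stand-in for the XML-RPC server (the post's own server is Rust)."""
from xmlrpc.server import SimpleXMLRPCServer


def add(args: dict) -> dict:
    """Add two complex numbers sent as {"x": {...}, "y": {...}} structs."""
    x, y = args["x"], args["y"]
    return {
        "real": x["real"] + y["real"],
        "imag": x["imag"] + y["imag"],
    }


def serve(host: str = "0.0.0.0", port: int = 8000) -> None:
    """Expose add over XML-RPC until interrupted."""
    with SimpleXMLRPCServer((host, port), allow_none=True) as server:
        server.register_function(add, "add")
        server.serve_forever()
```

Calling `serve()` starts the server. With `ENDPOINT="http://localhost:8000"`, the client above should then be able to reach it.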

Using Rust to generate Kubernetes CRD

For the first time, I chose Rust to solve a problem. Until now, I’ve been using Rust to learn the language and to rewrite existing code. But this problem led me to Rust because my other tools wouldn’t cut it.

The question was how to represent oneof fields in Kubernetes Custom Resource Definitions (CRDs).

CRDs use OpenAPI schema, and the resulting YAML can be challenging to grok:

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: deploymentconfigs.example.com
spec:
  group: example.com
  names:
    categories: []
    kind: DeploymentConfig
    plural: deploymentconfigs
    shortNames: []
    singular: deploymentconfig
  scope: Namespaced
  versions:
  - additionalPrinterColumns: []
    name: v1alpha1
    schema:
      openAPIV3Schema:
        description: An example schema
        properties:
          spec:
            properties:
              deployment_strategy:
                oneOf:
                - required:
                  - rolling_update
                - required:
                  - recreate
                properties:
                  recreate:
                    properties:
                      something:
                        format: uint16
                        minimum: 0.0
                        type: integer
                    required:
                    - something
                    type: object
                  rolling_update:
                    properties:
                      max_surge:
                        format: uint16
                        minimum: 0.0
                        type: integer
                      max_unavailable:
                        format: uint16
                        minimum: 0.0
                        type: integer
                    required:
                    - max_surge
                    - max_unavailable
                    type: object
                type: object
            required:
            - deployment_strategy
            type: object
        required:
        - spec
        title: DeploymentConfig
        type: object
    served: true
    storage: true
    subresources: {}
```
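The key part is the `oneOf` under `deployment_strategy`. The Python sketch below mimics its rule; it is not the Kubernetes API server’s validator. A value passes `oneOf` only if it satisfies exactly one subschema, and here each subschema is only a `required` list copied from the CRD above.

```python
"""Sketch of the oneOf rule applied to deployment_strategy in the CRD above."""

# The two subschemas from the CRD: each is just a "required" list
ONE_OF = [["rolling_update"], ["recreate"]]


def satisfies(value: dict, required: list[str]) -> bool:
    """A {required: [...]} subschema matches when every listed key is present."""
    return all(key in value for key in required)


def valid_one_of(value: dict, subschemas: list[list[str]] = ONE_OF) -> bool:
    """True only when exactly one subschema matches (oneOf semantics)."""
    return sum(satisfies(value, required) for required in subschemas) == 1
```

Setting `rolling_update` or `recreate` alone passes. Setting both, or neither, fails, which is the “one of” behavior the YAML encodes.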

I’ve developed several Kubernetes Operators using the Operator SDK in Go (which builds upon Kubebuilder).

Google Cloud Translation w/ gRPC 3 ways

General

You’ll need a Google Cloud project with Cloud Translation (translate.googleapis.com) enabled and a Service Account (and key) with suitable permissions in order to run the following.

```bash
BILLING="..." # Your Billing ID (gcloud billing accounts list)
PROJECT="..." # Your Project ID

ACCOUNT="tester"
EMAIL="${ACCOUNT}@${PROJECT}.iam.gserviceaccount.com"

ROLES=(
  "roles/cloudtranslate.user"
  "roles/serviceusage.serviceUsageConsumer"
)

# Create Project
gcloud projects create ${PROJECT}

# Associate Project with your Billing Account
gcloud billing projects link ${PROJECT} \
--billing-account=${BILLING}

# Enable Cloud Translation
gcloud services enable translate.googleapis.com \
--project=${PROJECT}

# Create Service Account
gcloud iam service-accounts create ${ACCOUNT} \
--project=${PROJECT}

# Create Service Account Key
gcloud iam service-accounts keys create ${PWD}/${ACCOUNT}.json \
--iam-account=${EMAIL} \
--project=${PROJECT}

# Update Project IAM permissions
for ROLE in "${ROLES[@]}"
do
  gcloud projects add-iam-policy-binding ${PROJECT} \
  --member=serviceAccount:${EMAIL} \
  --role=${ROLE}
done
```

For the code, you’ll need to install protoc and, preferably, have it in your path.

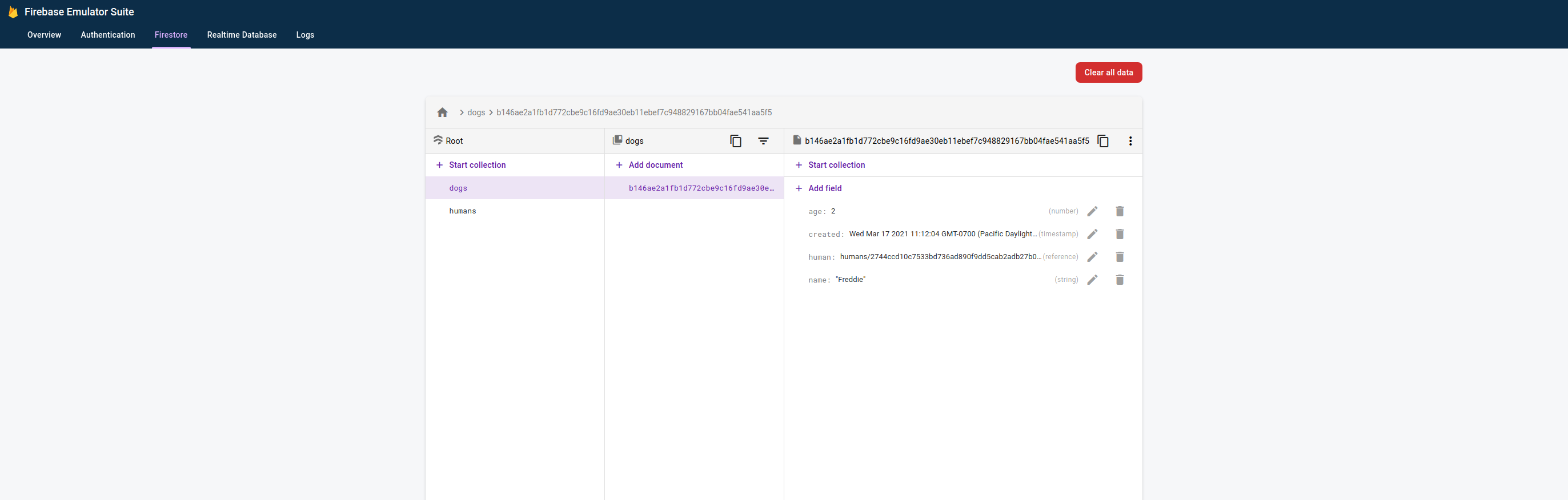

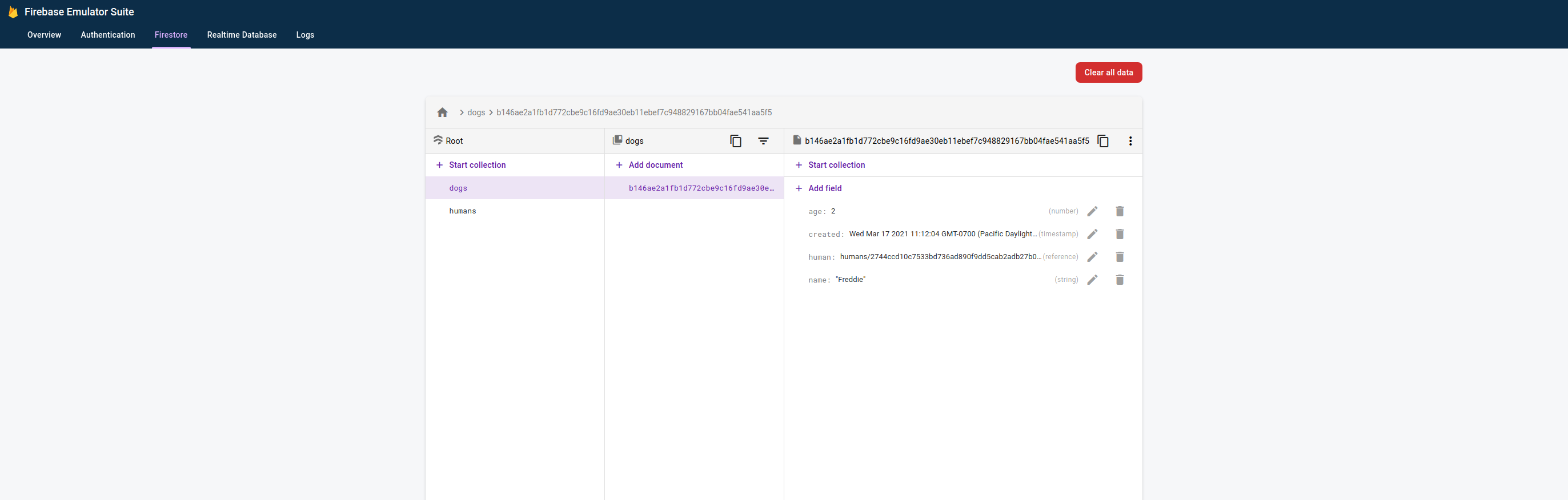

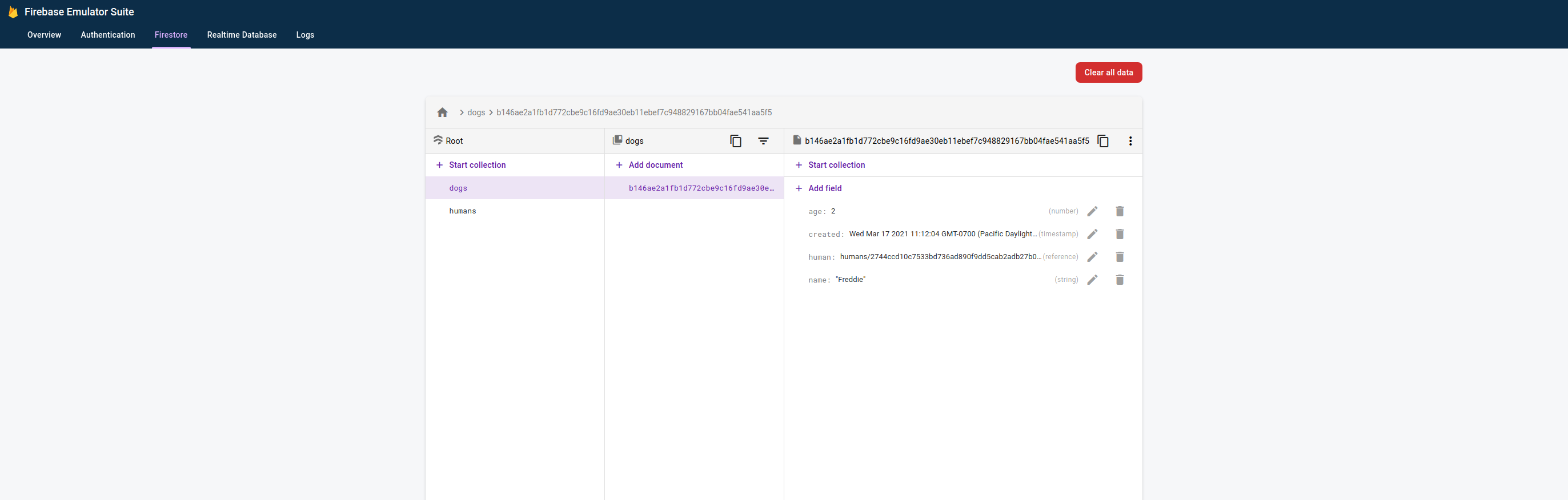

Google Cloud Events protobufs and SDKs

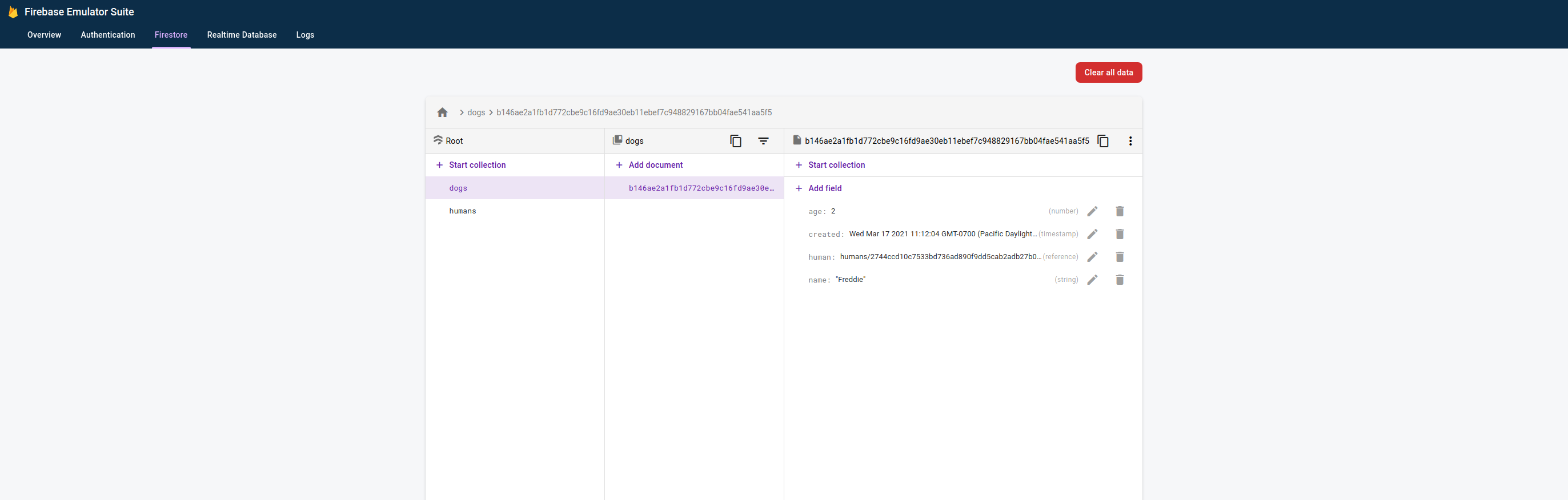

I’ve written before about Ackal’s use of Firestore and subscribing to Firestore document CRUD events:

- Routing Firestore events to GKE with Eventarc

- Cloud Firestore Triggers in Golang using Firestore triggers

I find Google’s Eventarc documentation confusing and, in typical Google fashion, even though the sources are open, you often need to do some legwork to find the relevant ones, viz:

- Google’s Protobufs for Eventarc (using cloudevents): google-cloudevents¹

- Convenience types (since you can generate these yourself using protoc): language-specific types generated from the above, e.g. google-cloudevents-go, google-cloudevents-python etc.

¹ IIUC, Eventarc is the Google service. It carries Google Events that are CloudEvents. These are defined by protocol buffer schemas.
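The envelope side of such an event can be sketched in Python. This relies on the CloudEvents HTTP binding, which the post doesn’t describe. An event delivered in binary content mode carries its attributes as `ce-*` HTTP headers and its data as the raw body. For Firestore events that body is a protocol buffer message, which is what the google-cloudevents types decode. The header values in the test are illustrative.

```python
"""Sketch: reading the envelope of a binary-mode CloudEvent."""

# Attributes every CloudEvent must carry (CloudEvents v1.0)
REQUIRED = ("id", "source", "type", "specversion")


def cloudevent_attributes(headers) -> dict[str, str]:
    """Collect ce-* HTTP headers (any mapping with .items()) into attributes.

    In binary content mode the event data is the raw HTTP body, and its
    media type (datacontenttype) travels in the Content-Type header.
    """
    attributes: dict[str, str] = {}
    for name, value in headers.items():
        lowered = name.lower()  # HTTP header names are case-insensitive
        if lowered.startswith("ce-"):
            attributes[lowered[len("ce-"):]] = value
        elif lowered == "content-type":
            attributes["datacontenttype"] = value
    missing = [attr for attr in REQUIRED if attr not in attributes]
    if missing:
        raise ValueError(f"not a binary-mode CloudEvent; missing {missing}")
    return attributes
```

The attributes (notably `type`) say which generated message to decode the body into. That decoding step is where the protobuf schemas and SDKs above come in.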

Prost! Tonic w/ a dash of JSON

I naively (!) began exploring JSON marshaling of Protobufs in Rust. Other Protobuf language SDKs include JSON marshaling, making the process straightforward. I was to learn that, in Rust, it’s not so simple. Unfortunately for me, this continues to discourage my further use of Rust (Rust is just hard).

My goal was to marshal an arbitrary protocol buffer message that included a oneof field. I was unable to JSON marshal the Rust types generated by tonic for such a message.

Navigating Koyeb's API with Rust

I wrote about Navigating Koyeb’s Golang SDK. That client is generated by the OpenAPI Generator project from Koyeb’s Swagger (now OpenAPI) REST API spec.

This post shows how to generate a Rust SDK using the Generator and provides a very basic example of using the SDK.

The Generator will create a Rust library project:

```bash
VERS="v7.2.0"
PACKAGE_NAME="koyeb-api-client-rs"
PACKAGE_VERS="1.0.0"

podman run \
--interactive --tty --rm \
--volume=${PWD}:/local \
docker.io/openapitools/openapi-generator-cli:${VERS} \
generate \
-g=rust \
-i=https://developer.koyeb.com/public.swagger.json \
-o=/local/${PACKAGE_NAME} \
--additional-properties=\
packageName=${PACKAGE_NAME},\
packageVersion=${PACKAGE_VERS}
```

This will create the project in ${PWD}/${PACKAGE_NAME} including the documentation at:

Kubernetes Operators

Ackal uses a Kubernetes Operator to orchestrate the lifecycle of its health checks. Ackal’s Operator is written in Go using kubebuilder.

Yesterday, my interest was piqued by a MetalBear blog post Writing a Kubernetes Operator [in Rust]. I spent some time reimplementing one of Ackal’s CRDs (Check) using kube-rs and not only refreshed my Rust knowledge but learned a bunch more about Kubernetes and Operators.

While rummaging around the Kubernetes documentation, I discovered flant’s Shell-operator and spent some time today exploring its potential.

pest: parsing in Rust

A Microsoft engineer introduced me to pest as a way to add service filtering to a ZeroConf plugin that I’m prototyping for Akri. It’s been fun to learn, but I worry that, because I won’t use it frequently, I’m going to quickly forget what I’ve done. So, here are my notes.

Here’s the problem: I’d like to provide users of the ZeroConf plugin with a string-based filter that lets them filter the services discovered when the Akri agent browses a network.
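To illustrate what such a filter has to do, here is a hand-rolled Python sketch. It is a small recursive-descent parser, not the post’s pest (PEG) grammar, and the syntax it accepts is hypothetical: `field == "value"` or `field != "value"`, joined by `&&` or `||`, with `&&` binding tighter. Field names are whatever keys a discovered service’s properties dict happens to have.

```python
"""Sketch: compiling a hypothetical service-filter string into a predicate."""
import re
from typing import Callable

Predicate = Callable[[dict], bool]

# One token: an operator, a double-quoted string, or a bare identifier
TOKEN = re.compile(r'\s*(?:(&&|\|\||==|!=)|"([^"]*)"|([A-Za-z_][A-Za-z0-9_]*))')


def tokenize(text: str) -> list[tuple[str, str]]:
    tokens: list[tuple[str, str]] = []
    pos, end = 0, len(text.rstrip())
    while pos < end:
        match = TOKEN.match(text, pos)
        if not match:
            raise SyntaxError(f"unexpected input at {pos}: {text[pos:]!r}")
        op, string, ident = match.groups()
        if op:
            tokens.append(("op", op))
        elif string is not None:
            tokens.append(("str", string))
        else:
            tokens.append(("ident", ident))
        pos = match.end()
    return tokens


def parse(text: str) -> Predicate:
    """Compile a filter string into a predicate over a service's properties."""
    tokens = tokenize(text)
    pos = 0

    def peek() -> tuple:
        return tokens[pos] if pos < len(tokens) else (None, None)

    def take(kind: str) -> str:
        nonlocal pos
        tok_kind, value = peek()
        if tok_kind != kind:
            raise SyntaxError(f"expected {kind}, got {value!r}")
        pos += 1
        return value

    def comparison() -> Predicate:
        field, op, value = take("ident"), take("op"), take("str")
        if op == "==":
            return lambda svc: svc.get(field) == value
        if op == "!=":
            return lambda svc: svc.get(field) != value
        raise SyntaxError(f"expected == or !=, got {op!r}")

    def conjunction() -> Predicate:  # && binds tighter than ||
        preds = [comparison()]
        while peek() == ("op", "&&"):
            take("op")
            preds.append(comparison())
        return lambda svc: all(p(svc) for p in preds)

    def disjunction() -> Predicate:
        preds = [conjunction()]
        while peek() == ("op", "||"):
            take("op")
            preds.append(conjunction())
        return lambda svc: any(p(svc) for p in preds)

    predicate = disjunction()
    if pos != len(tokens):
        raise SyntaxError(f"unexpected trailing input: {tokens[pos:]}")
    return predicate
```

A grammar tool like pest replaces the hand-written `tokenize`/`conjunction`/`disjunction` functions with declarative rules. The structure of the resulting parse (comparisons nested inside `&&` inside `||`) is the same idea.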

Akri

For the past couple of weeks, I’ve been playing around with Akri, a Microsoft (DeisLabs) project for building a connected edge with Kubernetes. The combination of Kubernetes, IoT, Rust (and Golang) makes this all compelling to me.

Initially, I deployed an Akri End-to-End to MicroK8s on Google Compute Engine (link) and Digital Ocean (link). But I was interested in creating my own example and so have proposed a very (!) simple HTTP-based protocol.

This blog post summarizes my thoughts about Akri and explains the HTTP protocol implementation, in the hope that this helps others.

Deploying a Rust HTTP server to DigitalOcean App Platform

DigitalOcean launched an App Platform with many Supported Languages and Frameworks. I used Golang first, then wondered how to use non-natively-supported languages, i.e. Rust.

The good news is that Docker is a supported framework, so you can run pretty much anything.

Repo: https://github.com/DazWilkin/do-apps-rust

Rust

I’m a Rust noob and always receptive to feedback on improving the code. I tried to mirror the Golang example. I’m using rocket and rocket-prometheus for the first time:

You will want to install Rust nightly (as Rocket has a dependency that requires it), and then you can override the default toolchain for the current project using:

Minimizing WASM binaries

I’ve spent time recently playing around with WebAssembly (WASM) and waPC. Rust and WASM were born at Mozilla, and there’s a natural affinity for writing WASM binaries in Rust. For the WASM examples I’ve been using in WASM Transparency, waPC and MsgPack, and waPC and Protobufs, I’ve created 3 WASM binaries: complex.wasm, simplex.wasm and fabcar.wasm. Each is about 2.5MB when built with:

```bash
cargo build --target=wasm32-unknown-unknown --release
```

The Rust and WebAssembly book has an excellent section titled Shrinking .wasm Code Size. So, let’s see what help that provides.

WASM Transparency

I’ve been playing around with a proof-of-concept combining WASM and Trillian. The hypothesis was to explore using WASM as a form of chaincode with Trillian. The project works but it’s far from being a chaincode-like solution.

Let’s start with a couple of (trivial) examples and then I’ll explain what’s going on and how it’s implemented.

```text
2020/08/14 18:42:17 [main:loop:dynamic-invoke] Method: mul
2020/08/14 18:42:17 [random:New] Message
2020/08/14 18:42:17 [random:New] Float32
2020/08/14 18:42:17 [random:New] Float32
2020/08/14 18:42:17 [random:New] Message
2020/08/14 18:42:17 [random:New] Float32
2020/08/14 18:42:17 [random:New] Float32
2020/08/14 18:42:17 [Client:Invoke] Metadata: complex.wasm
2020/08/14 18:42:17 [main:loop:dynamic-invoke] Success: result:{real:0.036980484 imag:0.3898267}
```

After shipping a Rust-sourced WASM solution (complex.wasm) to the WASM Transparency server, the client invokes a method (mul) that it exposes, using a dynamically generated request message, and outputs the response. Woo hoo! Yes, an expensive way to multiply complex numbers.
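For reference, this is the arithmetic that `mul` has to perform, sketched in Python rather than in the Rust that is compiled to complex.wasm. The sketch assumes each operand is a `{real, imag}` pair, the shape of the `result` in the log above. The product follows (a + bi)(c + di) = (ac - bd) + (ad + bc)i.

```python
"""Sketch of complex multiplication, as a mul method would compute it."""
from dataclasses import dataclass


@dataclass
class Complex:
    real: float
    imag: float


def mul(x: Complex, y: Complex) -> Complex:
    """(a + bi)(c + di) = (ac - bd) + (ad + bc)i"""
    return Complex(
        real=x.real * y.real - x.imag * y.imag,
        imag=x.real * y.imag + x.imag * y.real,
    )
```

For example, `mul(Complex(1, 2), Complex(3, 4))` gives `Complex(real=-5, imag=10)`.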

waPC and MsgPack (Rust|Golang)

As my reader will know (Hey Mom!), I’ve been noodling around with WASM and waPC. I’ve been exploring ways to pass structured messages across the host:guest boundary.

Protobufs was my first choice. @KevinHoffman created waPC and waSCC, and he explained to me that waSCC uses Message Pack.

It’s slightly surprising to me (still) that technologies like this exist, with everyone else seemingly using them, and I’ve not heard of them. I don’t expect to know everything, but I’m surprised I hadn’t stumbled upon msgpack until now.
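To see what MessagePack actually puts on the wire, here is a deliberately tiny Python encoder for just the subset a `{real, imag}` message needs. Real code would use a msgpack library. The encodings follow the MessagePack spec: fixmap (`0x80 | n`, up to 15 entries), fixstr (`0xa0 | len`, up to 31 bytes) and float 64 (`0xcb` plus 8 big-endian bytes).

```python
"""Sketch: a minimal MessagePack encoder for small maps of strings to floats."""
import struct


def pack(value) -> bytes:
    if isinstance(value, dict):
        if len(value) > 15:
            raise ValueError("sketch only supports fixmap (<= 15 entries)")
        out = bytes([0x80 | len(value)])  # fixmap header carries the entry count
        for key, item in value.items():
            out += pack(key) + pack(item)
        return out
    if isinstance(value, str):
        data = value.encode("utf-8")
        if len(data) > 31:
            raise ValueError("sketch only supports fixstr (<= 31 bytes)")
        return bytes([0xA0 | len(data)]) + data  # fixstr header carries the length
    if isinstance(value, float):
        return b"\xcb" + struct.pack(">d", value)  # float 64, big-endian
    raise TypeError(f"unsupported type: {type(value).__name__}")
```

Unlike a Protobuf message, the field names (`real`, `imag`) travel with every message, so neither side of the host:guest boundary needs a shared schema to decode it.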

Envoy WASM filters in Rust

A digression, thanks to Sal Rashid, who’s exploring WASM filters with Envoy.

The documentation is sparse but:

There is a Rust SDK but it’s not documented:

I found two useful posts by Rustaceans who were able to make use of it:

Here’s my simple use of the SDK’s examples.

wasme

```bash
curl -sL https://run.solo.io/wasme/install | sh
PATH=${PATH}:${HOME}/.wasme/bin
wasme --version
```

It may be possible to avoid creating an account on WebAssemblyHub if you’re staying local.

Rust implementation of Crate Transparency using Google Trillian

I’ve been hacking on a Rust-based transparency application for Google Trillian. As appears to be my fixation, this personality is for another package manager. This time it is for Rust’s Crates, often found on crates.io, Rust’s Package Registry. I discussed this project earlier this month in Rust Crate Transparency && Rust SDK for Google Trillian, and an earlier approach for Python’s packages in pypi-transparency.

This time, of course, I’m using Rust, and, as a first for me, for the gRPC server implementation (aka “personality”) too. I’ve been lazy, thanks to the excellent gRPCurl, and have been using it by way of a client. I’m more familiar with Golang, and I’ve written (most) other Trillian personalities in Golang. So I resorted to quickly implementing Crate Transparency in Golang too, in order to uncover bugs in the Rust implementation. I’ll write a follow-up post on the complexity I seem to struggle with when using protobufs and gRPC [in Golang].

Rust Crate Transparency && Rust SDK for Google Trillian

I’m noodling on the utility of a Transparency solution for Rust Crates. When developers publish crates (using Cargo), a bunch of metadata is associated with each crate, e.g. protobuf. As with Golang Modules, Python packages on PyPi etc., there appears to be utility in making tamperproof records of these publications. Then, other developers may confirm that a crate pulled from crates.io is highly unlikely to have been changed.

On Linux, Cargo stores downloaded crates under ${HOME}/.cargo/registry. In the case of the latest version (2.12.0) of protobuf, on my machine, I have:
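A Python sketch of what a log entry for a crate might hash, under assumptions that go beyond the post. The entry records the SHA-256 of the `.crate` archive, the same digest the crates.io index publishes as `cksum`. The log uses RFC 6962-style Merkle hashing, as Trillian does: leaf hash = SHA-256(0x00 || leaf) and node hash = SHA-256(0x01 || left || right). The leaf layout (sorted-key JSON of name, version and digest) is hypothetical.

```python
"""Sketch: hashing a crate publication for an RFC 6962-style Merkle log."""
import hashlib
import json


def crate_digest(crate_bytes: bytes) -> str:
    """SHA-256 of the .crate archive, hex-encoded."""
    return hashlib.sha256(crate_bytes).hexdigest()


def leaf_data(name: str, version: str, crate_bytes: bytes) -> bytes:
    # Hypothetical leaf encoding: compact JSON with sorted keys, so the same
    # publication always produces byte-identical leaf data
    return json.dumps(
        {"name": name, "version": version, "sha256": crate_digest(crate_bytes)},
        sort_keys=True,
        separators=(",", ":"),
    ).encode("utf-8")


def rfc6962_leaf_hash(data: bytes) -> bytes:
    """Leaf hashes are domain-separated from node hashes by a 0x00 prefix."""
    return hashlib.sha256(b"\x00" + data).digest()


def rfc6962_node_hash(left: bytes, right: bytes) -> bytes:
    """Interior nodes hash their children with a 0x01 prefix."""
    return hashlib.sha256(b"\x01" + left + right).digest()
```

A developer who later downloads the crate can recompute `crate_digest` locally. They can then ask the log for an inclusion proof of the corresponding leaf.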

gRPC, Cloud Run & Endpoints

<3 Google but there’s quite often an assumption that we’re all sitting around the engineering table and, of course, we’re not.

Cloud Endpoints is a powerful offering but – IMO – it’s super confusing to understand and complex to deploy.

If you’re familiar with the motivations behind service meshes (e.g. Istio), Cloud Endpoints fits in a similar niche (“neesh” or “nitch”?). The underlying ambition is that developers can take existing code and, by adding a proxy (or sidecar), gain general-purpose abstractions, security, logging etc.

PyPi Transparency Client (Rust)

I’ve finally been able to hack my way through to a working Rust gRPC client (for PyPi Transparency).

It’s not very good: poorly structured, hacky etc. but it serves the purpose of giving me a foothold into Rust development so that I can evolve it as I learn the language and its practices.

There are several Rust crates (SDKs) for gRPC, and there’s no sanctioned SDK for Rust on grpc.io.

I chose stepancheg’s grpc-rust because it’s a pure Rust implementation (not built atop the C implementation).

Tag: Exporter

Prometheus Exporter documentation

I have a new router that runs OpenWRT, and it provides a Prometheus (Node) Exporter (written in Lua).

NOTE The prometheus-node-exporter-lua Exporter package is a 4KB (!) script.

As I’m sure is a common experience for others, it’s great that there’s an Exporter and a wealth of metrics exposed by it. One problem is that there’s no guidance on how to use these metrics to construct useful PromQL queries and Alertmanager alerts.

I think it would be useful for Exporters to publish a documentation endpoint (perhaps /docs to go along with /metrics) that would include such guidance.
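As a thought experiment, here is a Python sketch of an exporter that serves such guidance next to its metrics. Everything in it is hypothetical: the `/docs` path, its JSON shape, the metric name, the PromQL and the alert rule. None of it comes from prometheus-node-exporter-lua or any other real exporter. The `/metrics` content type is Prometheus’ text exposition format. The port is arbitrary.

```python
"""Sketch of the /docs idea: usage guidance served alongside /metrics."""
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical metric, in the Prometheus text exposition format
METRICS = (
    "# HELP example_up Whether the example target is up.\n"
    "# TYPE example_up gauge\n"
    "example_up 1\n"
)

# Hypothetical guidance: what the metric means and how to query/alert on it
DOCS = {
    "example_up": {
        "description": "1 if the target responded to the last probe, else 0.",
        "promql": "avg_over_time(example_up[5m]) < 0.9",
        "alert": {"alert": "ExampleFlapping", "expr": "avg_over_time(example_up[5m]) < 0.9", "for": "10m"},
    }
}


def route(path: str) -> tuple[int, str, bytes]:
    """Map a request path to (status, content type, body)."""
    if path == "/metrics":
        return 200, "text/plain; version=0.0.4", METRICS.encode()
    if path == "/docs":
        return 200, "application/json", json.dumps(DOCS, indent=2).encode()
    return 404, "text/plain", b"not found\n"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, content_type, body = route(self.path)
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    HTTPServer(("", port), Handler).serve_forever()
```

Keeping the guidance keyed by metric name means a dashboard or alerting tool could fetch `/docs` and show the suggested query next to each metric.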

Prometheus Exporter for USGS Water Data service

I’m a little obsessed with creating Prometheus Exporters:

- Prometheus Exporter for Azure

- Prometheus Exporter for crt.sh

- Prometheus Exporter for Fly.io

- Prometheus Exporter for GoatCounter

- Prometheus Exporter for Google Cloud

- Prometheus Exporter for Koyeb

- Prometheus Exporter for Linode

- Prometheus Exporter for PorkBun

- Prometheus Exporter for updown.io

- Prometheus Exporter for Vultr

All of these were written to scratch an itch.

In the case of the cloud platform exporters (Azure, Fly, Google, Linode, Vultr etc.), it’s an overriding anxiety that I’ll leave resources deployed on these platforms. Running an exporter that ships alerts to Pushover and Gmail provides me with a support mechanism.

Prometheus Exporter for Koyeb

Yet another cloud platform exporter for resource|cost management. This time for Koyeb with Koyeb Exporter.

Deploying resources to cloud platforms generally incurs cost based on the number of resources deployed, the time each resource is deployed and the cost per period of time of each resource. It is useful to be able to automatically measure and alert on all the resources deployed on all the platforms that you’re using, and this is an intent of these exporters.
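That cost model is simple arithmetic: total cost is the sum, over resources, of count × time deployed × rate per unit of time. A minimal Python sketch follows. The resource names and hourly rates in the test are made up.

```python
"""Sketch: cost = sum over resources of count x hours deployed x hourly rate."""
from dataclasses import dataclass


@dataclass
class Deployment:
    resource: str
    count: int
    hours: float
    hourly_rate: float  # currency units per resource-hour


def total_cost(deployments: list[Deployment]) -> float:
    return sum(d.count * d.hours * d.hourly_rate for d in deployments)
```

An exporter can observe two of the three terms directly, the count and (by being scraped repeatedly) how long that count persists. That is why a resource-count gauge is a reasonable basis for cost alerts.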

Prometheus Exporter for Azure (Container Apps)

I’ve written Prometheus Exporters for various cloud platforms. My motivation for writing these Exporters is that I want a unified mechanism to track my usage of these platforms’ services. It’s easy to deploy a service on a platform and inadvertently leave it running (running up a bill). The set of exporters is:

- Prometheus Exporter for Azure

- Prometheus Exporter for Fly.io

- Prometheus Exporter for GCP

- Prometheus Exporter for Linode

- Prometheus Exporter for Vultr

This post describes the recently-added Azure Exporter which only provides metrics for Container Apps and Resource Groups.

Prometheus Exporters for fly.io and Vultr

I’ve been on a roll building utilities this week. I developed a Service Health dashboard for my “thing”, a Prometheus Exporter for Fly.io and today, a Prometheus Exporter for Vultr. This is motivated by the fear that I will forget a deployed Cloud resource and incur a horrible bill.

I’ve now written several Prometheus Exporters for cloud platforms:

- Prometheus Exporter for GCP

- Prometheus Exporter for Linode

- Prometheus Exporter for Fly.io

- Prometheus Exporter for Vultr

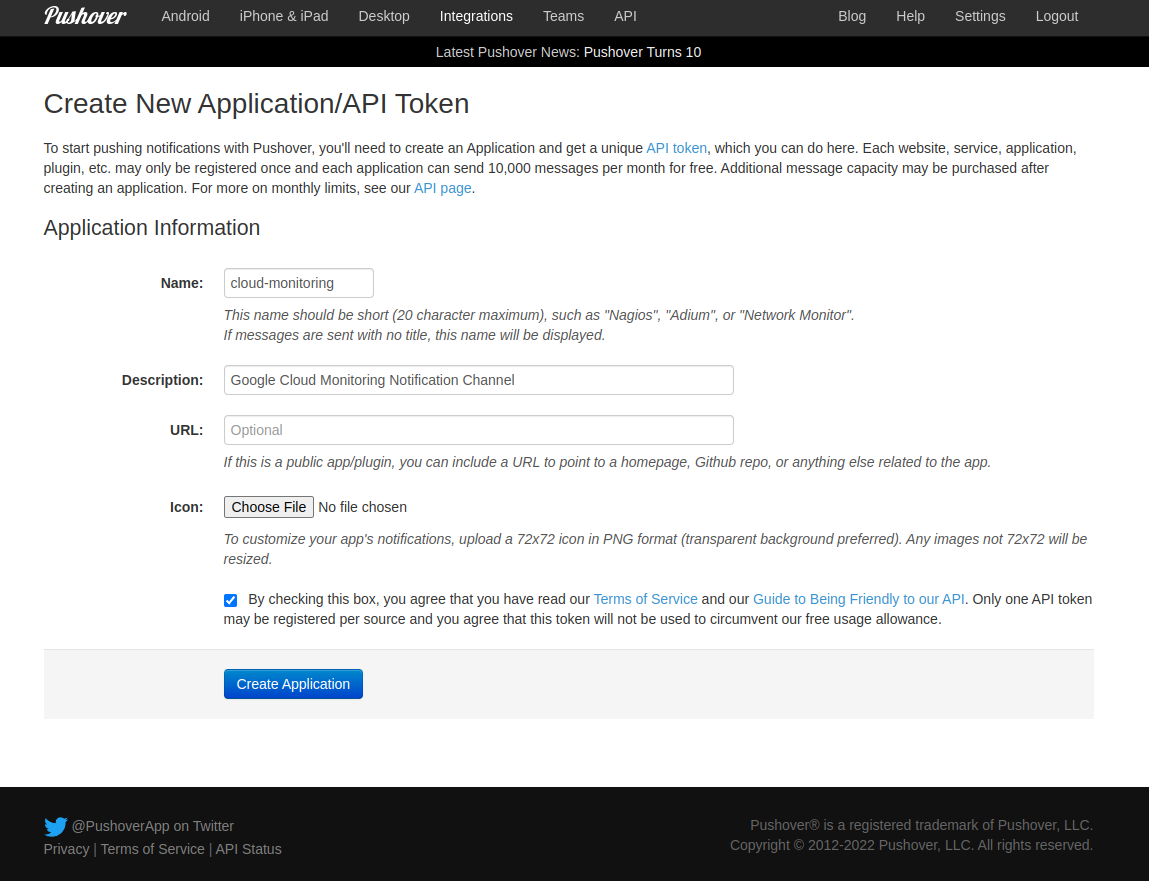

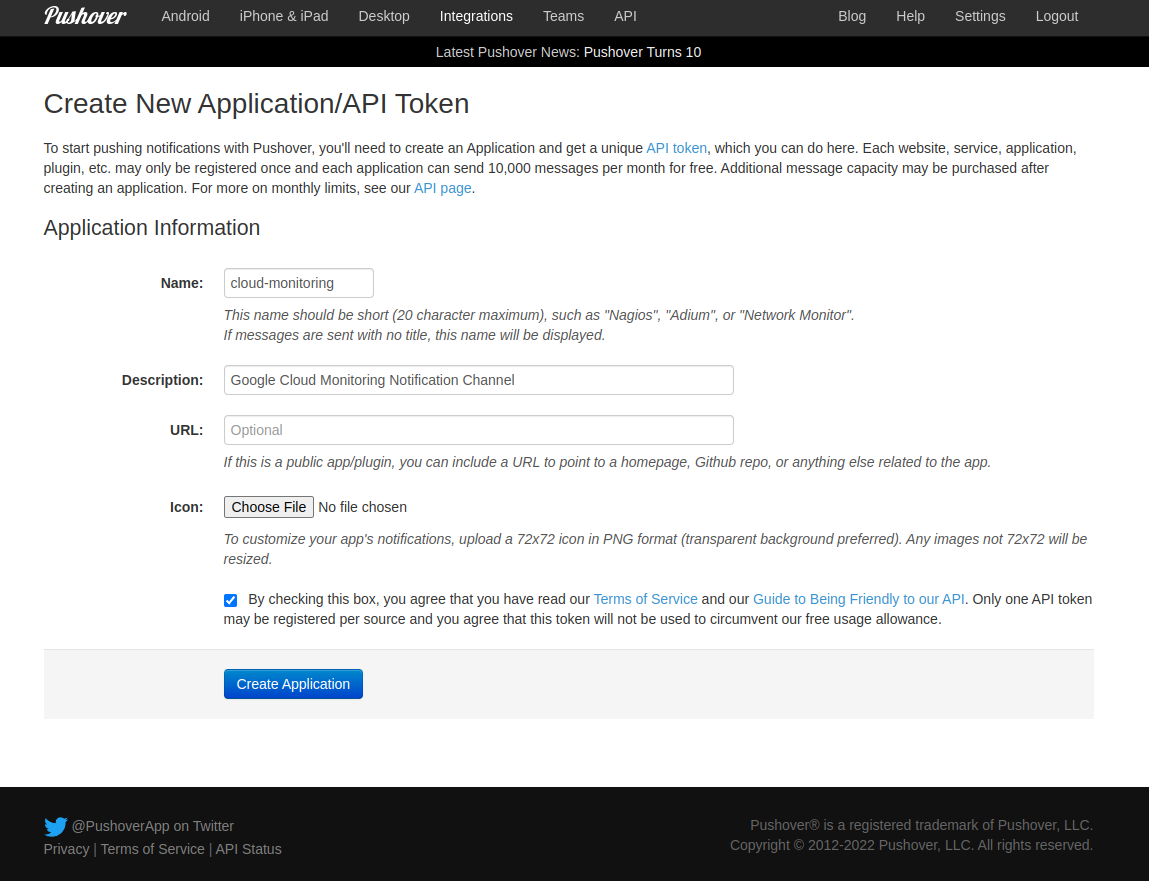

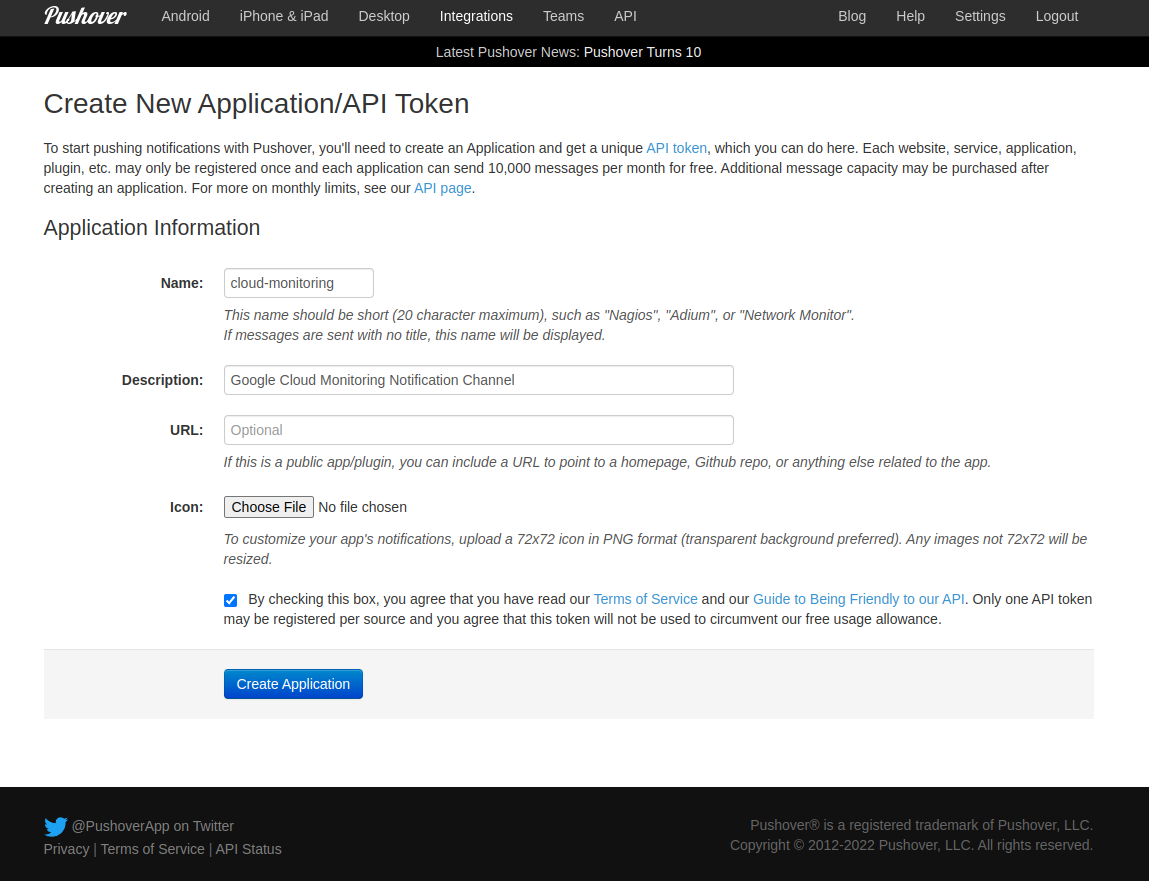

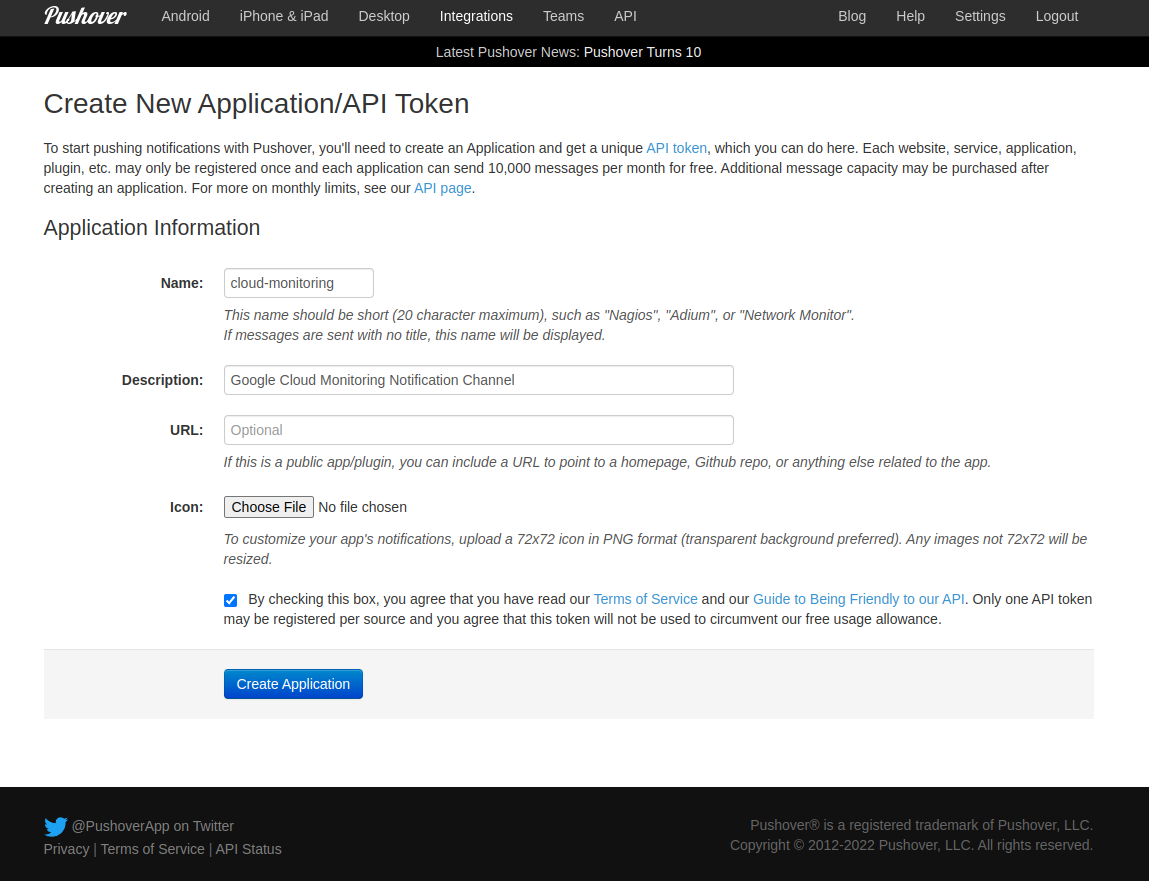

Each of them monitors resource deployments and produces resource count metrics that can be scraped by Prometheus and alerted on with Alertmanager. I have Alertmanager configured to send notifications to Pushover. Last week I wrote an integration for Google Cloud Monitoring to send notifications to Pushover too.
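What “resource count metrics” look like to Prometheus can be sketched in Python. The metric name and the `platform`/`resource` labels are hypothetical, not those of the exporters above. The `# HELP`/`# TYPE`/sample-line layout is Prometheus’ text exposition format. An alerting rule could then fire whenever such a gauge stays above zero.

```python
"""Sketch: rendering resource-count gauges in Prometheus' text format."""


def escape(value: str) -> str:
    """Escape a label value: backslash, double quote and newline."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counts(name: str, help_text: str, counts: dict[tuple[str, str], int]) -> str:
    """counts maps (platform, resource) label pairs to the current count."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for (platform, resource), count in sorted(counts.items()):
        lines.append(f'{name}{{platform="{escape(platform)}",resource="{escape(resource)}"}} {count}')
    return "\n".join(lines) + "\n"
```

Exposing counts as gauges (rather than, say, one series per resource ID) keeps cardinality low. It is also all that a “something is still deployed” alert needs.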

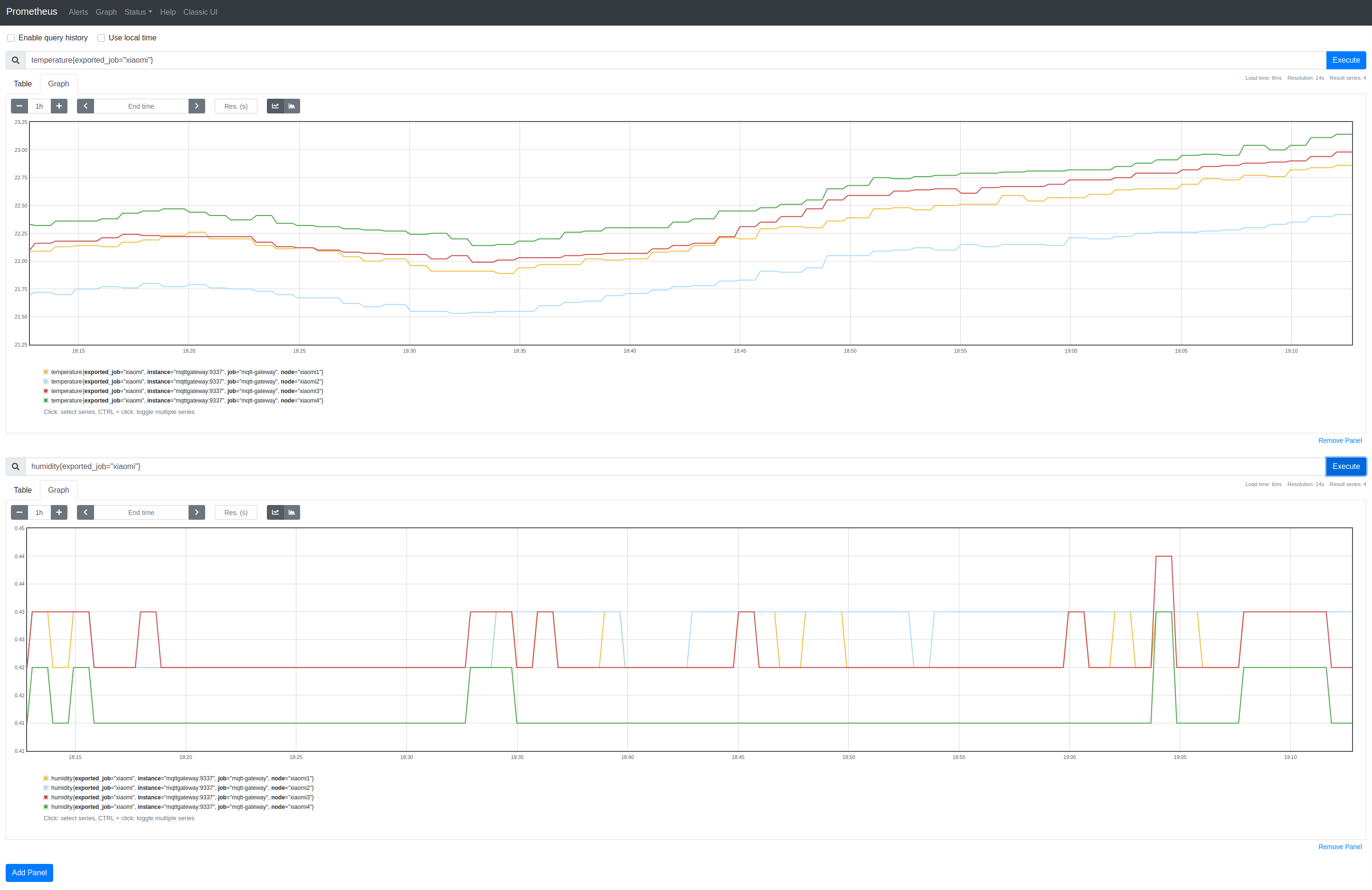

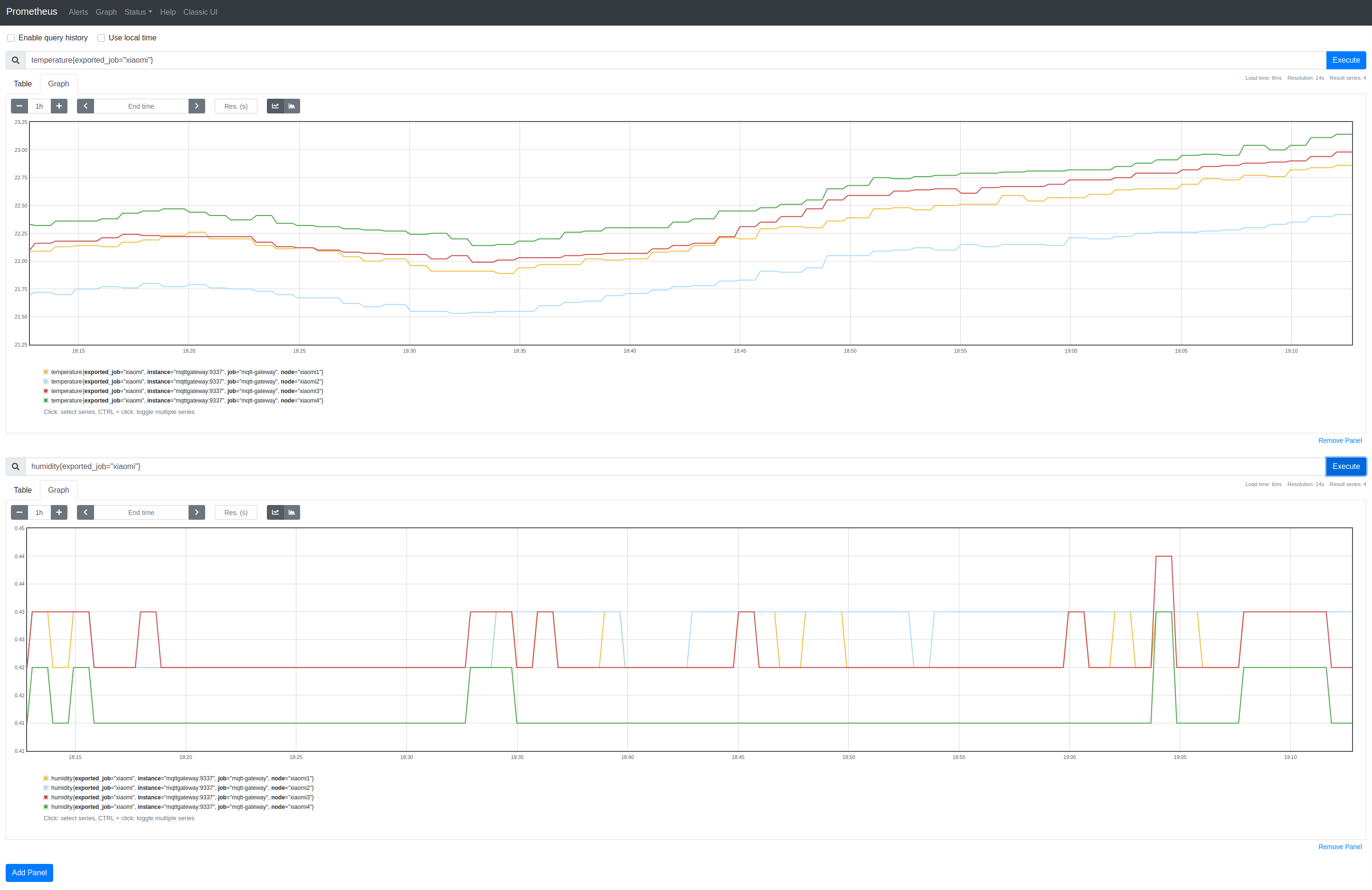

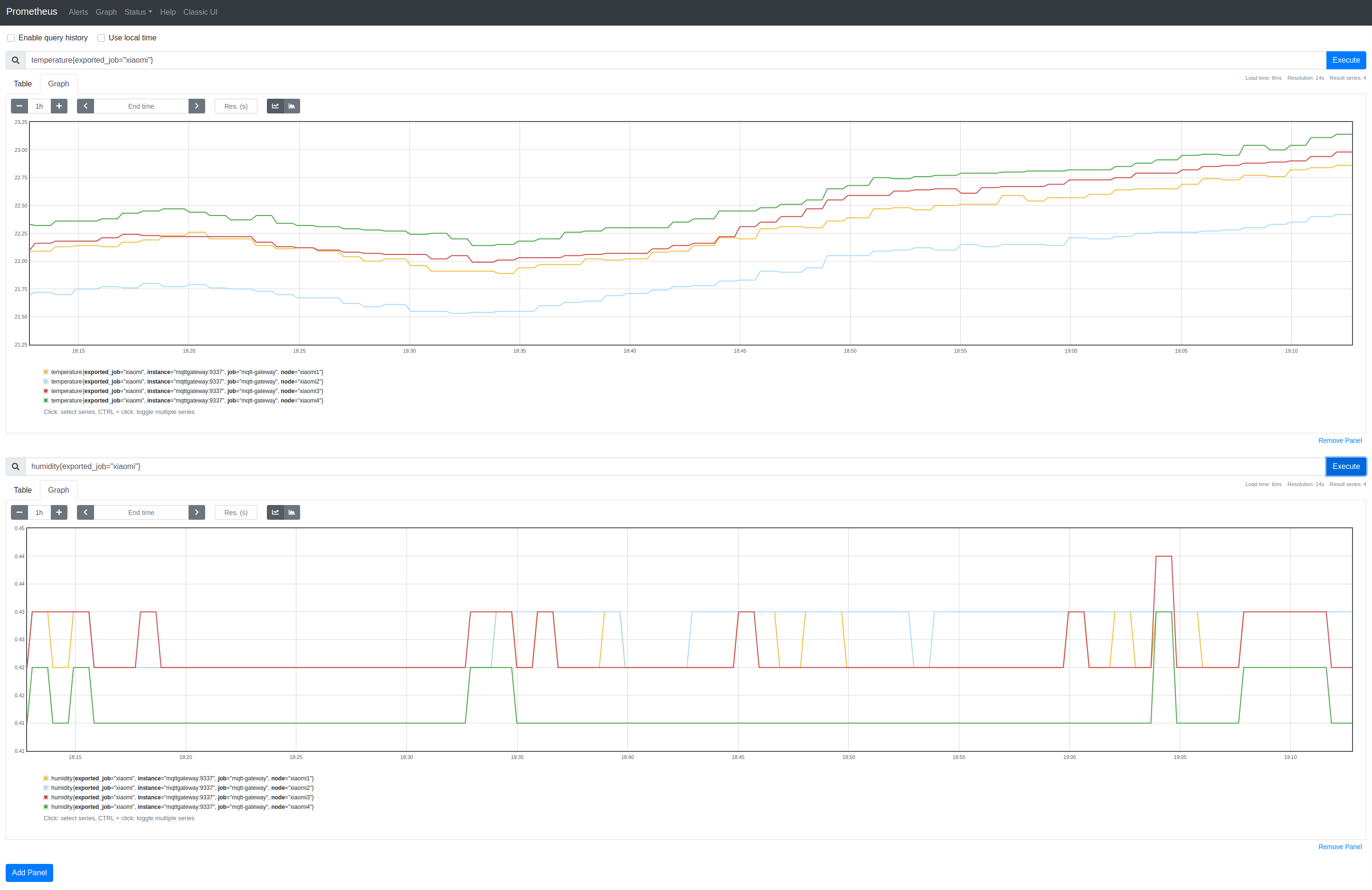

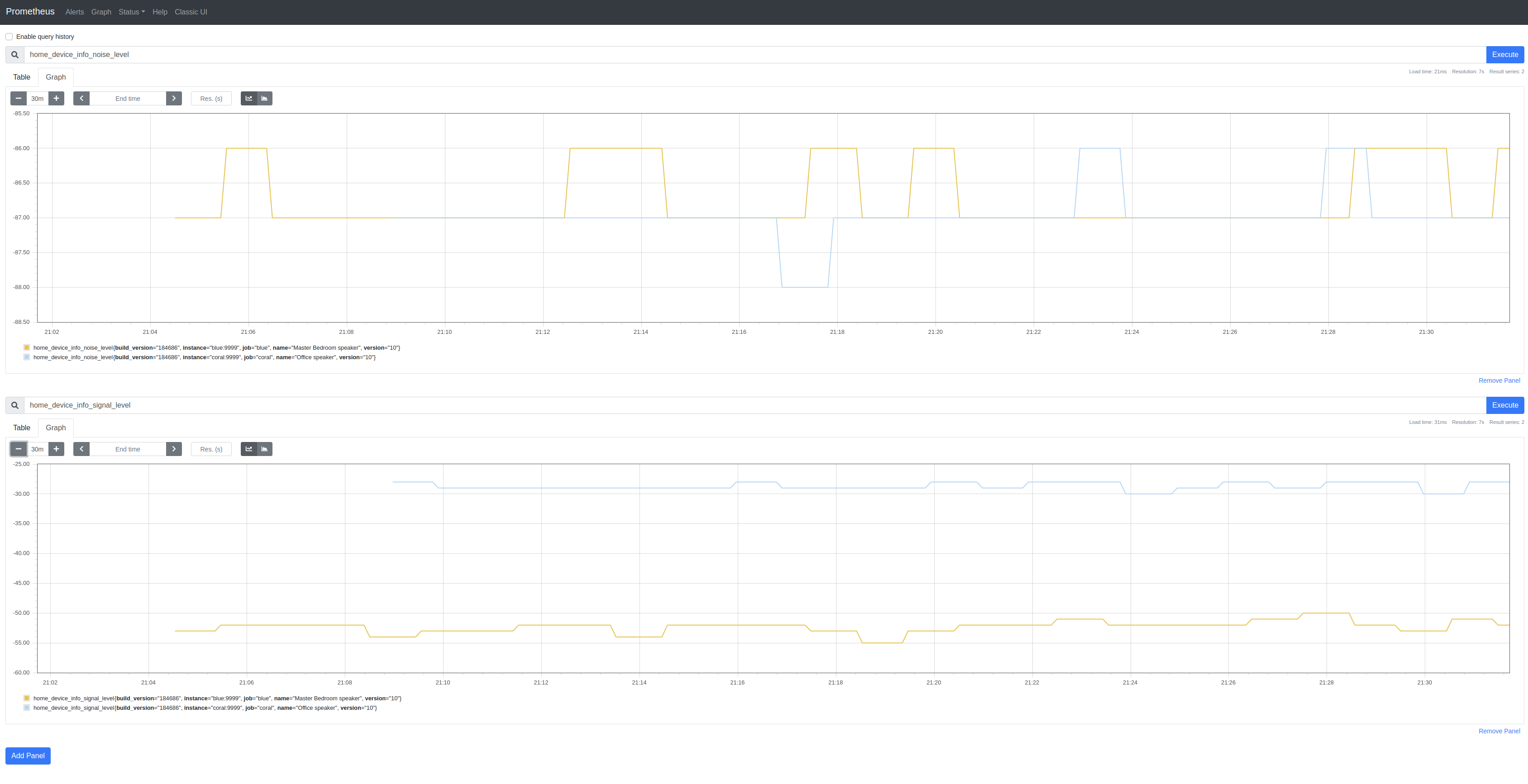

Google Home Exporter

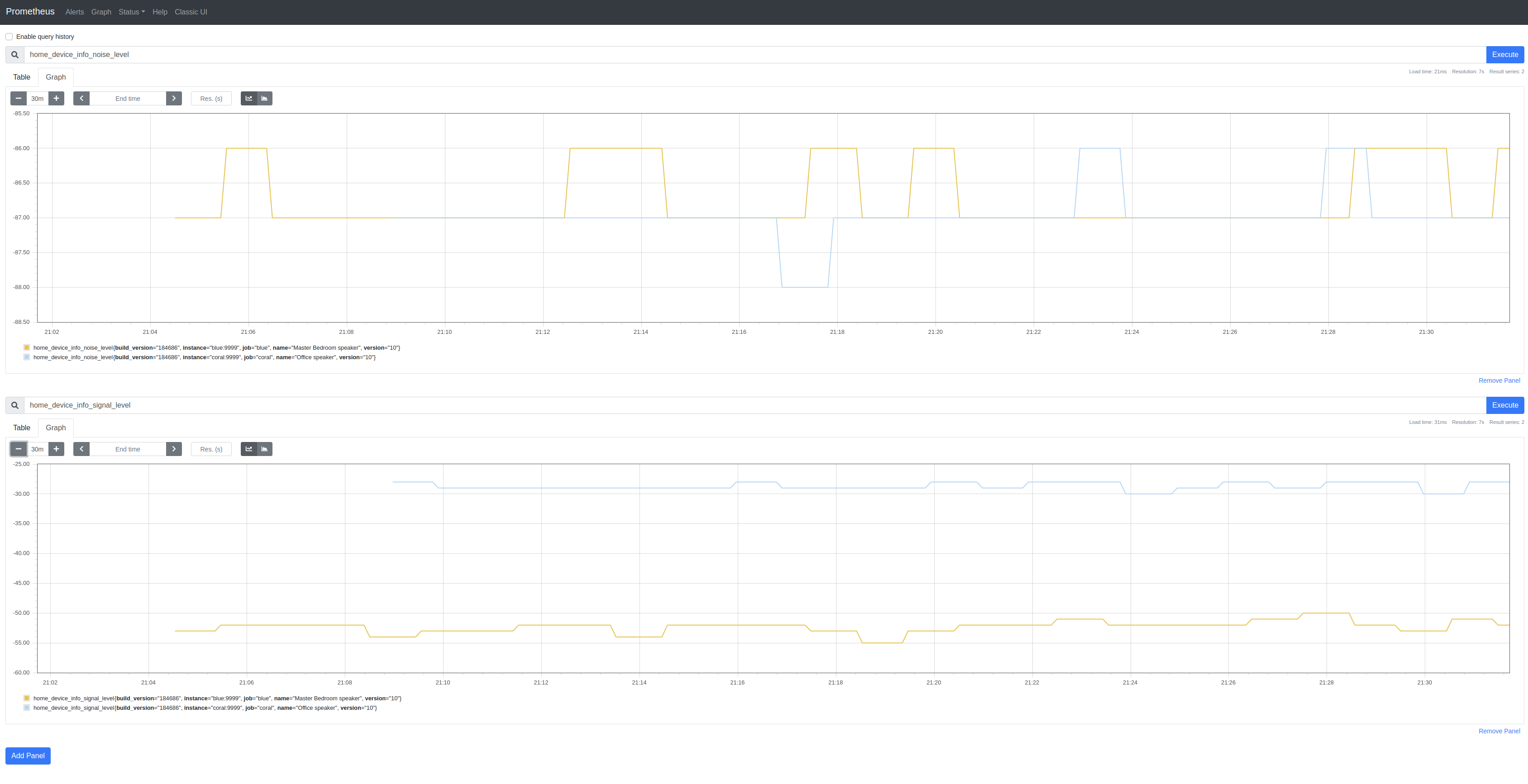

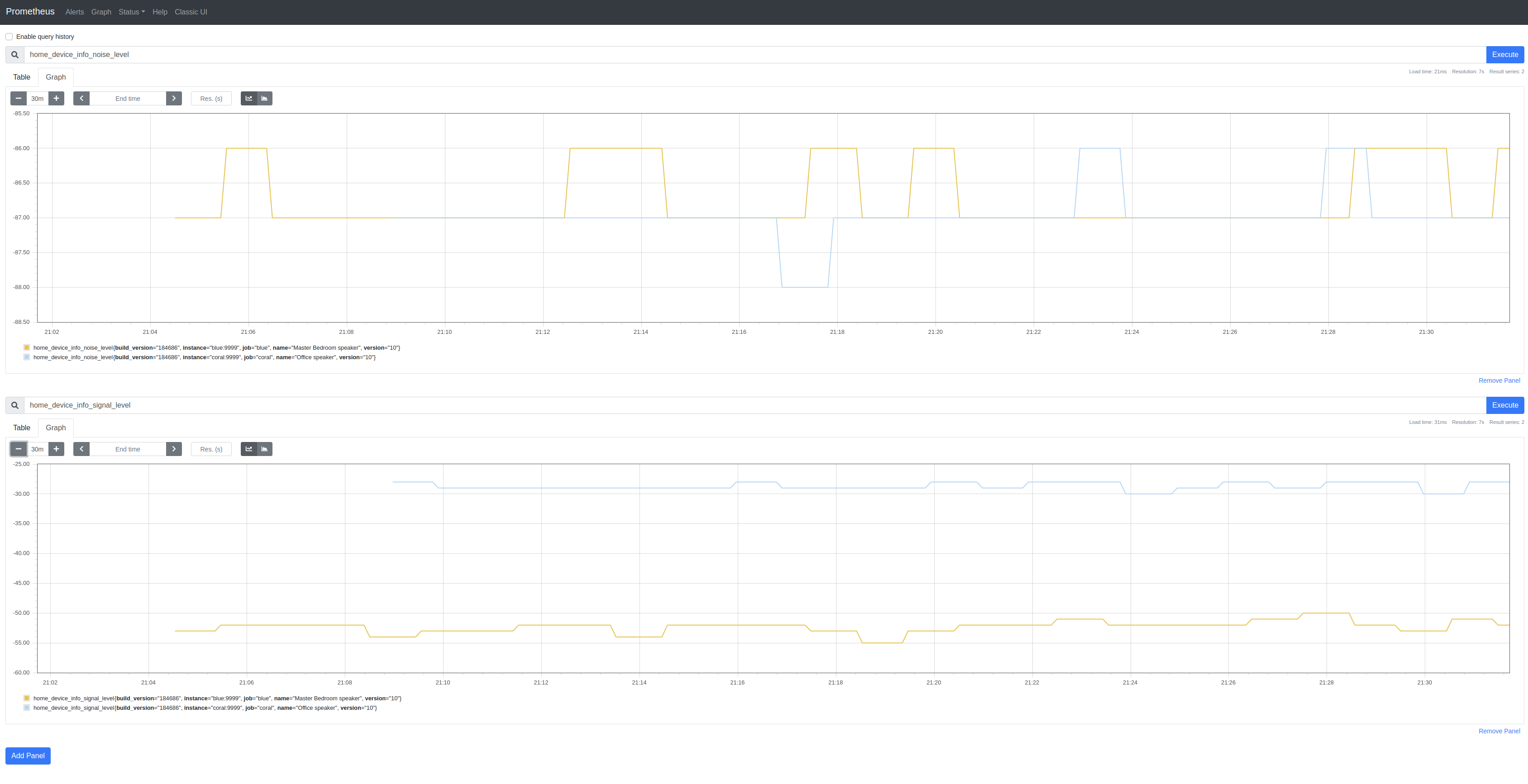

I’m obsessing over Prometheus exporters. First came Linode Exporter, then GCP Exporter and, on Sunday, I stumbled upon a reverse-engineered API for Google Home devices and so wrote a very basic Google Home SDK and a similarly basic Google Home Exporter:

The SDK only implements /setup/eureka_info and then only some of the returned properties. There’s not a lot of metric-like data to use besides SignalLevel (signal_level) and NoiseLevel (noise_level). I’m not clear on the meaning of some of the properties.

Google Cloud Platform (GCP) Exporter

Earlier this week I discussed a Linode Prometheus Exporter.

I added metrics for Digital Ocean’s Managed Kubernetes service to @metalmatze’s Digital Ocean Exporter.

This left metrics for Google Cloud Platform (GCP), which has, for many years, been my primary cloud platform. So, today I wrote Prometheus Exporter for Google Cloud Platform.

All 3 of these exporters follow the template laid down by @metalmatze and, because each of these services has a well-written Golang SDK, it’s straightforward to implement an exporter for each of them.

Linode Prometheus Exporter

I enjoy using Prometheus and have toyed around with it for some time particularly in combination with Kubernetes. I signed up with Linode [referral] compelled by the addition of a managed Kubernetes service called Linode Kubernetes Engine (LKE). I have an anxiety that I’ll inadvertently leave resources running (unused) on a cloud platform. Instead of refreshing the relevant billing page, it struck me that Prometheus may (not yet proven) help.

The hypothesis is that a cloud-specific Prometheus exporter reporting aggregate usage of e.g. Linodes (instances), NodeBalancers, Kubernetes clusters etc. could form the basis of an alert mechanism using Prometheus’ alerting.

Tag: Prometheus

Prometheus MCP Server

I was unable to find a Model Context Protocol (MCP) server implementation for Prometheus. I had a quiet weekend and so I’ve been writing one: prometheus-mcp-server.

I used the code from the MCP for gRPC Health Checking protocol that I wrote about previously as a guide.

I wrote a series of stdio and HTTP tests to have confidence that the service works correctly, but I had no MCP host.

I discovered that Visual Studio Code, through its GitHub Copilot extension, has a preview feature that lets it function as an MCP host and access MCP servers.
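The core of such a server’s work can be sketched in Python. This is not prometheus-mcp-server’s code, and the tool shape is an assumption: a hypothetical “query” tool receives a PromQL expression from the MCP host and forwards it to Prometheus’ HTTP API instant-query endpoint, `/api/v1/query`. The tool then returns the `data` member of the JSON response. The base URL in the test is illustrative.

```python
"""Sketch: forwarding a PromQL query from an MCP tool call to Prometheus."""
import json
import urllib.parse
import urllib.request
from typing import Optional


def query_url(base: str, promql: str, time: Optional[str] = None) -> str:
    """Build an instant-query URL; time may be RFC 3339 or a Unix timestamp."""
    params = {"query": promql}
    if time is not None:
        params["time"] = time
    return f"{base.rstrip('/')}/api/v1/query?{urllib.parse.urlencode(params)}"


def run_query(base: str, promql: str) -> dict:
    """Execute the query; Prometheus wraps results in {"status", "data"}."""
    with urllib.request.urlopen(query_url(base, promql)) as response:
        payload = json.load(response)
    if payload.get("status") != "success":
        raise RuntimeError(payload.get("error", "query failed"))
    return payload["data"]
```

Whatever the MCP host sends as the tool’s arguments ends up URL-encoded in `query`. The `data` returned (a `resultType` plus `result`) is what the host’s model gets to read.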

Migrating Prometheus Exporters to Kubernetes

I have built Prometheus Exporters for multiple cloud platforms to track resources deployed across clouds:

- Prometheus Exporter for Azure

- Prometheus Exporter for crt.sh

- Prometheus Exporter for Fly.io

- Prometheus Exporter for GoatCounter

- Prometheus Exporter for Google Analytics

- Prometheus Exporter for Google Cloud

- Prometheus Exporter for Koyeb

- Prometheus Exporter for Linode

- Prometheus Exporter for PorkBun

- Prometheus Exporter for updown.io

- Prometheus Exporter for Vultr

Additionally, I’ve written two status service exporters:

These exporters are all derived from an exemplar DigitalOcean Exporter written by metalmatze, of which I maintain a fork.

Prometheus Protobufs and Native Histograms

I responded to a Stack Overflow question, Prometheus metric protocol buffer in gRPC, and it piqued my curiosity and got me yak shaving.

Prometheus used to support two exposition formats, including Protocol Buffers. It then dropped Protocol Buffers and has since re-added it (see Protobuf format). The Protobuf format has returned to support the experimental Native Histograms feature.

I’m interested in adding Native Histogram support to Ackal, so I thought I’d learn more about this metric type.
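The central idea of native histograms is exponential bucketing controlled by a single `schema` parameter, sketched here in Python. This comes from the Prometheus native histogram design, not the post. With schema n, bucket boundaries grow by a factor of base = 2^(2^-n), and bucket index i covers (base^(i-1), base^i]. The sketch handles positive observations only (no zero bucket, no negative buckets). It also uses plain floating-point logarithms, whereas real implementations take more care at exact boundaries.

```python
"""Sketch: which native-histogram bucket a positive observation lands in."""
import math


def bucket_index(value: float, schema: int) -> int:
    """Index i such that base**(i-1) < value <= base**i, base = 2**(2**-schema)."""
    if value <= 0:
        raise ValueError("sketch handles positive values only")
    # log_base(value) = log2(value) / log2(base) = log2(value) * 2**schema
    return math.ceil(math.log2(value) * 2 ** schema)


def bucket_bounds(index: int, schema: int) -> tuple[float, float]:
    """(lower, upper] boundaries of a bucket."""
    base = 2 ** (2 ** -schema)
    return base ** (index - 1), base ** index
```

A higher schema means finer buckets. Schema 0 doubles at each boundary (1, 2, 4, …), and schema 3 splits each doubling into 8 buckets. This is why a native histogram needs no hand-picked bucket list.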

Capturing e.g. CronJob metrics with GMP

The deployment of Kube State Metrics for Google Managed Prometheus creates both a PodMonitoring and a ClusterPodMonitoring.

The PodMonitoring resource scrapes metrics published on the metric-self port (8081).

The ClusterPodMonitoring scrapes metrics published on the metric port (8080), but its relabeling doesn’t keep cronjob-related metrics:

```bash
kubectl get clusterpodmonitoring/kube-state-metrics \
--output=jsonpath="{.spec.endpoints[0].metricRelabeling}" \
| jq -r .
```

```json
[
  {
    "action": "keep",
    "regex": "kube_(daemonset|deployment|replicaset|pod|namespace|node|statefulset|persistentvolume|horizontalpodautoscaler|job_created)(_.+)?",
    "sourceLabels": [
      "__name__"
    ]
  }
]
```

NOTE The regex does not include kube_cronjob and, of the job metrics, only includes kube_job_created.
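To check which metric names survive that `keep` rule, here is a quick Python sketch. Prometheus relabeling anchors the regex at both ends, so `re.fullmatch` is the closest Python equivalent. Python’s `re` and Prometheus’ RE2 agree for this particular pattern. The metric names are kube-state-metrics examples.

```python
"""Sketch: which kube-state-metrics names the GMP "keep" regex retains."""
import re

# The regex from the ClusterPodMonitoring above, split only for readability
KEEP = re.compile(
    r"kube_(daemonset|deployment|replicaset|pod|namespace|node|statefulset"
    r"|persistentvolume|horizontalpodautoscaler|job_created)(_.+)?"
)


def kept(metric_name: str) -> bool:
    """Relabel regexes are fully anchored, hence fullmatch."""
    return KEEP.fullmatch(metric_name) is not None


if __name__ == "__main__":
    for name in (
        "kube_pod_info",
        "kube_job_created",
        "kube_job_status_failed",
        "kube_cronjob_next_schedule_time",
    ):
        print(f"{name}: {'kept' if kept(name) else 'dropped'}")
```

Running it prints `kept` for the first two names and `dropped` for the other two. That confirms the cronjob (and most job) metrics are filtered out before they reach Managed Prometheus.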

Prometheus Operator support an auth proxy for Service Discovery

CRD linting

Returning to yesterday’s failing tests, it’s unclear how to introspect the E2E tests.

```bash
kubectl get namespaces
```

```text
NAME                      STATUS   AGE
...
allns-s2os2u-0-90f56669   Active   22h
allns-s2qhuw-0-6b33d5eb   Active   4m23s
```

```bash
kubectl get all \
--namespace=allns-s2os2u-0-90f56669
```

```text
No resources found in allns-s2os2u-0-90f56669 namespace.
```

```bash
kubectl get all \
--namespace=allns-s2qhuw-0-6b33d5eb
```

```text
NAME                                                        READY   STATUS             RESTARTS   AGE
pod/prometheus-operator-6c96477b9c-q6qm2                    1/1     Running            0          4m12s
pod/prometheus-operator-admission-webhook-68bc9f885-nq6r8   0/1     ImagePullBackOff   0          4m7s

NAME                          TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
service/prometheus-operator   ClusterIP   10.152.183.247   <none>        443/TCP   4m9s

NAME                                                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/prometheus-operator                     1/1     1            1           4m12s
deployment.apps/prometheus-operator-admission-webhook   0/1     1            0           4m7s

NAME                                                              DESIRED   CURRENT   READY   AGE
replicaset.apps/prometheus-operator-6c96477b9c                    1         1         1       4m13s
replicaset.apps/prometheus-operator-admission-webhook-68bc9f885   1         1         0       4m8s
```

```bash
kubectl logs deployment/prometheus-operator-admission-webhook \
--namespace=allns-s2qhuw-0-6b33d5eb
```

```text
Error from server (BadRequest): container "prometheus-operator-admission-webhook" in pod "prometheus-operator-admission-webhook-68bc9f885-nq6r8" is waiting to start: trying and failing to pull image
```

```bash
NAME="prometheus-operator-admission-webhook"
FILTER="{.spec.template.spec.containers[?(@.name==\"${NAME}\")].image}"

kubectl get deployment/prometheus-operator-admission-webhook \
--namespace=allns-s2qjz2-0-fad82c03 \
--output=jsonpath="${FILTER}"
```

```text
quay.io/prometheus-operator/admission-webhook:52d1e55af
```

Want:

Prometheus Operator support an auth proxy for Service Discovery

For ackalctld to be deployable to Kubernetes with Prometheus Operator, it is necessary to address Enable ScrapeConfig to use (discovery|target) proxies #5966. While I’m familiar with Kubernetes, Kubernetes operators (Ackal uses one built with the Operator SDK) and Prometheus Operator, I’m unfamiliar with developing Prometheus Operator. This post (and subsequent ones) will document some preliminary work on this.

Cloned Prometheus Operator

Branched scrape-config-url-proxy

I’m unsure how to effect these changes and unsure whether documentation exists.

Clearly, I will need to revise the ScrapeConfig CRD to add the proxy_url fields (one proxy_url defines a proxy for the Service Discovery endpoint; the other defines a proxy for the targets themselves), and it would be useful for this to closely mirror the existing Prometheus HTTP Service Discovery configuration, namely <http_sd_config>:

Prometheus Operator `ScrapeConfig`

TL;DR Enable ScrapeConfig to use (discovery|target) proxies

I’ve developed a companion, local daemon (called ackalctld) for Ackal that provides a functionally close version of the service.

One way to deploy ackalctld is to use Kubernetes and it would be convenient if the Prometheus metrics were scrapeable by Prometheus Operator.

In order for this to work, Prometheus Operator needs to be able to scrape Google Cloud Run targets because ackalctld creates Cloud Run services for its health check clients.

Prometheus Exporter for Koyeb

Yet another cloud platform exporter for resource|cost management. This time for Koyeb with Koyeb Exporter.

Deploying resources to cloud platforms generally incurs cost based on the number of resources deployed, how long each resource is deployed and each resource’s cost per unit of time. It is useful to be able to automatically measure, and alert on, all the resources deployed on all the platforms that you’re using, and this is the intent of these exporters.
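The cost model above is a sum, over deployed resources, of count × time deployed × rate. A minimal Go sketch; the `Resource` type, the hourly rates and the sample numbers are hypothetical:

```go
package main

import "fmt"

// Resource is a hypothetical summary of one kind of deployed resource.
type Resource struct {
	Kind          string
	Count         int     // number of instances deployed
	HoursDeployed float64 // how long they have been deployed
	HourlyRate    float64 // cost per instance per hour
}

// EstimatedCost sums count * duration * rate across all resources.
func EstimatedCost(rs []Resource) float64 {
	total := 0.0
	for _, r := range rs {
		total += float64(r.Count) * r.HoursDeployed * r.HourlyRate
	}
	return total
}

func main() {
	rs := []Resource{
		{Kind: "service", Count: 2, HoursDeployed: 10, HourlyRate: 0.5},
		{Kind: "volume", Count: 1, HoursDeployed: 24, HourlyRate: 0.1},
	}
	fmt.Printf("estimated cost: %.2f\n", EstimatedCost(rs))
}
```

The exporters only need to surface the counts; because every term is multiplied by the count, a count that stays above zero is the signal worth alerting on.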

Robusta KRR w/ GMP

I’ve been spending time recently optimizing Ackal’s use of Google Cloud Logging and Cloud Monitoring in posts:

- Filtering metrics w/ Google Managed Prometheus

- Kubernetes metrics, metrics everywhere

- Google Metric Diagnostics and Metric Data Ingested

Yesterday, I read that Robusta has a new open source project Kubernetes Resource Recommendations (KRR) so I took some time to evaluate it.

This post describes the changes I had to make to get KRR working with Google Managed Prometheus (GMP):

Google Metric Diagnostics and Metric Data Ingested

I’ve been on an efficiency drive with Cloud Logging and Cloud Monitoring.

With regard to Cloud Logging, I’m contemplating (!) eliminating almost all log storage. As it is, I’ve buzz-cut log storage with a _Default sink that has comprehensive sets of NOT LOG_ID(X) inclusion and exclusion filters. As I was doing so, I began to wonder why I need to pay to store so much logging. There’s comfort in knowing that everything you may ever need is being logged (at least for 30 days), but there are also the costs that entails. I use logs exclusively for debugging, which got me thinking: couldn’t I just capture logs when I’m debugging (rather than all the time)? I’ve not taken that leap yet but I’m noodling on it.
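Filters built from long runs of `NOT LOG_ID(...)` terms are easier to generate than to hand-edit. A minimal Go sketch that builds such a filter from a list of log IDs using Cloud Logging's `LOG_ID()` function joined with `AND`; the log IDs shown are placeholders:

```go
package main

import (
	"fmt"
	"strings"
)

// excludeLogIDs builds a Cloud Logging filter that matches every entry
// whose log ID is not in ids, e.g. NOT LOG_ID("a") AND NOT LOG_ID("b").
func excludeLogIDs(ids []string) string {
	terms := make([]string, 0, len(ids))
	for _, id := range ids {
		terms = append(terms, fmt.Sprintf("NOT LOG_ID(%q)", id))
	}
	return strings.Join(terms, " AND ")
}

func main() {
	// Placeholder log IDs; substitute the logs you no longer want to store.
	fmt.Println(excludeLogIDs([]string{
		"cloudaudit.googleapis.com/data_access",
		"run.googleapis.com/requests",
	}))
}
```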

Prometheus Exporter for Azure (Container Apps)

I’ve written Prometheus Exporters for various cloud platforms. My motivation for writing these Exporters is that I want a unified mechanism to track my usage of these platforms’ services. It’s easy to deploy a service on a platform and inadvertently leave it running (and running up a bill). The set of exporters is:

- Prometheus Exporter for Azure

- Prometheus Exporter for Fly.io

- Prometheus Exporter for GCP

- Prometheus Exporter for Linode

- Prometheus Exporter for Vultr

This post describes the recently-added Azure Exporter which only provides metrics for Container Apps and Resource Groups.

Kubernetes metrics, metrics everywhere

I’ve been tinkering with ways to “unit-test” my assumptions when using cloud platforms. I recently wrote about two good posts by Google describing how to achieve cost savings with Cloud Monitoring and Cloud Logging:

- How to identify and reduce costs of your Google Cloud observability in Cloud Monitoring

- Cloud Logging pricing for Cloud Admins: How to approach it & save cost

With Cloud Monitoring, I’ve restricted the prometheus.googleapis.com metrics that are being ingested but realized I wanted to track the number of Pods (and Containers) deployed to a GKE cluster.

Filtering metrics w/ Google Managed Prometheus

Google has published two very good blog posts on cost management:

- How to identify and reduce costs of your Google Cloud observability in Cloud Monitoring

- Cloud Logging pricing for Cloud Admins: How to approach it & save cost

This post is about applying these cost reductions to Cloud Monitoring for Ackal.

I’m pleased with Google Cloud Managed Service for Prometheus (hereinafter GMP). I’ve a strong preference for letting service providers run components of Ackal that I consider important but non-differentiating.

Kubernetes Operators

Ackal uses a Kubernetes Operator to orchestrate the lifecycle of its health checks. Ackal’s Operator is written in Go using kubebuilder.

Yesterday, my interest was piqued by a MetalBear blog post Writing a Kubernetes Operator [in Rust]. I spent some time reimplementing one of Ackal’s CRDs (Check) using kube-rs and not only refreshed my Rust knowledge but learned a bunch more about Kubernetes and Operators.

While rummaging around the Kubernetes documentation, I discovered flant’s Shell-operator and spent some time today exploring its potential.

Authenticate PromLens to Google Managed Prometheus

I’m using Google Managed Service for Prometheus (GMP) and liking it.

Some time ago, I tried using PromLens with GMP, but GMP’s Prometheus HTTP API endpoint requires auth and I’ve battled Prometheus’ somewhat limited auth mechanism before (Scraping metrics exposed by Google Cloud Run services that require authentication).

Listening to PromCon EU 2022 videos, I learned that PromLens has been open sourced and contributed to the Prometheus project. Eventually, the functionality of PromLens should be combined into the Prometheus UI.

Prometheus Exporters for fly.io and Vultr

I’ve been on a roll building utilities this week. I developed a Service Health dashboard for my “thing”, a Prometheus Exporter for Fly.io and today, a Prometheus Exporter for Vultr. This is motivated by the fear that I will forget a deployed Cloud resource and incur a horrible bill.

I’ve now written several Prometheus Exporters for cloud platforms:

- Prometheus Exporter for GCP

- Prometheus Exporter for Linode

- Prometheus Exporter for Fly.io

- Prometheus Exporter for Vultr

Each of them monitors resource deployments and produces resource count metrics that can be scraped by Prometheus and alerted on with Alertmanager. I have Alertmanager configured to send notifications to Pushover. Last week, I also wrote an integration that sends Google Cloud Monitoring notifications to Pushover.
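The last hop of a Cloud Monitoring to Pushover integration is a call to Pushover's message API (`POST https://api.pushover.net/1/messages.json` with `token`, `user` and `message` form fields). A minimal Go sketch that builds, but does not send, such a request; how the Cloud Monitoring notification arrives (e.g. via a webhook) is not shown, and the credentials are placeholders:

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"strings"
)

// pushoverURL is Pushover's message API endpoint.
const pushoverURL = "https://api.pushover.net/1/messages.json"

// newPushoverRequest builds (but does not send) a Pushover notification request.
func newPushoverRequest(token, user, title, message string) (*http.Request, error) {
	form := url.Values{}
	form.Set("token", token)
	form.Set("user", user)
	form.Set("title", title)
	form.Set("message", message)
	req, err := http.NewRequest(http.MethodPost, pushoverURL, strings.NewReader(form.Encode()))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	return req, nil
}

func main() {
	// Placeholder credentials; real values come from your Pushover account.
	req, err := newPushoverRequest("APP_TOKEN", "USER_KEY", "GCP", "1 Cloud Run service deployed")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL)
	// To send it: resp, err := http.DefaultClient.Do(req)
}
```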

Prometheus HTTP Service Discovery of Cloud Run services

Some time ago, I wrote about using Prometheus Service Discovery w/ Consul for Cloud Run and also Scraping metrics exposed by Google Cloud Run services that require authentication. Both solutions remain viable but they didn’t address another use case for Prometheus and Cloud Run services that I have with a “thing” that I’ve been building.

In this scenario, I want to:

- Configure Prometheus to scrape Cloud Run service metrics

- Discover Cloud Run services dynamically

- Authenticate to Cloud Run using Firebase Auth ID tokens

These requirements and – one other – present several challenges:

Scraping metrics exposed by Google Cloud Run services that require authentication

I’ve written a solution (gcp-oidc-token-proxy) that can be used in conjunction with Prometheus OAuth2 to authenticate requests so that Prometheus can scrape metrics exposed by e.g. Cloud Run services that require authentication. The solution resulted from my question on Stack Overflow.

Problem #1: Endpoint requires authentication

Given a Cloud Run service URL for which:

ENDPOINT="my-server-blahblah-wl.a.run.app"

# Returns 200 when authenticated w/ an ID token
TOKEN="$(gcloud auth print-identity-token)"
curl \
  --silent \
  --request GET \
  --header "Authorization: Bearer ${TOKEN}" \
  --write-out "%{response_code}" \
  --output /dev/null \
  https://${ENDPOINT}/metrics

# Returns 403 otherwise
curl \
  --silent \
  --request GET \
  --write-out "%{response_code}" \
  --output /dev/null \
  https://${ENDPOINT}/metrics

Problem #2: Prometheus OAuth2 configuration is constrained

Consul discovers Google Cloud Run

I’ve written a basic discoverer of Google Cloud Run services. This is for a project and it extends work done in some previous posts to Multiplex gRPC and Prometheus with Cloud Run and to use Consul for Prometheus service discovery.

This solution:

- Accepts a set of Google Cloud Platform (GCP) projects

- Trawls them for Cloud Run services

- Assumes that the services expose Prometheus metrics on :443/metrics

- Relabels the services

- Surfaces any discovered Cloud Run services’ metrics in Prometheus
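One way to sketch the trawl-then-register step: turn each Cloud Run service URL into a Consul agent service registration (`PUT /v1/agent/service/register`), which Prometheus' `consul_sd_config` can then pick up. A minimal, standard-library-only Go sketch; the ID scheme, the `cloud-run` tag and the `project` meta key are my own assumptions, and listing Cloud Run services across projects is not shown:

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/url"
)

// Registration mirrors a subset of Consul's agent service registration payload.
type Registration struct {
	ID      string            `json:"ID"`
	Name    string            `json:"Name"`
	Address string            `json:"Address"`
	Port    int               `json:"Port"`
	Tags    []string          `json:"Tags,omitempty"`
	Meta    map[string]string `json:"Meta,omitempty"`
}

// registrationFor derives a registration from a Cloud Run service URL,
// assuming metrics are served over HTTPS on :443/metrics.
func registrationFor(project, serviceURL string) (Registration, error) {
	u, err := url.Parse(serviceURL)
	if err != nil {
		return Registration{}, err
	}
	host := u.Hostname()
	return Registration{
		ID:      project + "-" + host,
		Name:    host,
		Address: host,
		Port:    443,
		Tags:    []string{"cloud-run"},
		Meta:    map[string]string{"project": project},
	}, nil
}

// newRegisterRequest builds (but does not send) the PUT to a Consul agent.
func newRegisterRequest(consul string, reg Registration) (*http.Request, error) {
	body, err := json.Marshal(reg)
	if err != nil {
		return nil, err
	}
	req, err := http.NewRequest(http.MethodPut, consul+"/v1/agent/service/register", bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	reg, err := registrationFor("my-project", "https://my-service-abc123-uc.a.run.app")
	if err != nil {
		panic(err)
	}
	req, err := newRegisterRequest("http://localhost:8500", reg)
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL, reg.Address, reg.Port)
}
```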

You’ll need Docker and Docker Compose.

Multiplexing gRPC and HTTP (Prometheus) endpoints with Cloud Run

Google Cloud Run is useful, but each service is limited to exposing a single port. This caused me problems with a gRPC service that also serves (non-gRPC) Prometheus metrics because, customarily, you would serve gRPC on one port and the Prometheus metrics on another.

Fortunately, cmux provides a solution by providing a mechanism that multiplexes both services (gRPC and HTTP) on a single port!

TL;DR See the cmux Limitations and use:

grpcl := m.MatchWithWriters(cmux.HTTP2MatchHeaderFieldSendSettings("content-type", "application/grpc"))

Extending the example from the cmux repo:

Prometheus Service Discovery w/ Consul for Cloud Run

I’m working on a project that will programmatically create Google Cloud Run services and I want to be able to dynamically discover these services using Prometheus.

This is one solution.

NOTE Google Cloud Run is the service I’m using, but the principle described herein applies to any runtime service that you’d wish to use.

Why is this challenging? IIUC, it’s primarily because Prometheus has a limited set of plugins for service discovery (see the sections that include _sd_ in the Prometheus Configuration documentation). Unfortunately, Cloud Run is not explicitly supported. The alternative appears to be file-based discovery, but this seems ‘challenging’; it requires, for example, writing and maintaining target files somewhere that Prometheus can read them.

Prometheus VPA Recommendations

Phew!

I was interested in learning how to Manage Resources for Containers. On the way, I learned and discovered:

- kubectl top

- Vertical Pod Autoscaler

- A (valuable) digression through PodMonitor

- kube-state-metrics

- kubectl patch

- Created a Graph

- References

Kubernetes Resources

Visual Studio Code has begun to bug me (reasonably) to add resources to Kubernetes manifests.

E.g.:

resources:
  limits:
    cpu: "1"
    memory: "512Mi"

I’ve been spending time with Deis Labs’ Akri and decided to determine whether Akri’s primary resources (Agent, Controller) and some of my creations (HTTP Device and Discovery) were being suitably constrained.

Deploying a Rust HTTP server to DigitalOcean App Platform

DigitalOcean launched an App Platform with many Supported Languages and Frameworks. I used Golang first, then wondered how to use languages that aren’t natively supported, e.g. Rust.

The good news is that Docker is a supported framework and so, you can run pretty much anything.

Repo: https://github.com/DazWilkin/do-apps-rust

Rust

I’m a Rust noob. I’m always receptive to feedback on improvements to the code. I looked to mirror the Golang example. I’m using rocket and rocket-prometheus for the first time:

You will want to install Rust nightly (as Rocket has a dependency that requires it) and then you can override the default toolchain for the current project using:

Google Home Exporter

I’m obsessing over Prometheus exporters. First came Linode Exporter, then GCP Exporter and, on Sunday, I stumbled upon a reverse-engineered API for Google Home devices and so wrote a very basic Google Home SDK and a similarly basic Google Home Exporter:

The SDK only implements /setup/eureka_info and then only some of the returned properties. There’s not a lot of metric-like data to use besides SignalLevel (signal_level) and NoiseLevel (noise_level). I’m not clear on the meaning of some of the properties.

Google Cloud Platform (GCP) Exporter

Earlier this week I discussed a Linode Prometheus Exporter.

I added metrics for Digital Ocean’s Managed Kubernetes service to @metalmatze’s Digital Ocean Exporter.

This left metrics for Google Cloud Platform (GCP) which has, for many years, been my primary cloud platform. So, today I wrote Prometheus Exporter for Google Cloud Platform.

All 3 of these exporters follow the template laid down by @metalmatze and, because each of these services has a well-written Golang SDK, it’s straightforward to implement an exporter for each of them.

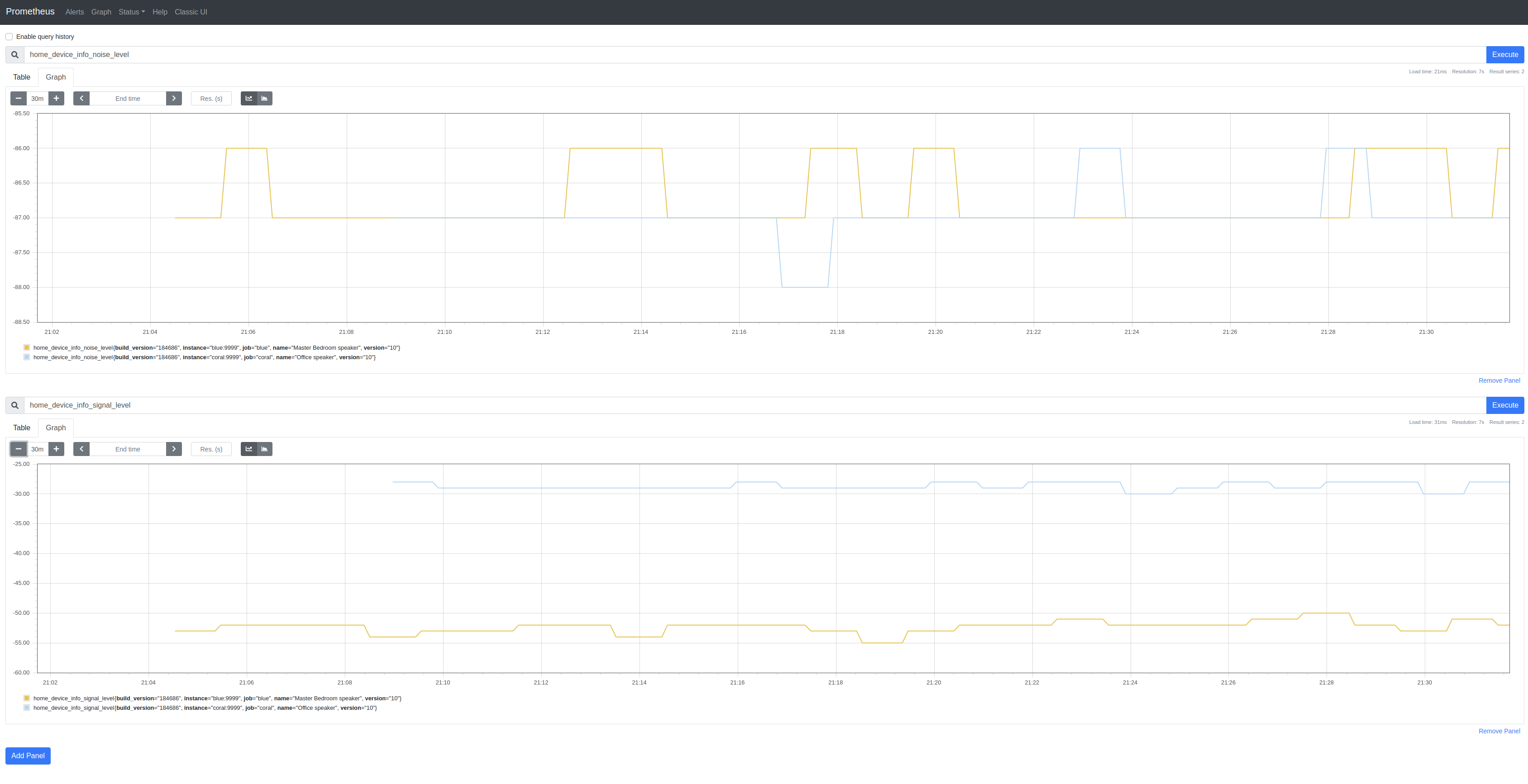

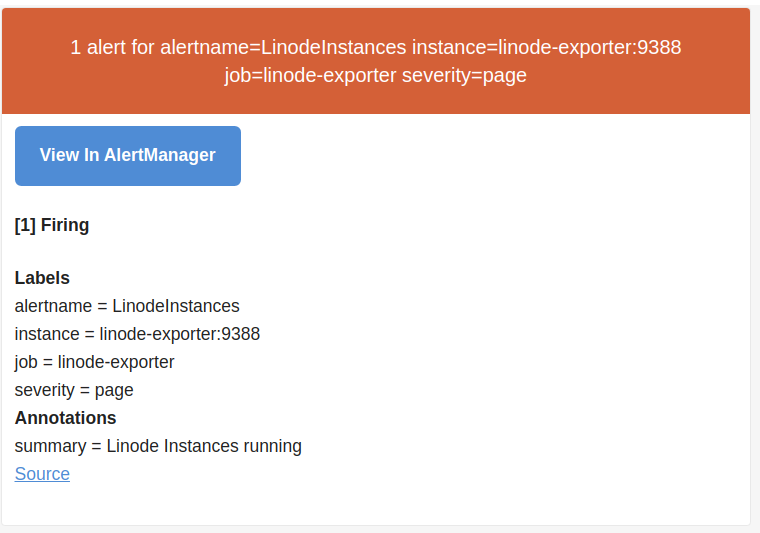

Prometheus AlertManager

Yesterday, I discussed a Linode Prometheus Exporter and teased the use of Prometheus AlertManager.

Success:

Configure

The process is straightforward although I found the Prometheus (config) documentation slightly unwieldy to navigate :-(

The overall process is documented.

Here are the steps I took:

Configure Prometheus

Added the following to prometheus.yml:

rule_files:
  - "/etc/alertmanager/rules/linode.yml"

alerting:
  alertmanagers:
    - scheme: http
      static_configs:
        - targets:
            - "alertmanager:9093"

Rules must be defined in separate rules files. See below for the content of linode.yml and an explanation.

Linode Prometheus Exporter

I enjoy using Prometheus and have toyed around with it for some time, particularly in combination with Kubernetes. I signed up with Linode [referral], compelled by the addition of a managed Kubernetes service called Linode Kubernetes Engine (LKE). I have an anxiety that I’ll inadvertently leave resources running (unused) on a cloud platform. Instead of refreshing the relevant billing page, it struck me that Prometheus may (not yet proven) help.

The hypothesis is that a combination of a cloud-specific Prometheus exporter reporting aggregate uses of e.g. Linodes (instances), NodeBalancers, Kubernetes clusters etc., could form the basis of an alert mechanism using Prometheus’ alerting.

Tag: Jsonnet

Deploying to K3s

A simple deployment of cAdvisor to K3s to confirm the ability to expose Ingresses using the Tailscale Kubernetes Operator (TLS) and, since it’s already installed with K3s, Traefik (non-TLS).

local image = "gcr.io/cadvisor/cadvisor:latest";
local labels = {
  app: "cadvisor",
};
local name = std.extVar("NAME");
local node_ip = std.extVar("NODE_IP");
local port = 8080;

local deployment = {
  apiVersion: "apps/v1",
  kind: "Deployment",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    replicas: 1,
    selector: {
      matchLabels: labels,
    },
    template: {
      metadata: {
        labels: labels,
      },
      spec: {
        containers: [
          {
            name: name,
            image: image,
            ports: [
              {
                name: "http",
                containerPort: port,
                protocol: "TCP",
              },
            ],
            resources: {
              limits: {
                memory: "500Mi",
              },
              requests: {
                cpu: "250m",
                memory: "250Mi",
              },
            },
            securityContext: {
              allowPrivilegeEscalation: false,
              privileged: false,
              readOnlyRootFilesystem: true,
              runAsGroup: 1000,
              runAsNonRoot: true,
              runAsUser: 1000,
            },
          },
        ],
      },
    },
  },
};

local ingresses = [
  {
    // Tailscale Ingress TLS (non-public)
    apiVersion: "networking.k8s.io/v1",
    kind: "Ingress",
    metadata: {
      name: "tailscale",
      labels: labels,
    },
    spec: {
      ingressClassName: "tailscale",
      defaultBackend: {
        service: {
          name: name,
          port: {
            number: port,
          },
        },
      },
      tls: [
        {
          hosts: [
            name,
          ],
        },
      ],
    },
  },
  {
    // Traefik Ingress non-TLS (non-public)
    apiVersion: "networking.k8s.io/v1",
    kind: "Ingress",
    metadata: {
      name: "traefik",
      labels: labels,
    },
    spec: {
      ingressClassName: "traefik",
      rules: [
        {
          host: std.format(
            "%(name)s.%(node_ip)s.nip.io", {
              name: name,
              node_ip: node_ip,
            },
          ),
          http: {
            paths: [
              {
                path: "/",
                pathType: "Prefix",
                backend: {
                  service: {
                    name: name,
                    port: {
                      number: port,
                    },
                  },
                },
              },
            ],
          },
        },
      ],
    },
  },
];

local prometheusrule = {
  // Alternatively parameterize the duration
  local duration = 10,
  apiVersion: "monitoring.coreos.com/v1",
  kind: "PrometheusRule",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    groups: [
      {
        name: name,
        rules: [
          {
            alert: "TestcAdvisorDown",
            annotations: {
              summary: "Test cAdvisor instance is down or not scraping metrics.",
              description: std.format(
                "The cAdvisor instance {{ $labels.instance }} has not been scraping metrics for more than %(duration)s minutes.", {
                  duration: duration,
                },
              ),
            },
            expr: "absent(cadvisor_version_info{namespace=\"k3s-test\"}) or (cadvisor_version_info{namespace=\"k3s-test\"}!=1)",
            "for": std.format(
              "%(duration)sm", {
                duration: duration,
              },
            ),
            labels: labels {
              severity: "warning",
            },
          },
        ],
      },
    ],
  },
};

local service = {
  apiVersion: "v1",
  kind: "Service",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    selector: labels,
    ports: [
      {
        name: "http",
        port: port,
        targetPort: port,
        protocol: "TCP",
      },
    ],
  },
};

local serviceaccount = {
  apiVersion: "v1",
  kind: "ServiceAccount",
  metadata: {
    name: name,
    labels: labels,
  },
};

local servicemonitor = {
  apiVersion: "monitoring.coreos.com/v1",
  kind: "ServiceMonitor",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    selector: {
      matchLabels: labels,
    },
    endpoints: [
      {
        interval: "120s",
        path: "/metrics",
        port: "http",
        scrapeTimeout: "30s",
      },
    ],
  },
};

// Output
{
  apiVersion: "v1",
  kind: "List",
  items: [
    deployment,
    prometheusrule,
    service,
    serviceaccount,
    servicemonitor,
  ] + ingresses,
}

I like to have a script that applies Jsonnet to the file:

Gemini CLI (3/3)

Update 2025-07-08

Gemini CLI supports HTTP-based MCP server integration

So, it’s possible to replace the .gemini/settings.json included in the original post with:

{
  "theme": "Default",
  "mcpServers": {
    "ackal-mcp-server": {
      "httpUrl": "http://localhost:7777/mcp",
      "timeout": 5000
    },
    "prometheus-mcp-server": {
      "httpUrl": "https://prometheus.{tailnet}/mcp",
      "timeout": 5000
    }
  },
  "selectedAuthType": "gemini-api-key"
}

This approach also permits adding headers, e.g. an Authorization header.

Original

Okay, so not “Gemini Code Assist” but sufficiently similar that I think it warrants the “3/3” appellation.

Configuring Envoy to proxy Google Cloud Run v2

I’m building an emulator for Cloud Run. As I considered the solution, I assumed (more later) that I could implement Google’s gRPC interface for Cloud Run and use Envoy to proxy HTTP/REST requests to the gRPC service using Envoy’s gRPC-JSON transcoder.

Google calls this process Transcoding HTTP/JSON to gRPC, which I think is a better description.
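To illustrate what transcoding does for `CreateService`, here is a hand-rolled Go sketch of the mapping, assuming the method's HTTP annotation is `post: "/v2/{parent=projects/*/locations/*}/services"` with `service_id` supplied as a `serviceId` query parameter (worth checking against `service.proto`). Envoy's transcoder does this generically from the proto descriptors; the struct here is a hypothetical stand-in for the generated request type:

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// CreateServiceRequest is a hypothetical, trimmed-down mirror of the
// Cloud Run v2 request message (the real one also carries the Service body).
type CreateServiceRequest struct {
	Parent    string // projects/{project}/locations/{location}
	ServiceID string
}

// transcode maps POST /v2/projects/{p}/locations/{l}/services?serviceId=...
// onto the request's fields, assuming the template
// /v2/{parent=projects/*/locations/*}/services.
func transcode(rawURL string) (CreateServiceRequest, error) {
	u, err := url.Parse(rawURL)
	if err != nil {
		return CreateServiceRequest{}, err
	}
	parts := strings.Split(strings.Trim(u.Path, "/"), "/")
	// Expect: v2 / projects / {p} / locations / {l} / services
	if len(parts) != 6 || parts[0] != "v2" || parts[1] != "projects" || parts[2] == "" ||
		parts[3] != "locations" || parts[4] == "" || parts[5] != "services" {
		return CreateServiceRequest{}, fmt.Errorf("path %q does not match template", u.Path)
	}
	return CreateServiceRequest{
		Parent:    strings.Join(parts[1:5], "/"),
		ServiceID: u.Query().Get("serviceId"),
	}, nil
}

func main() {
	req, err := transcode("http://localhost:8080/v2/projects/my-project/locations/us-central1/services?serviceId=hello")
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", req)
}
```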

Google’s Cloud Run v2 (v1 is no longer published to the googleapis repo) service.proto includes the following Services definition for CreateService:

Migrating Prometheus Exporters to Kubernetes

I have built Prometheus Exporters for multiple cloud platforms to track resources deployed across clouds:

- Prometheus Exporter for Azure

- Prometheus Exporter for crt.sh

- Prometheus Exporter for Fly.io

- Prometheus Exporter for GoatCounter

- Prometheus Exporter for Google Analytics

- Prometheus Exporter for Google Cloud

- Prometheus Exporter for Koyeb

- Prometheus Exporter for Linode

- Prometheus Exporter for PorkBun

- Prometheus Exporter for updown.io

- Prometheus Exporter for Vultr

Additionally, I’ve written two status service exporters:

These exporters are all derived from an exemplar DigitalOcean Exporter written by metalmatze for which I maintain a fork.

Tag: K3s

Deploying to K3s

A simple deployment of cAdvisor to K3s to confirm the ability to expose Ingresses using the Tailscale Kubernetes Operator (TLS) and, since it’s already installed with K3s, Traefik (non-TLS).

I like to have a script that applies Jsonnet to the file:

Migrating to K3s

I’m migrating from MicroK8s to K3s. This post explains the installation and (re)configuration steps that I took including verification steps:

- Install K3s

- Use standalone kubectl and update ${KUBECONFIG} (~/.kube/config)

- Install System Upgrade Controller

- Install the kube-prometheus stack (including the Prometheus Operator)

- Disable Grafana and Node Exporter

- Tweak the default scrapeInterval

- Install Tailscale Operator

- Create Prometheus and Alertmanager Ingresses

- Patch Prometheus and Alertmanager externalUrls

- Tweak the Prometheus resource to allow any serviceMonitors

- Tweak the Prometheus resource to allow any prometheusRules

MicroK8s

Why abandon MicroK8s? I’ve been using MicroK8s for several years without issue but, after upgrading to Ubuntu 25.10, which includes a Rust replacement for sudo that doesn’t support sudo -E, I created a problem for myself with MicroK8s and have been unable to restore a working Kubernetes cluster. I took the opportunity to reassess my distribution; I’d long been thinking of switching to K3s.

Tag: Kube-Prometheus

Deploying to K3s

A simple deployment of cAdvisor to K3s to confirm the ability to expose Ingresses using the Tailscale Kubernetes Operator (TLS) and, since it’s already installed with K3s, Traefik (non-TLS).

I like to have a script that applies Jsonnet to the file:

Migrating to K3s

I’m migrating from MicroK8s to K3s. This post explains the installation and (re)configuration steps that I took including verification steps:

- Install K3s

- Use standalone kubectl and update ${KUBECONFIG} (~/.kube/config)

- Install System Upgrade Controller

- Install the kube-prometheus stack (including the Prometheus Operator)

- Disable Grafana and Node Exporter

- Tweak the default scrapeInterval

- Install Tailscale Operator

- Create Prometheus and Alertmanager Ingresses

- Patch Prometheus and Alertmanager externalUrls

- Tweak the Prometheus resource to allow any serviceMonitors

- Tweak the Prometheus resource to allow any prometheusRules

MicroK8s

Why abandon MicroK8s? I’ve been using MicroK8s for several years without issue but, after upgrading to Ubuntu 25.10, which includes a Rust replacement for sudo that doesn’t support sudo -E, I created a problem for myself with MicroK8s and have been unable to restore a working Kubernetes cluster. I took the opportunity to reassess my distribution; I’d long been thinking of switching to K3s.

Tailscale client metrics service discovery to Prometheus

I couldn’t summarize this in a title (even with an LLM’s help):

I wanted to:

- Run a Tailscale service discovery agent

- On a Tailscale node outside of the Kubernetes cluster

- Using Podman Quadlet

- Accessing it from the Kubernetes cluster using Tailscale’s egress proxy

- Accessing the proxy with a kube-prometheus ScrapeConfig

- So that Prometheus would scrape the container for Tailscale client metrics

Long-winded? Yes, but I had an underlying need to run the Tailscale Service Discovery agent remotely, and this configuration helped me achieve that.

MicroK8s operability add-on

I spent time today yak shaving, which resulted in an unplanned migration from the MicroK8s ‘prometheus’ add-on to the new and not fully documented ‘observability’ add-on:

sudo microk8s.enable prometheus
Infer repository core for addon prometheus
DEPRECATION WARNING: 'prometheus' is deprecated and will soon be removed. Please use 'observability' instead.
...

The reason for the name change is unclear.

It’s also unclear whether the primary components that are installed differ (I’d thought Grafana wasn’t included in ‘prometheus’); (Grafana) Loki and (Grafana) Tempo definitely weren’t included, and I don’t want them either.

Tag: Kubernetes

Deploying to K3s

A simple deployment of cAdvisor to K3s to confirm the ability to expose Ingresses using the Tailscale Kubernetes Operator (TLS) and, since it’s already installed with K3s, Traefik (non-TLS).

local image = "gcr.io/cadvisor/cadvisor:latest";
local labels = {
  app: "cadvisor",
};
local name = std.extVar("NAME");
local node_ip = std.extVar("NODE_IP");
local port = 8080;

local deployment = {
  apiVersion: "apps/v1",
  kind: "Deployment",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    replicas: 1,
    selector: {
      matchLabels: labels,
    },
    template: {
      metadata: {
        labels: labels,
      },
      spec: {
        containers: [
          {
            name: name,
            image: image,
            ports: [
              {
                name: "http",
                containerPort: port,
                protocol: "TCP",
              },
            ],
            resources: {
              limits: {
                memory: "500Mi",
              },
              requests: {
                cpu: "250m",
                memory: "250Mi",
              },
            },
            securityContext: {
              allowPrivilegeEscalation: false,
              privileged: false,
              readOnlyRootFilesystem: true,
              runAsGroup: 1000,
              runAsNonRoot: true,
              runAsUser: 1000,
            },
          },
        ],
      },
    },
  },
};

local ingresses = [
  {
    // Tailscale Ingress TLS (non-public)
    apiVersion: "networking.k8s.io/v1",
    kind: "Ingress",
    metadata: {
      name: "tailscale",
      labels: labels,
    },
    spec: {
      ingressClassName: "tailscale",
      defaultBackend: {
        service: {
          name: name,
          port: {
            number: port,
          },
        },
      },
      tls: [
        {
          hosts: [
            name,
          ],
        },
      ],
    },
  },
  {
    // Traefik Ingress non-TLS (non-public)
    apiVersion: "networking.k8s.io/v1",
    kind: "Ingress",
    metadata: {
      name: "traefik",
      labels: labels,
    },
    spec: {
      ingressClassName: "traefik",
      rules: [
        {
          host: std.format("%(name)s.%(node_ip)s.nip.io", {
            name: name,
            node_ip: node_ip,
          }),
          http: {
            paths: [
              {
                path: "/",
                pathType: "Prefix",
                backend: {
                  service: {
                    name: name,
                    port: {
                      number: port,
                    },
                  },
                },
              },
            ],
          },
        },
      ],
    },
  },
];

local prometheusrule = {
  // Alternatively, parameterize the duration
  local duration = 10,
  apiVersion: "monitoring.coreos.com/v1",
  kind: "PrometheusRule",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    groups: [
      {
        name: name,
        rules: [
          {
            alert: "TestcAdvisorDown",
            annotations: {
              summary: "Test cAdvisor instance is down or not scraping metrics.",
              description: std.format(
                "The cAdvisor instance {{ $labels.instance }} has not been scraping metrics for more than %(duration)s minutes.",
                { duration: duration }
              ),
            },
            expr: "absent(cadvisor_version_info{namespace=\"k3s-test\"}) or (cadvisor_version_info{namespace=\"k3s-test\"}!=1)",
            "for": std.format("%(duration)sm", { duration: duration }),
            labels: labels {
              severity: "warning",
            },
          },
        ],
      },
    ],
  },
};

local service = {
  apiVersion: "v1",
  kind: "Service",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    selector: labels,
    ports: [
      {
        name: "http",
        port: port,
        targetPort: port,
        protocol: "TCP",
      },
    ],
  },
};

local serviceaccount = {
  apiVersion: "v1",
  kind: "ServiceAccount",
  metadata: {
    name: name,
    labels: labels,
  },
};

local servicemonitor = {
  apiVersion: "monitoring.coreos.com/v1",
  kind: "ServiceMonitor",
  metadata: {
    name: name,
    labels: labels,
  },
  spec: {
    selector: {
      matchLabels: labels,
    },
    endpoints: [
      {
        interval: "120s",
        path: "/metrics",
        port: "http",
        scrapeTimeout: "30s",
      },
    ],
  },
};

// Output
{
  apiVersion: "v1",
  kind: "List",
  items: [
    deployment,
    prometheusrule,
    service,
    serviceaccount,
    servicemonitor,
  ] + ingresses,
}

I like to have a script that applies Jsonnet to the file:
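As a hypothetical stand-in for such a script, here is a Rust sketch. It evaluates the Jsonnet with the jsonnet CLI, passes the NAME and NODE_IP external variables that the file reads through std.extVar, and pipes the resulting List into kubectl apply. This is not the script referred to above. It assumes that jsonnet and kubectl are on PATH. The file name, namespace and function names are illustrative.

```rust
use std::io::{Error, ErrorKind, Write};
use std::process::{Command, Stdio};

/// Arguments for `jsonnet`: each --ext-str satisfies one std.extVar(...) in the file.
pub fn jsonnet_args(file: &str, name: &str, node_ip: &str) -> Vec<String> {
    vec![
        "--ext-str".to_string(),
        format!("NAME={}", name),
        "--ext-str".to_string(),
        format!("NODE_IP={}", node_ip),
        file.to_string(),
    ]
}

/// Arguments for `kubectl apply`, reading the rendered manifest from stdin.
pub fn kubectl_args(namespace: &str) -> Vec<String> {
    vec![
        "apply".to_string(),
        format!("--namespace={}", namespace),
        "--filename=-".to_string(),
    ]
}

/// Render the Jsonnet and pipe the output into `kubectl apply`.
pub fn render_and_apply(file: &str, name: &str, node_ip: &str, namespace: &str) -> std::io::Result<()> {
    let rendered = Command::new("jsonnet")
        .args(jsonnet_args(file, name, node_ip))
        .output()?;
    if !rendered.status.success() {
        let stderr = String::from_utf8_lossy(&rendered.stderr).into_owned();
        return Err(Error::new(ErrorKind::Other, stderr));
    }
    let mut kubectl = Command::new("kubectl")
        .args(kubectl_args(namespace))
        .stdin(Stdio::piped())
        .spawn()?;
    // The ChildStdin temporary is dropped at the end of this statement, closing the pipe.
    kubectl.stdin.take().expect("stdin is piped").write_all(&rendered.stdout)?;
    let status = kubectl.wait()?;
    if !status.success() {
        return Err(Error::new(ErrorKind::Other, format!("kubectl apply failed: {}", status)));
    }
    Ok(())
}
```

For example, render_and_apply("cadvisor.jsonnet", "cadvisor", "192.168.1.10", "k3s-test") would give the Traefik Ingress a host of cadvisor.192.168.1.10.nip.io. The k3s-test namespace matches the one hard-coded in the PrometheusRule expression.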

Migrating to K3s

I’m migrating from MicroK8s to K3s. This post explains the installation and (re)configuration steps I took, including verification steps:

- Install K3s

- Use standalone kubectl and update ${KUBECONFIG} (~/.kube/config)

- Install System Upgrade Controller

- Install kube-prometheus stack (including the Prometheus Operator)

- Disable Grafana and Node Exporter

- Tweak default scrapeInterval

- Install Tailscale Operator

- Create Prometheus and Alertmanager Ingresses

- Patch Prometheus and Alertmanager externalUrls

- Tweak Prometheus resource to allow any serviceMonitors

- Tweak Prometheus resource to allow any prometheusRules
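The last three steps amount to patching the Prometheus and Alertmanager custom resources. The following Rust sketch builds those patches as RFC 6902 JSON Patch documents for kubectl patch --type=json. It sets serviceMonitorSelector, serviceMonitorNamespaceSelector, ruleSelector and ruleNamespaceSelector to empty selectors, which the Prometheus Operator treats as matching everything. It also sets externalUrl. The add operation is used because it replaces an existing member or creates a missing one. A JSON merge patch of {} would be merged into an existing matchLabels selector rather than replace it. The function names, resource name and namespace are assumptions.

```rust
/// Selector fields on the Prometheus CR that restrict what gets discovered.
pub const SELECTOR_PATHS: [&str; 4] = [
    "/spec/serviceMonitorSelector",
    "/spec/serviceMonitorNamespaceSelector",
    "/spec/ruleSelector",
    "/spec/ruleNamespaceSelector",
];

/// JSON Patch setting every selector to {} (an empty selector matches all objects).
pub fn select_all_patch() -> String {
    let ops: Vec<String> = SELECTOR_PATHS
        .iter()
        .map(|p| format!(r#"{{"op":"add","path":"{}","value":{{}}}}"#, p))
        .collect();
    format!("[{}]", ops.join(","))
}

/// JSON Patch setting spec.externalUrl (present on both Prometheus and Alertmanager CRs).
pub fn external_url_patch(url: &str) -> String {
    format!(r#"[{{"op":"add","path":"/spec/externalUrl","value":"{}"}}]"#, url)
}

/// Arguments for `kubectl patch <kind>/<name> --type=json --patch=<patch>`.
pub fn kubectl_patch_args(kind: &str, name: &str, namespace: &str, patch: &str) -> Vec<String> {
    vec![
        "patch".to_string(),
        format!("{}/{}", kind, name),
        format!("--namespace={}", namespace),
        "--type=json".to_string(),
        format!("--patch={}", patch),
    ]
}
```

For example, kubectl_patch_args("prometheus", "kube-prometheus-stack-prometheus", "monitoring", &select_all_patch()) yields the arguments for one hypothetical release name and namespace. Substitute whatever kubectl get prometheus --all-namespaces reports.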

MicroK8s

Why abandon MicroK8s? I’ve been using MicroK8s for several years without issue, but after upgrading to Ubuntu 25.10, which includes a Rust replacement for sudo (one that doesn’t support sudo -E), I created a problem for myself with MicroK8s and have been unable to restore a working Kubernetes cluster. I took the opportunity to reassess my distribution; I’d long thought about switching to K3s.

Tailscale client metrics service discovery to Prometheus

I couldn’t summarize this in a title (even with an LLM’s help):

I wanted to:

- Run a Tailscale service discovery agent

- On a Tailscale node outside the Kubernetes cluster

- Using Podman Quadlet

- Accessing it from the Kubernetes cluster using Tailscale’s egress proxy

- Accessing the proxy with a kube-prometheus ScrapeConfig

- So that Prometheus would scrape the container for Tailscale client metrics

Long-winded? Yes, but I had an underlying need to run Tailscale service discovery remotely, and this configuration helped me achieve that.

Migrating Prometheus Exporters to Kubernetes

I have built Prometheus Exporters for multiple cloud platforms to track resources deployed across clouds:

- Prometheus Exporter for Azure

- Prometheus Exporter for crt.sh

- Prometheus Exporter for Fly.io

- Prometheus Exporter for GoatCounter

- Prometheus Exporter for Google Analytics

- Prometheus Exporter for Google Cloud

- Prometheus Exporter for Koyeb

- Prometheus Exporter for Linode

- Prometheus Exporter for PorkBun

- Prometheus Exporter for updown.io

- Prometheus Exporter for Vultr

Additionally, I’ve written two status service exporters:

These exporters are all derived from an exemplar, the DigitalOcean Exporter written by metalmatze, of which I maintain a fork.
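Each of these exporters ultimately serves its metrics in the Prometheus text exposition format. The exporters themselves follow metalmatze’s DigitalOcean Exporter, which is written in Go. The following Rust sketch only illustrates that output format for a hypothetical gauge. It writes a # HELP line and a # TYPE line, then one name{labels} value line per sample, escaping backslash, double quote and newline in label values. The Sample type and the metric name used below are invented for illustration.

```rust
/// One sample: label pairs plus a value (hypothetical shape).
pub struct Sample {
    pub labels: Vec<(String, String)>,
    pub value: f64,
}

/// Label values must escape backslash, double quote and newline.
fn escape_label_value(v: &str) -> String {
    v.replace('\\', r"\\").replace('"', "\\\"").replace('\n', r"\n")
}

/// Render one gauge metric family in the Prometheus text exposition format.
pub fn render_gauge(name: &str, help: &str, samples: &[Sample]) -> String {
    // HELP text escapes only backslash and newline.
    let help = help.replace('\\', r"\\").replace('\n', r"\n");
    let mut out = format!("# HELP {} {}\n# TYPE {} gauge\n", name, help, name);
    for s in samples {
        let labels: Vec<String> = s
            .labels
            .iter()
            .map(|(k, v)| format!(r#"{}="{}""#, k, escape_label_value(v)))
            .collect();
        if labels.is_empty() {
            out.push_str(&format!("{} {}\n", name, s.value));
        } else {
            out.push_str(&format!("{}{{{}}} {}\n", name, labels.join(","), s.value));
        }
    }
    out
}
```

A gauge suits "how many resources exist right now", because the count can go down as well as up.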

Using Rust to generate Kubernetes CRD

For the first time, I chose Rust to solve a problem. Until now, I’d used Rust to learn the language and to rewrite existing code. But this problem led me to Rust because my other tools wouldn’t cut it.

The question was how to represent oneOf fields in Kubernetes Custom Resource Definitions (CRDs).

CRDs use OpenAPI v3 schema, and the resulting YAML can be challenging to grok:

apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: deploymentconfigs.example.com
spec:
  group: example.com
  names:
    categories: []
    kind: DeploymentConfig
    plural: deploymentconfigs
    shortNames: []
    singular: deploymentconfig
  scope: Namespaced
  versions:
  - additionalPrinterColumns: []
    name: v1alpha1
    schema:
      openAPIV3Schema:
        description: An example schema
        properties:
          spec:
            properties:
              deployment_strategy:
                oneOf:
                - required:
                  - rolling_update
                - required:
                  - recreate
                properties:
                  recreate:
                    properties:
                      something:
                        format: uint16
                        minimum: 0.0
                        type: integer
                    required:
                    - something
                    type: object
                  rolling_update:
                    properties:
                      max_surge:
                        format: uint16
                        minimum: 0.0
                        type: integer
                      max_unavailable:
                        format: uint16
                        minimum: 0.0
                        type: integer
                    required:
                    - max_surge
                    - max_unavailable
                    type: object
                type: object
            required:
            - deployment_strategy
            type: object
        required:
        - spec
        title: DeploymentConfig
        type: object
    served: true
    storage: true
    subresources: {}
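The oneOf above requires deployment_strategy to contain exactly one of rolling_update or recreate. In Rust, that constraint maps naturally onto an enum. The following std-only sketch does not use kube-rs or schemars and is not the code that generated this CRD. It models the two strategies as an enum and mimics the oneOf check: an object is valid only when exactly one branch's required key is present, so supplying both keys, or neither, is rejected. The names DeploymentStrategy, key and one_of_branch are hypothetical.

```rust
/// Rust-side model of deployment_strategy: an enum guarantees exactly one variant.
#[derive(Debug, PartialEq)]
pub enum DeploymentStrategy {
    RollingUpdate { max_surge: u16, max_unavailable: u16 },
    Recreate { something: u16 },
}

impl DeploymentStrategy {
    /// The single key this variant occupies under spec.deployment_strategy.
    pub fn key(&self) -> &'static str {
        match self {
            DeploymentStrategy::RollingUpdate { .. } => "rolling_update",
            DeploymentStrategy::Recreate { .. } => "recreate",
        }
    }
}

/// Mimic oneOf with required-only branches: exactly one branch key must be present.
pub fn one_of_branch<'a>(present_keys: &[&str], branches: &[&'a str]) -> Result<&'a str, String> {
    let matched: Vec<&'a str> = branches
        .iter()
        .copied()
        .filter(|b| present_keys.iter().any(|k| k == b))
        .collect();
    match matched.as_slice() {
        [only] => Ok(*only),
        [] => Err("no oneOf branch matched".to_string()),
        many => Err(format!("{} oneOf branches matched: {:?}", many.len(), many)),
    }
}
```

Because u16 cannot be negative, the minimum: 0.0 and format: uint16 in the schema restate a constraint that the Rust type already enforces.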