Below you will find pages that utilize the taxonomy term “Golang”

Prometheus Exporter for USGS Water Data service

I’m a little obsessed with creating Prometheus Exporters:

- Prometheus Exporter for Azure

- Prometheus Exporter for crt.sh

- Prometheus Exporter for Fly.io

- Prometheus Exporter for GoatCounter

- Prometheus Exporter for Google Cloud

- Prometheus Exporter for Koyeb

- Prometheus Exporter for Linode

- Prometheus Exporter for PorkBun

- Prometheus Exporter for updown.io

- Prometheus Exporter for Vultr

All of these were written to scratch an itch.

In the case of the cloud platform exporters (Azure, Fly, Google, Linode, Vultr etc.), the itch is an overriding anxiety that I’ll leave resources deployed on these platforms. Running an exporter that ships alerts to Pushover and Gmail provides me with a support mechanism.

FauxRPC using gRPCurl, Golang and rust

Read FauxRPC + Testcontainers on Hacker News and was intrigued. I spent a little more time “evaluating” this than I’d planned because I’m forcing myself to use rust as much as possible and my ignorance (see below) caused me some challenges.

The technology is interesting and works well. The experience helped me explore Testcontainers too which I’d heard about but not explored until this week.

For my future self:

| What | What? |
|---|---|
| FauxRPC | A general-purpose tool (built using Buf’s Connect) that includes registry and stub (gRPC) services that can be (programmatically) configured (using a Protobuf descriptor) and stubs (example method responses) to help test gRPC implementations. |
| Testcontainers | Write code (e.g. rust) to create and interact with (test) services (running in [Docker] containers). |
| Connect | (More than a) gRPC implementation used by FauxRPC. |
| gRPCurl | A command-line gRPC tool. |

I started following along with FauxRPC’s Testcontainers example but, being unfamiliar with Connect, I wasn’t familiar with its Eliza service. The service is available on demo.connectrpc.com:443 and is described using eliza.proto as part of examples-go. Had I realized this sooner, I would have used this example rather than the Health Checking protocol.

Using Delve to debug Go containers on Kubernetes

An interesting question on Stack Overflow prompted me to understand how to use Visual Studio Code and Delve to remotely debug a Golang app running on Kubernetes (MicroK8s).

The OP is using Gin which was also new to me so the question gave me an opportunity to try out several things.

Sources

A simple healthz handler:

package main

import (
	"flag"
	"log/slog"
	"net/http"

	"github.com/gin-gonic/gin"
)

var (
	addr = flag.String("addr", "0.0.0.0:8080", "HTTP server endpoint")
)

// healthz reports that the server is up.
func healthz(c *gin.Context) {
	c.String(http.StatusOK, "ok")
}

func main() {
	flag.Parse()

	router := gin.Default()
	router.GET("/fib", handler())
	router.GET("/healthz", healthz)

	slog.Info("Server starting")
	// router.Run blocks until the server fails, so its result is always an error
	slog.Error("Server error",
		"err", router.Run(*addr),
	)
}

Containerfile:

Google Cloud Translation w/ gRPC 3 ways

General

You’ll need a Google Cloud project with Cloud Translation (translate.googleapis.com) enabled and a Service Account (and key) with suitable permissions in order to run the following.

BILLING="..." # Your Billing ID (gcloud billing accounts list)
PROJECT="..." # Your Project ID
ACCOUNT="tester"
EMAIL="${ACCOUNT}@${PROJECT}.iam.gserviceaccount.com"

ROLES=(
  "roles/cloudtranslate.user"
  "roles/serviceusage.serviceUsageConsumer"
)

# Create Project
gcloud projects create ${PROJECT}

# Associate Project with your Billing Account
gcloud billing projects link ${PROJECT} \
--billing-account=${BILLING}

# Enable Cloud Translation
gcloud services enable translate.googleapis.com \
--project=${PROJECT}

# Create Service Account
gcloud iam service-accounts create ${ACCOUNT} \
--project=${PROJECT}

# Create Service Account Key
gcloud iam service-accounts keys create ${PWD}/${ACCOUNT}.json \
--iam-account=${EMAIL} \
--project=${PROJECT}

# Update Project IAM permissions
for ROLE in "${ROLES[@]}"
do
  gcloud projects add-iam-policy-binding ${PROJECT} \
  --member=serviceAccount:${EMAIL} \
  --role=${ROLE}
done

For the code, you’ll need to install protoc and preferably have it in your path.

Google Cloud Events protobufs and SDKs

I’ve written before about Ackal’s use of Firestore and subscribing to Firestore document CRUD events:

- Routing Firestore events to GKE with Eventarc

- Cloud Firestore Triggers in Golang using Firestore triggers

I find Google’s Eventarc documentation to be confusing and, in typical Google fashion, even though open-sourced, you often need to do some legwork to find relevant sources, viz:

- Google’s Protobufs for Eventarc (using cloudevents)

- google-cloudevents [1]: convenience (since you can generate these using protoc) language-specific types generated from the above, e.g. google-cloudevents-go, google-cloudevents-python etc.

[1] IIUC Eventarc is the Google service. It carries Google Events that are CloudEvents. These are defined by protocol buffer schemas.

Prost! Tonic w/ a dash of JSON

I naively (!) began exploring JSON marshaling of Protobufs in rust. Other protobuf language SDKs include JSON marshaling making the process straightforward. I was to learn that, in rust, it’s not so simple. Unfortunately, for me, this continues to discourage my further use of rust (rust is just hard).

My goal was to marshal an arbitrary protocol buffer message that included a oneof feature. I was unable to JSON marshal the rust generated by tonic for such a message.

Navigating Koyeb's Golang SDK

Ackal deploys gRPC Health Checking clients in locations around the world in order to health check services that are representative of customer need.

Koyeb offers multiple locations and I spent time today writing a client for Ackal to integrate with Koyeb using the Golang client for the Koyeb API.

The SDK is generated from Koyeb’s OpenAPI (nee Swagger) endpoint using openapi-generator-cli. This is a smart, programmatic solution to ensuring that the SDK always matches the API definition but I found the result is idiosyncratic and therefore a little gnarly.

Access Google Services using gRPC

Google publishes interface definitions of Google APIs (services) that support REST and gRPC in a repo called Google APIs. Google’s SDKs use gRPC to access these services but how do you do this using e.g. gRPCurl?

I wanted to debug Cloud Profiler and its agent makes UpdateProfile RPCs to cloudprofiler.googleapis.com. Cloud Profiler is a more challenging service to debug because (a) it’s publicly “write-only”; and (b) it has complex messages. UpdateProfile sends UpdateProfileRequest messages that include Profile messages that include profile_bytes which are gzip-compressed serialized protos of pprof’s Profile.
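To make the last step concrete, here is a minimal sketch of un-gzipping a profile_bytes value using only Go's standard library. It assumes you have already extracted the bytes from a Profile message; decoding the resulting pprof Profile proto is not shown. The helper names gunzip and gzipBytes are hypothetical, and the demo payload is a stand-in rather than real profile data.

```go
package main

import (
	"bytes"
	"compress/gzip"
	"fmt"
	"io"
	"log"
)

// gunzip decompresses gzip-compressed bytes such as a profile_bytes value.
// The result would be a serialized pprof Profile proto; this sketch does
// not decode it.
func gunzip(b []byte) ([]byte, error) {
	r, err := gzip.NewReader(bytes.NewReader(b))
	if err != nil {
		return nil, err
	}
	defer r.Close()
	return io.ReadAll(r)
}

// gzipBytes is a hypothetical helper used only to fabricate demo input.
func gzipBytes(b []byte) ([]byte, error) {
	var buf bytes.Buffer
	w := gzip.NewWriter(&buf)
	if _, err := w.Write(b); err != nil {
		return nil, err
	}
	// Close flushes the remaining compressed data and the gzip footer.
	if err := w.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

func main() {
	// Stand-in payload; a real value would be serialized proto bytes.
	compressed, err := gzipBytes([]byte("stand-in for serialized pprof bytes"))
	if err != nil {
		log.Fatal(err)
	}
	raw, err := gunzip(compressed)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Printf("%d compressed bytes -> %d raw bytes\n", len(compressed), len(raw))
}
```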

Maintaining Container Images

As I contemplate moving my “thing” into production, I’m anticipating aspects of the application that need maintenance and how this can be automated.

I’d been negligent in the maintenance of some of my container images.

I’m using mostly Go and some Rust as the basis of static(ally-compiled) binaries that run in these containers but not every container has a base image of scratch. scratch is the only base image that doesn’t change and thus the only base image that doesn’t require that container images built FROM it be maintained.

Delegate domain-wide authority using Golang

I’d not used Google’s Domain-wide Delegation from Golang and struggled to find example code.

Google provides Java and Python samples.

Google has a myriad packages implementing its OAuth security and it’s always daunting trying to determine which one to use.

As it happens, I backed into the solution through client.Options

ctx := context.Background()

// Google Workspace APIs don't use IAM but do use OAuth scopes
// Scopes used here must be reflected in the scopes on the
// Google Workspace Domain-wide Delegate client
scopes := []string{ ... }

// Delegates on behalf of this Google Workspace user
subject := "a@google-workspace-email.com"

creds, _ := google.FindDefaultCredentialsWithParams(
	ctx,
	google.CredentialsParams{
		Scopes:  scopes,
		Subject: subject,
	},
)

opts := option.WithCredentials(creds)
service, _ := admin.NewService(ctx, opts)

In this case NewService applies to Google’s Golang Admin SDK API although the pattern of NewService(ctx) or NewService(ctx, opts), where opts is an option.ClientOption, is consistent across Google’s Golang libraries.

Basic programmatic access to GitHub Issues

It’s been a while!

I’ve been spending time writing Bash scripts and a web site but neither has been sufficiently creative that I’ve felt it worth a blog post.

As I’ve been finalizing the web site, I needed an Issue Tracker and decided to leverage GitHub(’s Issues).

As a former Googler, I’m familiar with Google’s (excellent) internal issue tracking tool (Buganizer) and its public manifestation, Issue Tracker. Google documents Issue Tracker and its Issue type which I’ve mercilessly plagiarized in my implementation.

Golang Structured Logging w/ Google Cloud Logging (2)

UPDATE There’s an issue with my naive implementation of RenderValuesHook as described in this post. I summarized the problem in this issue where I’ve outlined (hopefully) a more robust solution.

Recently, I described how to configure Golang logging so that user-defined key-values applied to the logs are parsed when ingested by Google Cloud Logging.

Here’s an example of what we’re trying to achieve. This is an example Cloud Logging log entry that incorporates user-defined labels (see dog:freddie and foo:bar) and a readily-queryable jsonPayload:

Golang Structured Logging w/ Google Cloud Logging

I’ve multiple components in an app and these are deployed across multiple Google Cloud Platform (GCP) services: Kubernetes Engine, Cloud Functions, Cloud Run, etc. Almost everything is written in Golang and I started the project using go-logr.

logr is in two parts: a Logger that you use to write log entries; a LogSink (adaptor) that consumes log entries and outputs them to a specific log implementation.
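The two-part split can be sketched with a couple of small types. This is not logr's actual API: Sink and Logger below are simplified, hypothetical stand-ins (logr's real LogSink has more methods), and lineSink is an invented backend that just collects formatted lines.

```go
package main

import (
	"fmt"
	"strings"
)

// Sink is a simplified, hypothetical stand-in for logr's LogSink:
// it receives entries and writes them to a concrete backend.
type Sink interface {
	Info(msg string, keysAndValues ...any)
}

// Logger is a simplified, hypothetical stand-in for logr's Logger:
// application code calls it and never touches the backend directly.
type Logger struct {
	sink Sink
}

// Info forwards the entry to whichever sink the Logger was built with.
func (l Logger) Info(msg string, keysAndValues ...any) {
	l.sink.Info(msg, keysAndValues...)
}

// lineSink is an invented backend that renders each entry as one
// "msg key=value ..." line and keeps it in memory.
type lineSink struct {
	lines []string
}

func (s *lineSink) Info(msg string, keysAndValues ...any) {
	var b strings.Builder
	b.WriteString(msg)
	// Keys and values arrive as alternating pairs; a trailing key
	// without a value is ignored.
	for i := 0; i+1 < len(keysAndValues); i += 2 {
		fmt.Fprintf(&b, " %v=%v", keysAndValues[i], keysAndValues[i+1])
	}
	s.lines = append(s.lines, b.String())
}

func main() {
	sink := &lineSink{}
	logger := Logger{sink: sink}
	logger.Info("starting", "dog", "freddie", "foo", "bar")
	fmt.Println(sink.lines[0])
}
```

Swapping the backend means constructing the Logger with a different Sink; the calling code is unchanged.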

Initially, I defaulted to using stdr which is a LogSink for Go’s standard logging implementation. Something similar to the module’s example:

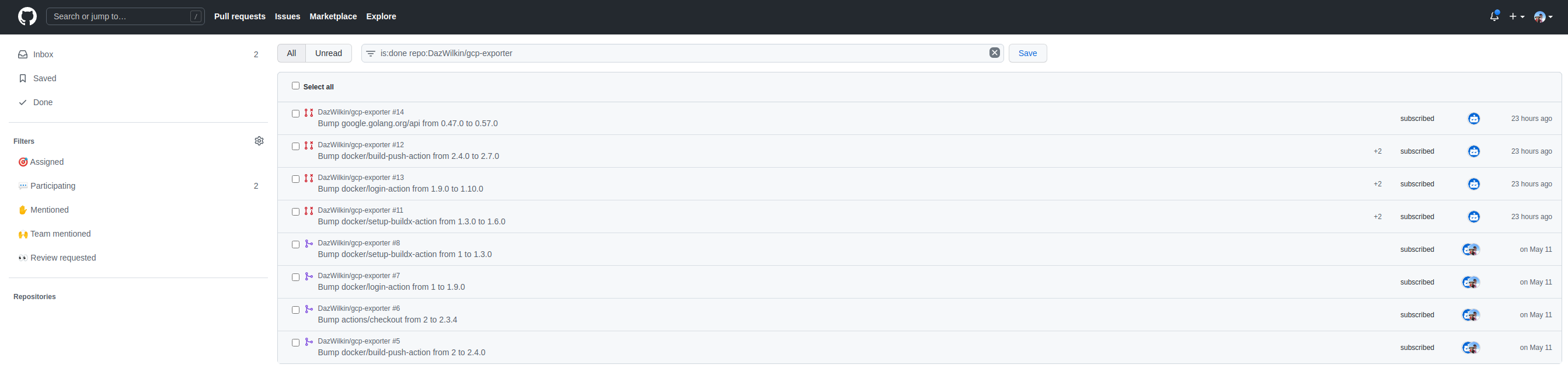

GitHub help with dependency management

This is very useful:

I am building an application that comprises multiple repos. I continue to procrastinate on whether using multiple repos vs. a monorepo was a good idea but, an issue that I have (had) is the need to ensure that the repos’ contents are using current|latest modules. GitHub can help.

Most of the application is written in Golang with a smattering of Rust and some JavaScript.

gRPC Interceptors and in-memory gRPC connections

For… reasons, I wanted to pre-filter gRPC requests to check for authorization. Authorization is implemented as a ‘micro-service’ and I wanted the authorization server to run in the same process as the gRPC client.

TL;DR:

- Shiju’s “Writing gRPC Interceptors in Go” is great

- This Stack Overflow answer ostensibly for writing unit tests for gRPC got me an in-process server

What follows stands on these folks’ shoulders…

A key motivator for me to write blog posts is that it helps me ensure that I understand things. Writing this post, I realized I’d not researched gRPC Interceptors and, as luck would have it, I found some interesting content, not on grpc.io but on the grpc-ecosystem repo, specifically Go gRPC middleware. But, I refer again to Shiju’s clear and helpful “Writing gRPC Interceptors in Go”.

Stripe

It’s been almost a month since my last post. I’ve been occupied learning Stripe and integrating it into an application that I’m developing. The app benefits from a billing mechanism for prospective customers and, as far as I can tell, Stripe is the solution. I’d be interested in hearing perspectives on alternatives.

As with any platform, there’s good and bad and I’ll summarize my perspective on Stripe here. It’s been some time since I developed in JavaScript and this lack of familiarity has meant that the solution took longer than I wanted to develop. That said, before this component, I developed integration with Firebase Authentication and that required JavaScript’ing too and that was much easier (and more enjoyable).

Struggling with Golang structs

Julia’s post Blog about what you’ve struggled with resonates because I’ve been struggling with Golang structs in a project. Not the definitions of structs but seemingly needing to reproduce them across the project. I realize that each instance of these resources differs from the others but I’m particularly concerned by having to duplicate method implementations on them.

I’m kinda hoping that I see the solution to my problem by writing it out. If you’re reading this, I didn’t :-(

Firestore Golang Timestamps & Merging

I’m using Google’s Golang SDK for Firestore. The experience is excellent and I’m quickly becoming a fan of Firestore. However, as a Golang Firestore developer, I’m feeling less loved and some of the concepts in the database were causing me a conundrum.

I’m still not entirely certain that I have Timestamps nailed but… I learned an important lesson on the auto-creation of Timestamps in documents and how to retain these values.

Cloud Firestore Triggers in Golang

I was pleased to discover that Google provides a non-Node.JS mechanism to subscribe to and act upon Firestore triggers, Google Cloud Firestore Triggers. I’ve nothing against Node.JS but, for the project I’m developing, everything else is written in Golang. It’s good to keep it all in one language.

I’m perplexed that Cloud Functions still (!) only supports Go 1.13 (03-Sep-2019). Even Go 1.14 (25-Feb-2020) was released pre-pandemic and we’re now running on 1.16. Come on Google!

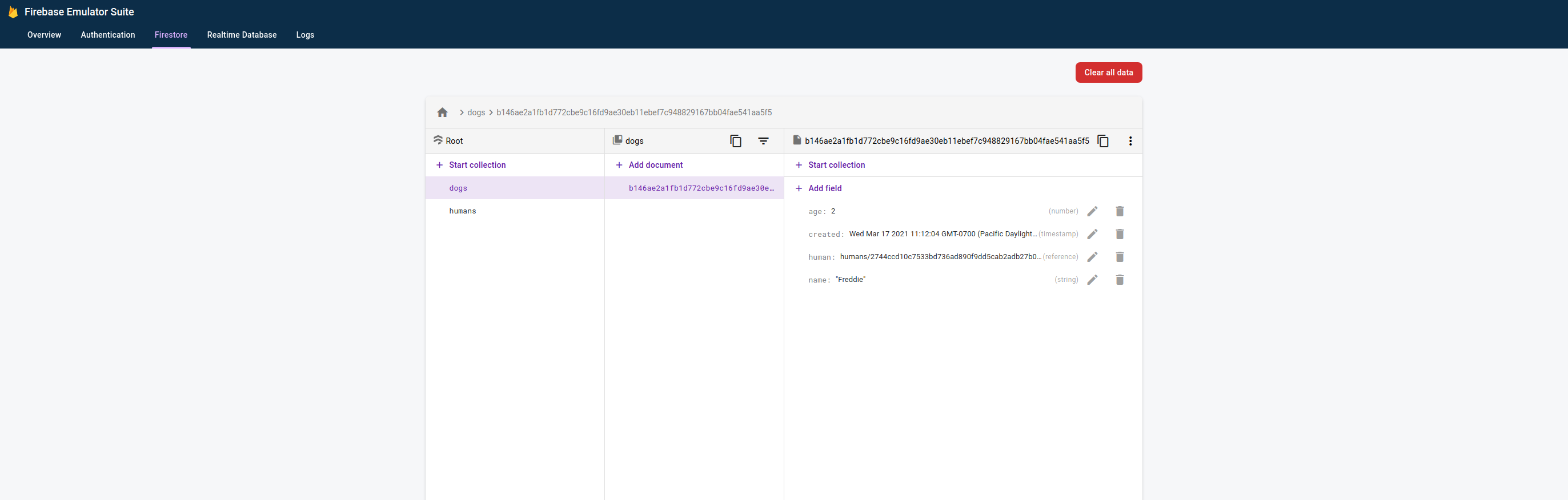

Using Golang with the Firestore Emulator

This works great but it wasn’t clearly documented for non-Firebase users. I assume it will work, as well, for any of the client libraries (not just Golang).

Assuming you have some (Golang) code (in this case using the Google Cloud Client Library) that interacts with a Firestore database. Something of the form:

package main

import (
	"context"
	"crypto/sha256"
	"fmt"
	"log"
	"os"
	"time"

	"cloud.google.com/go/firestore"
)

// hash returns the hex-encoded SHA-256 of s; used to derive document IDs
func hash(s string) string {
	h := sha256.New()
	h.Write([]byte(s))
	return fmt.Sprintf("%x", h.Sum(nil))
}

type Dog struct {
	Name    string                 `firestore:"name"`
	Age     int                    `firestore:"age"`
	Human   *firestore.DocumentRef `firestore:"human"`
	Created time.Time              `firestore:"created"`
}

func NewDog(name string, age int, human *firestore.DocumentRef) Dog {
	return Dog{
		Name:    name,
		Age:     age,
		Human:   human,
		Created: time.Now(),
	}
}

func (d *Dog) ID() string {
	return hash(d.Name)
}

type Human struct {
	Name string `firestore:"name"`
}

func (h *Human) ID() string {
	return hash(h.Name)
}

func main() {
	ctx := context.Background()
	project := os.Getenv("PROJECT")

	client, err := firestore.NewClient(ctx, project)
	if err != nil {
		log.Fatal(err)
	}
	defer client.Close()

	// The client library reads FIRESTORE_EMULATOR_HOST itself; this only logs it
	if value := os.Getenv("FIRESTORE_EMULATOR_HOST"); value != "" {
		log.Printf("Using Firestore Emulator: %s", value)
	}

	me := Human{
		Name: "me",
	}
	meDocRef := client.Collection("humans").Doc(me.ID())
	if _, err := meDocRef.Set(ctx, me); err != nil {
		log.Fatal(err)
	}

	freddie := NewDog("Freddie", 2, meDocRef)
	freddieDocRef := client.Collection("dogs").Doc(freddie.ID())
	if _, err := freddieDocRef.Set(ctx, freddie); err != nil {
		log.Fatal(err)
	}
}

Then you can interact instead with the Firestore Emulator.

Programmatically deploying Cloud Run services (Golang|Python)

Phew! Programmatically deploying Cloud Run services should be easy but I didn’t find it so.

My issues were that the Cloud Run Admin (!) API is poorly documented and it uses non-standard endpoints (thanks Sal!). Here, for others who may struggle with this, is how I got this to work.

Goal

Programmatically (have Golang, Python, want Rust) deploy services to Cloud Run.

i.e. achieve this:

gcloud run deploy ${NAME} \
--image=${IMAGE} \
--platform=managed \
--no-allow-unauthenticated \
--region=${REGION} \
--project=${PROJECT}

TRICK

--log-http is your friend

Dapr

It’s a good name, I read it as “dapper” but I frequently type “darp” :-(

Was interested to read that Dapr is now v1.0 and decided to check it out. I was initially confused between Dapr and service mesh functionality. But, having used Dapr, it appears to be more focused in aiding the development of (cloud-native) (distributed) apps by providing developers with abstractions for e.g. service discovery, eventing, observability whereas service meshes feel (!) more oriented towards simplifying the deployment of existing apps. Both use the concept of proxies, deployed alongside app components (as sidecars on Kubernetes) to provide their functionality to apps.

Kubernetes Webhooks

I spent some time last week writing my first admission webhook for Kubernetes. I wrote the handler in Golang because I’m most familiar with Golang and because, as Kubernetes’ native language, I was more confident that the necessary SDKs would exist and that the documentation would likely use Golang by default. I struggled to find useful documentation and so this post is to help you (and me!) remember how to do this next time!
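As a rough sketch of the shape of such a handler, the following uses only the standard library and hand-rolled structs covering a small subset of the admission.k8s.io/v1 AdmissionReview JSON; real code would more likely use the types from k8s.io/api/admission/v1. The policy (reject anything in a namespace called "forbidden"), the /validate path and the function names are all hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
)

// AdmissionReview is a hand-rolled subset of the admission.k8s.io/v1 type.
type AdmissionReview struct {
	APIVersion string    `json:"apiVersion"`
	Kind       string    `json:"kind"`
	Request    *Request  `json:"request,omitempty"`
	Response   *Response `json:"response,omitempty"`
}

// Request carries only the fields this sketch needs.
type Request struct {
	UID       string `json:"uid"`
	Namespace string `json:"namespace"`
}

// Response must echo the request's UID and say whether it is allowed.
type Response struct {
	UID     string  `json:"uid"`
	Allowed bool    `json:"allowed"`
	Status  *Status `json:"status,omitempty"`
}

// Status explains a rejection to the caller.
type Status struct {
	Message string `json:"message"`
}

// decide applies a hypothetical policy: reject the "forbidden" namespace.
func decide(in AdmissionReview) (AdmissionReview, error) {
	if in.Request == nil {
		return AdmissionReview{}, fmt.Errorf("review has no request")
	}
	out := AdmissionReview{
		APIVersion: "admission.k8s.io/v1",
		Kind:       "AdmissionReview",
		Response:   &Response{UID: in.Request.UID, Allowed: true},
	}
	if in.Request.Namespace == "forbidden" {
		out.Response.Allowed = false
		out.Response.Status = &Status{Message: "namespace is not permitted"}
	}
	return out, nil
}

// handle decodes the review, applies decide and writes the response.
func handle(w http.ResponseWriter, r *http.Request) {
	var in AdmissionReview
	if err := json.NewDecoder(r.Body).Decode(&in); err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	out, err := decide(in)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	w.Header().Set("Content-Type", "application/json")
	json.NewEncoder(w).Encode(out)
}

func main() {
	// Registered but not served here: Kubernetes only calls webhooks over
	// HTTPS, so a real server would use http.ListenAndServeTLS.
	http.HandleFunc("/validate", handle)

	out, err := decide(AdmissionReview{Request: &Request{UID: "abc", Namespace: "forbidden"}})
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("allowed=%t message=%q\n", out.Response.Allowed, out.Response.Status.Message)
}
```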

Akri

For the past couple of weeks, I’ve been playing around with Akri, a Microsoft (DeisLabs) project for building a connected edge with Kubernetes. Kubernetes, IoT, Rust (and Golang) make this all compelling to me.

Initially, I deployed an Akri End-to-End to MicroK8s on Google Compute Engine (link) and Digital Ocean (link). But I was interested to create my own example and so have proposed a very (!) simple HTTP-based protocol.

This blog summarizes my thoughts about Akri and an explanation of the HTTP protocol implementation in the hope that this helps others.

GitHub Actions && GitHub Container Registry

You know when you start something and then regret it!? I think I’ll be sticking with Google Cloud Build; GitHub Actions appears functional and useful but I found the documentation to be confusing and limited and struggled to get a simple container image build|push working.

I’ve long used Docker Hub but am planning to use it less as a result of the planned changes. I want to see Docker succeed and to do so it needs to find a way to make money but, there are free alternatives including the new GitHub Container Registry and the very very cheap Google Container Registry.

Trillian Map Mode

Chatting with one of Google’s Trillian team, I was encouraged to explore Trillian’s Map Mode. The following is the result of some spelunking through this unfamiliar cave. I can’t provide any guarantee that this usage is correct or sufficient.

Here’s the repo: https://github.com/DazWilkin/go-trillian-map

I’ve written about Trillian Log Mode elsewhere.

I uncovered use of Trillian Map Mode through Trillian’s integration tests. I’m unclear on the distinction between TrillianMapClient and TrillianMapWriteClient but the latter served most of my needs.

WASM Transparency

I’ve been playing around with a proof-of-concept combining WASM and Trillian. The hypothesis was to explore using WASM as a form of chaincode with Trillian. The project works but it’s far from being a chaincode-like solution.

Let’s start with a couple of (trivial) examples and then I’ll explain what’s going on and how it’s implemented.

2020/08/14 18:42:17 [main:loop:dynamic-invoke] Method: mul

2020/08/14 18:42:17 [random:New] Message

2020/08/14 18:42:17 [random:New] Float32

2020/08/14 18:42:17 [random:New] Float32

2020/08/14 18:42:17 [random:New] Message

2020/08/14 18:42:17 [random:New] Float32

2020/08/14 18:42:17 [random:New] Float32

2020/08/14 18:42:17 [Client:Invoke] Metadata: complex.wasm

2020/08/14 18:42:17 [main:loop:dynamic-invoke] Success: result:{real:0.036980484 imag:0.3898267}

After shipping a Rust-sourced WASM solution (complex.wasm) to the WASM transparency server, the client invokes a method mul that’s exposed by it using a dynamically generated request message and outputs the response. Woo hoo! Yes, an expensive way to multiply complex numbers.

waPC and MsgPack (Rust|Golang)

As my reader will know (Hey Mom!), I’ve been noodling around with WASM and waPC. I’ve been exploring ways to pass structured messages across the host:guest boundary.

Protobufs was my first choice. @KevinHoffman created waPC and waSCC and he explained to me that waSCC uses Message Pack.

It’s slightly surprising to me (still) that technologies like this exist with everyone else seemingly using them and I’ve not heard of them. I don’t expect to know everything but I’m surprised I’ve not stumbled upon msgpack until now.

Golang Protobuf APIv2

Google has a new Golang Protobuf API, APIv2 (google.golang.org/protobuf) superseding APIv1 (github.com/golang/protobuf). If your code is importing google.golang.org/protobuf, you’re using APIv2. Otherwise, you should consult the docs because Google reimplemented APIv1 atop APIv2. One challenge this caused me, as someone who does not use protobufs and gRPC on a daily basis, is that gRPC code generation is being removed from protoc-gen-go, the Golang plugin that has, until now, also generated gRPC service bindings.

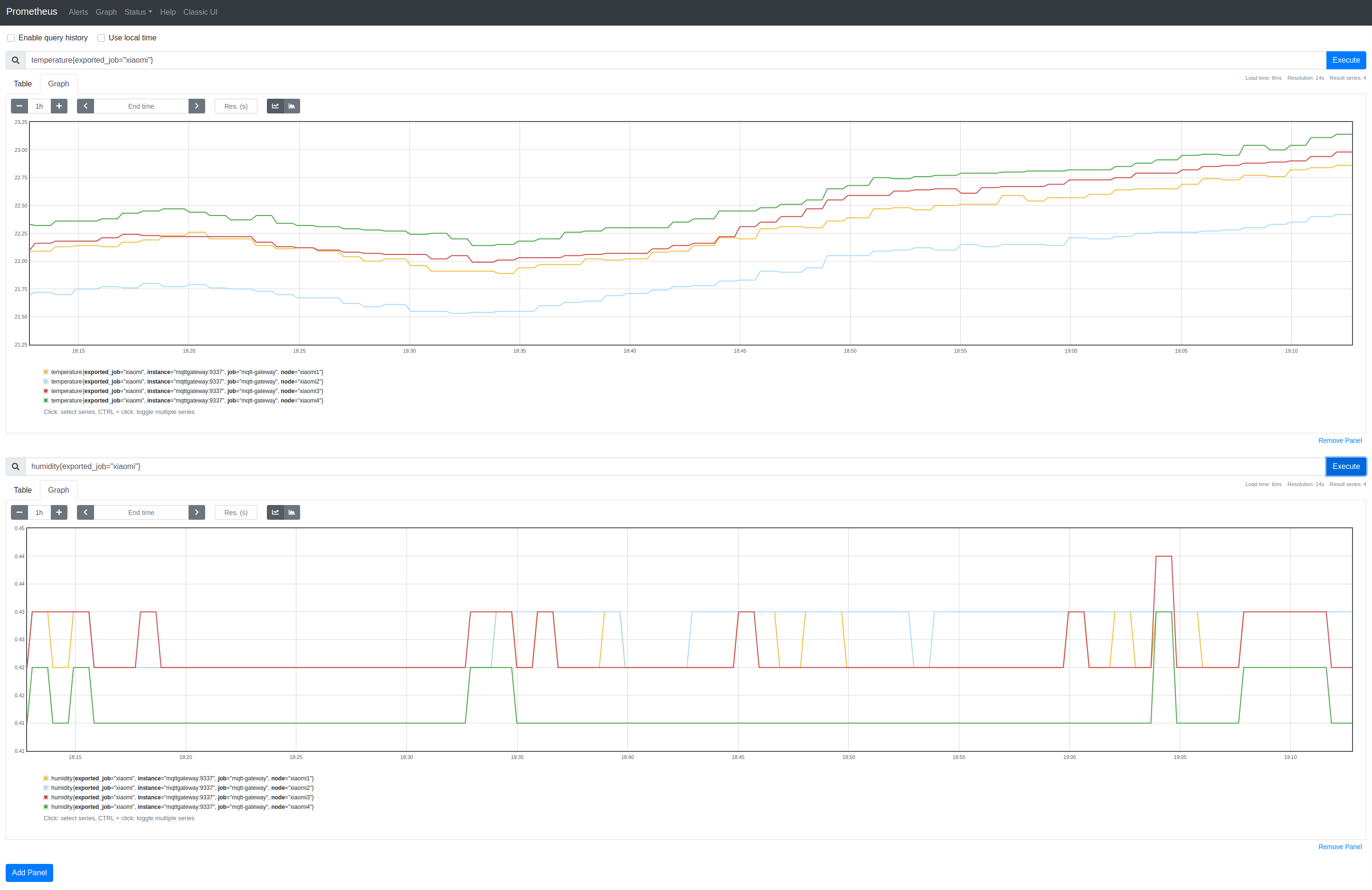

Golang Xiaomi Bluetooth Temperature|Humidity (LYWSD03MMC) 2nd Gen

Well, this became more of an adventure than I’d originally wanted but, after learning some BLE and with the help of others (thanks Jonathan, JsBergbau), I’ve sample code that connects to 4 Xiaomi 2nd gen. thermometers, subscribes to readings and publishes the data to MQTT. From there, I’m scraping it using Inuits MQTTGateway into Prometheus.

Repo: https://github.com/DazWilkin/gomijia2

Thanks|Credit:

- Jonathan McDowell for gomijia and help

- JsBergbau for help

Background

I’ve been playing around with ESPHome and blogged about my very positive experience in ESPHome, MQTT, Prometheus and almost Cloud IoT. I’ve ordered a couple of ESP32-DevKitC and hope this will enable me to connect to Google Cloud IoT.

gRPC, Cloud Run & Endpoints

<3 Google but there’s quite often an assumption that we’re all sitting around the engineering table and, of course, we’re not.

Cloud Endpoints is a powerful offering but – IMO – it’s super confusing to understand and complex to deploy.

If you’re familiar with the motivations behind service meshes (e.g. Istio), Cloud Endpoints fits in a similar niche (“neesh” or “nitch”?). The underlying ambition is that, developers can take existing code and by adding a proxy (or sidecar), general-purpose abstractions, security, logging etc. may be added.

Golang gRPC Cloud Run

Update: 2020-03-24: Since writing this post, I’ve contributed Golang and Rust samples to Google’s project. I recommend you start there.

Google explained how to run gRPC servers with Cloud Run. The examples are good but only Python and Node.JS:

Missing Golang…. until now ;-)

I had problems with 1.14 and so I’m using 1.13.

Project structure

I’ll tidy up my repo but the code may be found:

Google's New Golang SDK for Protobufs

Google has released a new Golang SDK for protobuf. In the [announcement], a useful tool to redact properties is described. If like me, this is somewhat novel to you, here’s a mashup using Google’s Protocol Buffer Basics w/ redaction.

To be very clear, as it’s an important distinction:

| Version | Repo | Docs |

|---|---|---|

| v2 | google.golang.org/protobuf | Docs |

| v1 | github.com/golang/protobuf | Docs |

Project

Here’s my project structure:

.
├── protoc-3.11.4-linux-x86_64
│   ├── bin
│   │   └── protoc
│   ├── include
│   │   └── google
│   └── readme.txt
└── src
    ├── go.mod
    ├── go.sum
    ├── main.go
    ├── protos
    │   ├── addressbook.pb.go
    │   └── addressbook.proto
    └── README.md

You may structure as you wish.

OriginStamp: Verifying Proofs

Recently, I wrote about some initial adventures with OriginStamp

Using OriginStamp’s UI or API, submitting a hash results in transactions being submitted to Bitcoin, Ethereum and a German newspaper.

Using the API, it’s possible to query OriginStamp’s service for a proof. This post explains how to verify such a proof.

The diligent reader among you (Hey Mom!) will recall that I submitted a hash for the message:

Frederik Jack is a bubbly Border Collie

The SHA-256 hash of this message is:

FreeTSA & Digitorus' Timestamp SDK

I wrote recently about some exploration of Timestamping with OriginStamp. Since writing that post, I had some supportive feedback from the helpful folks at OriginStamp and plan to continue exploring that solution.

Meanwhile, OriginStamp exposed me to timestamping and trusted timestamping and I discovered freeTSA.org.

What’s the point? These services provide authoritative proof of the existence of a digital asset before some point in time; OriginStamp provides a richer service and uses multiple timestamp authorities including Bitcoin, Ethereum and rather interestingly a German Newspaper’s Trusted Timestamp.

OriginStamp Python|Golang SDK Examples

A friend mentioned OriginStamp to me.

NB There are 2 sites: originstamp.com and originstamp.org.

It’s an interesting project.

It’s a solution for providing auditable proof that you had a(ccess to) some digital thing before a certain date. OriginStamp provides user-|developer-friendly means to submit files|hashes (of your content) and have these bundled into transactions that are submitted to e.g. Bitcoin.

I won’t attempt to duplicate the narrative here, review OriginStamp’s site and some of its content.

Cloud Build wishlist: Mountable Golang Modules Proxy

I think it would be valuable if Google were to provide volumes in Cloud Build of package registries (e.g. Go Modules; PyPi; Maven; NPM etc.).

Google provides a mirror of a subset of Docker Hub. This confers several benefits: Google’s imprimatur; speed (latency); bandwidth; and convenience.

The same benefits would apply to package registries.

In the meantime, there’s a hacky way to gain some of the benefits of these when using Cloud Build.

In the following example, I’ll show an approach using Golang Modules and Google’s module proxy aka proxy.golang.org.

Setting up a GCE Instance as an Inlets Exit Node

The prolific Alex Ellis has a new project, Inlets.

Here’s a quick tutorial using Google Cloud Platform’s (GCP) Compute Engine (GCE).

NB I’m using one of Google’s “Always free” f1-micro instances but you may still pay for network *gress and storage

Assumptions

I’m assuming you’ve a Google account, have used GCP and have a billing account established, i.e. the following returns at least one billing account:

gcloud beta billing accounts list

If you’ve only one billing account and it’s the one you wish to use, then you can:

Google Fit

I’ve spent a few days exploring [Google Fit SDK] as I try to wean myself from my obsession with metrics (of all forms). A quick Googling got me to Robert’s Export Google Fit Daily Steps, Weight and Distance to a Google Sheet. This works and is probably where I should have stopped… avoiding the rabbit hole that I’ve been down…

I threw together a simple Golang implementation of the SDK using Google’s Golang API Client Library. Thanks to Robert’s example, I was able to infer some of the complexity of this API, particularly in its use of data types, data sources and datasets. Having used Stackdriver in my previous life, Google Fit’s structure bears more than a passing resemblance to Stackdriver’s data model and its use of resource types and metric types.

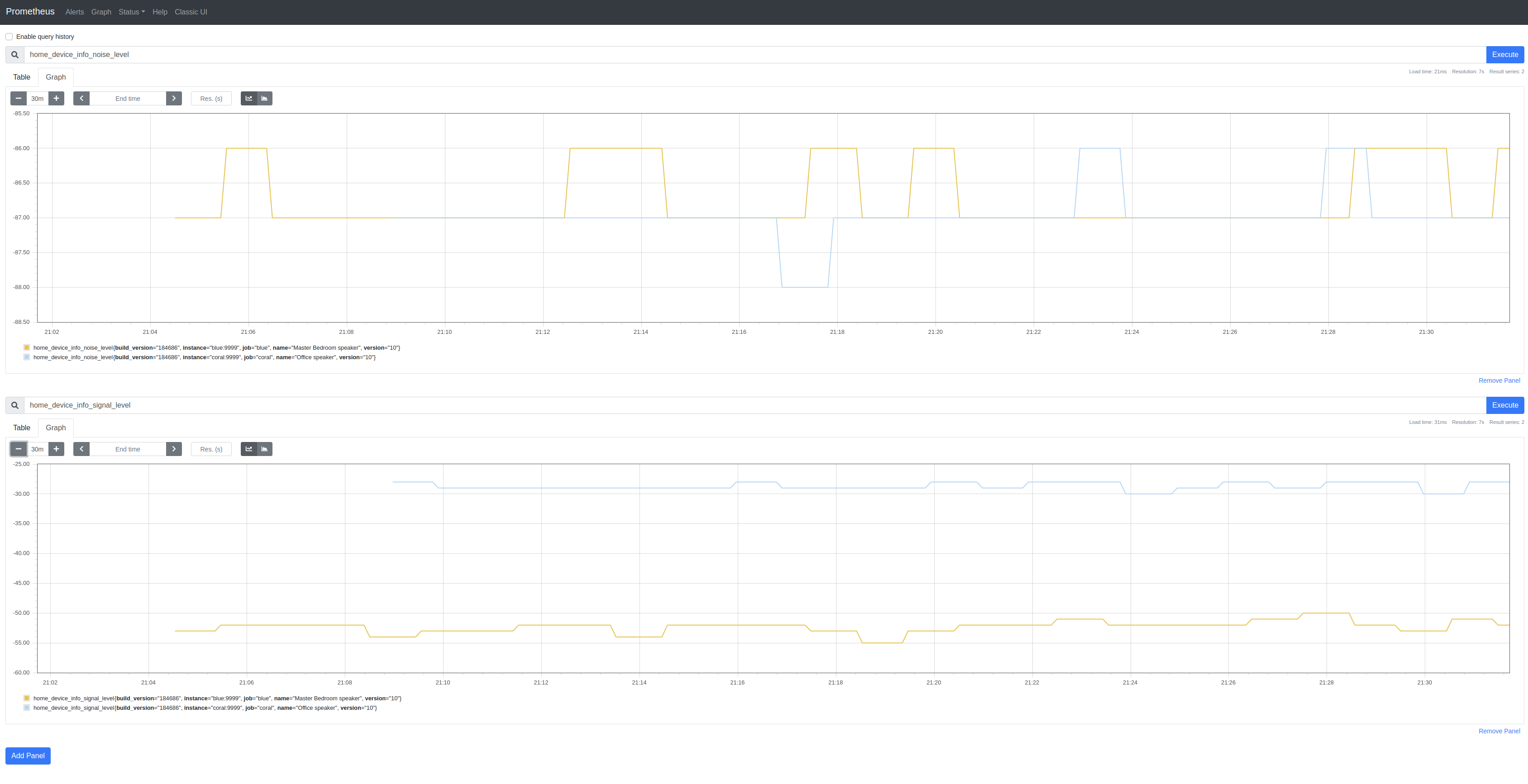

Google Home Exporter

I’m obsessing over Prometheus exporters. First came Linode Exporter, then GCP Exporter and, on Sunday, I stumbled upon a reverse-engineered API for Google Home devices and so wrote a very basic Google Home SDK and a similarly basic Google Home Exporter:

The SDK only implements /setup/eureka_info and then only some of the returned properties. There’s not a lot of metric-like data to use besides SignalLevel (signal_level) and NoiseLevel (noise_level). I’m not clear on the meaning of some of the properties.
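A minimal sketch of reading those two properties, using only the standard library. The struct and function names are hypothetical (this is not the SDK's code), the numeric type of the fields is an assumption, and fakeDevice is a stand-in server with invented values so that the example runs without a real device.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
)

// EurekaInfo holds only the two metric-like properties named above.
// float64 is an assumption; it also suits Prometheus gauges.
type EurekaInfo struct {
	SignalLevel float64 `json:"signal_level"`
	NoiseLevel  float64 `json:"noise_level"`
}

// fetchEurekaInfo GETs /setup/eureka_info from baseURL and decodes the JSON.
// Properties not declared on EurekaInfo are ignored by the decoder.
func fetchEurekaInfo(baseURL string) (EurekaInfo, error) {
	var info EurekaInfo
	resp, err := http.Get(baseURL + "/setup/eureka_info")
	if err != nil {
		return info, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return info, fmt.Errorf("unexpected status: %s", resp.Status)
	}
	err = json.NewDecoder(resp.Body).Decode(&info)
	return info, err
}

// fakeDevice is a hypothetical stand-in for a real device.
// The values it returns are invented for the demo.
func fakeDevice() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/setup/eureka_info", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"signal_level": -55, "noise_level": -90, "name": "kitchen"}`)
	})
	return httptest.NewServer(mux)
}

func main() {
	srv := fakeDevice()
	defer srv.Close()

	info, err := fetchEurekaInfo(srv.URL)
	if err != nil {
		log.Print(err)
		return
	}
	fmt.Printf("signal_level=%v noise_level=%v\n", info.SignalLevel, info.NoiseLevel)
}
```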

Google Cloud Platform (GCP) Exporter

Earlier this week I discussed a Linode Prometheus Exporter.

I added metrics for Digital Ocean’s Managed Kubernetes service to @metalmatze’s Digital Ocean Exporter.

This left metrics for Google Cloud Platform (GCP) which has, for many years, been my primary cloud platform. So, today I wrote Prometheus Exporter for Google Cloud Platform.

All 3 of these exporters follow the template laid down by @metalmatze and, because each of these services has a well-written Golang SDK, it’s straightforward to implement an exporter for each of them.

Linode Prometheus Exporter

I enjoy using Prometheus and have toyed around with it for some time particularly in combination with Kubernetes. I signed up with Linode [referral] compelled by the addition of a managed Kubernetes service called Linode Kubernetes Engine (LKE). I have an anxiety that I’ll inadvertently leave resources running (unused) on a cloud platform. Instead of refreshing the relevant billing page, it struck me that Prometheus may (not yet proven) help.

The hypothesis is that a combination of a cloud-specific Prometheus exporter reporting aggregate uses of e.g. Linodes (instances), NodeBalancers, Kubernetes clusters etc., could form the basis of an alert mechanism using Prometheus’ alerting.
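To illustrate the hypothesis, here is a minimal sketch of an exporter that reports aggregate resource counts. It hand-writes the Prometheus text exposition format with the standard library instead of using the Prometheus Go client, and it is not the Linode exporter's code. The metric name cloud_resources, its kind label and countResources (a stand-in for real cloud SDK calls, returning invented numbers) are all hypothetical.

```go
package main

import (
	"fmt"
	"net/http"
	"sort"
	"strings"
)

// countResources is a hypothetical stand-in for calls to a cloud SDK.
// The counts are invented for the demo.
func countResources() map[string]int {
	return map[string]int{
		"instances":           2,
		"kubernetes_clusters": 1,
		"nodebalancers":       0,
	}
}

// renderMetrics writes one gauge sample per resource kind in the
// Prometheus text exposition format, sorted by kind for stable output.
func renderMetrics(counts map[string]int) string {
	kinds := make([]string, 0, len(counts))
	for kind := range counts {
		kinds = append(kinds, kind)
	}
	sort.Strings(kinds)

	var b strings.Builder
	b.WriteString("# HELP cloud_resources Number of resources currently deployed.\n")
	b.WriteString("# TYPE cloud_resources gauge\n")
	for _, kind := range kinds {
		fmt.Fprintf(&b, "cloud_resources{kind=%q} %d\n", kind, counts[kind])
	}
	return b.String()
}

// metricsHandler serves the current counts to a Prometheus scrape.
func metricsHandler(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/plain; version=0.0.4")
	fmt.Fprint(w, renderMetrics(countResources()))
}

func main() {
	http.HandleFunc("/metrics", metricsHandler)

	// Print once instead of serving so that the sketch terminates; a real
	// exporter would call http.ListenAndServe here.
	fmt.Print(renderMetrics(countResources()))
}
```

An alert could then fire whenever a sample such as cloud_resources{kind="instances"} stays above zero for longer than expected.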

PyPi Transparency

I’ve been noodling around with another Trillian personality.

It’s another in a theme that interests me: providing tamperproof logs for the packages in the popular package management registries.

The Golang team recently announced Go Module Mirror which is built atop Trillian. It seems to me that all the package registries (Go Modules, npm, Maven, NuGet etc.) would benefit from tamperproof logs hosted by a trusted 3rd-party.

As you may have guessed, PyPi Transparency is a log for PyPi packages. PyPi is comprehensive, definitive and trusted but, as with Go Module Mirror, it doesn’t hurt to provide a backup of some of its data. In the case of this solution, Trillian hosts a log of self-calculated SHA-256 hashes for Python packages that are added to it.

Cloud Functions Simple(st) HTTP Multi-host Proxy

Tweaked yesterday’s solution so that it will randomly select one from several hosts with which it’s configured.

package proxy

import (
	"log"
	"math/rand"
	"net/http"
	"net/url"
	"os"
	"strings"
	"time"
)

func robin() {
	hostsList := os.Getenv("PROXY_HOST")
	if hostsList == "" {
		log.Fatal("'PROXY_HOST' environment variable should contain comma-separated list of hosts")
	}

	// Comma-separated list of hosts
	hosts := strings.Split(hostsList, ",")
	urls := make([]*url.URL, len(hosts))
	for i, host := range hosts {
		origin := Endpoint{
			Host: host,
			Port: os.Getenv("PROXY_PORT"),
		}
		// Named u to avoid shadowing the net/url package
		u, err := origin.URL()
		if err != nil {
			log.Fatal(err)
		}
		urls[i] = u
	}

	s := rand.NewSource(time.Now().UnixNano())
	q := rand.New(s)

	Handler = func(w http.ResponseWriter, r *http.Request) {
		// Pick one of the URLs at random
		target := urls[q.Intn(len(urls))]
		log.Printf("[Handler] Forwarding: %s", target.String())
		// Forward to it
		reverseproxy(target, w, r)
	}
}

This requires a minor tweak to the deployment to escape the commas within the PROXY_HOST string to disambiguate these for gcloud:

Cloud Functions Simple(st) HTTP Proxy

I’m investigating the use of LetsEncrypt for gRPC services. I found this straightforward post by Scott Devoid and am going to try this approach.

Before I can do that, I need to be able to publish services (make them Internet-accessible) and would like to try to continue to use GCP for free.

Some time ago, I wrote about using the excellent Microk8s on GCP. Using an f1-micro, I’m hoping (!) to stay within the Compute Engine free tier. I’ll also try to be diligent and delete the instance when it’s not needed. This gives me a runtime platform and I can expose services to the Instance’s (Node)Ports but, I’d prefer to not be billed for a simple proxy.

pypi-transparency

The goal of pypi-transparency is very similar to the underlying motivation for the Golang team’s Checksum Database (also built with Trillian).

Even though PyPi provides hashes of the content of packages it hosts, the developer must trust that PyPi’s data is consistent. One ambition with pypi-transparency is to provide a companion, tamperproof log of PyPi package files in order to provide a double-check of these hashes.

It is important to understand what this does (and does not) provide. There’s no validation of a package’s content. The only calculation is that, on first observation, a SHA-256 hash is computed of the package’s content and the hash is recorded. If the package is subsequently altered, it’s very probable that the hash will change and this provides a signal to the user that the package’s contents have changed. Because pypi-transparency uses a tamperproof log, it’s very difficult to update the hash recorded in the log to reflect this change. Corollary: pypi-transparency will record the hashes of packages that include malicious code.
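The record-on-first-observation behaviour can be sketched as follows. This is only an illustration: hashLog is a plain in-memory map with none of the tamper resistance that Trillian provides, and the type, method and package names are hypothetical.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// hashLog is a toy, in-memory stand-in for the tamperproof log.
// It maps a package file name to the first hash recorded for it.
type hashLog map[string]string

// observe computes the SHA-256 of content. On first observation the hash
// is recorded; on later observations the recorded hash is left untouched
// and matches reports whether the content still agrees with it.
func (l hashLog) observe(name string, content []byte) (hash string, matches bool) {
	sum := sha256.Sum256(content)
	hash = hex.EncodeToString(sum[:])

	recorded, seen := l[name]
	if !seen {
		l[name] = hash
		return hash, true
	}
	return hash, recorded == hash
}

func main() {
	l := hashLog{}

	// First observation: the hash is recorded, whatever the content is.
	_, ok := l.observe("example-1.0.tar.gz", []byte("original content"))
	fmt.Println("first observation matches:", ok)

	// Altered content: the hash differs from the recorded one.
	_, ok = l.observe("example-1.0.tar.gz", []byte("altered content"))
	fmt.Println("altered content matches:", ok)
}
```

Note that the first observation always succeeds, which mirrors the corollary above: content that is malicious when first seen is recorded like any other.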