Prometheus Protobufs and Native Histograms

I responded to a Stack Overflow question, Prometheus metric protocol buffer in gRPC. It piqued my curiosity and got me yak shaving.

Prometheus used to support two exposition formats, text and Protocol Buffers. It then dropped Protocol Buffers and has since re-added them (see Protobuf format). The Protobuf format has returned to support the experimental Native Histograms feature.

I’m interested in adding Native Histogram support to Ackal, so I thought I’d learn more about this metric.

You can learn more from these YouTube videos:

- Julius Volz - Native Histograms in Prometheus
- PromCon EU 2022: Native Histograms in Prometheus
- PromCon EU 2022: PromQL for Native Histograms

It’s straightforward to run a Prometheus server that will scrape targets that export Protobuf format:

```bash
podman run \
  --interactive --tty --rm \
  --publish=9090:9090/tcp \
  prom/prometheus:v2.50.1 \
    --config.file=/etc/prometheus/prometheus.yml \
    --enable-feature=native-histograms \
    --web.enable-lifecycle
```

But I think (!?) you also need an exporter that publishes some Native Histogram metrics:

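```go
package main

import (
	"log/slog"
	"math/rand/v2"
	"net/http"
	"os"
	"time"

	"github.com/prometheus/client_golang/prometheus"
	"github.com/prometheus/client_golang/prometheus/promhttp"
)

var (
	// Setting NativeHistogramBucketFactor (> 1) makes this a Native Histogram;
	// consecutive bucket boundaries grow by a factor of at most 1.1.
	foo = prometheus.NewHistogram(prometheus.HistogramOpts{
		Name:                        "foo",
		Help:                        "The foo metric",
		NativeHistogramBucketFactor: 1.1,
	})
)

func main() {
	// Observe a random value in [0, 100) every second
	go func() {
		for {
			num := rand.Float64() * 100
			slog.Info("go", "num", num)
			foo.Observe(num)
			time.Sleep(1 * time.Second)
		}
	}()

	// Use a dedicated registry rather than the default one
	registry := prometheus.NewRegistry()
	registry.MustRegister(foo)

	// HandlerOpts.Registry also registers the handler's own
	// promhttp_metric_handler_errors_total counter with this registry
	http.Handle("/metrics", promhttp.HandlerFor(registry, promhttp.HandlerOpts{
		Registry: registry,
	}))

	if err := http.ListenAndServe(":2112", nil); err != nil {
		slog.Error("unable to serve metrics", "err", err)
		os.Exit(1)
	}
}
```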
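The exporter only sets NativeHistogramBucketFactor: 1.1; it never names a schema. My reading of client_golang is that it picks the coarsest schema whose bucket growth factor, 2^(2^-schema), doesn't exceed the requested factor, clamped to the valid range -4..8. Here's a standalone sketch of that calculation (my own reconstruction, not the library's code; schemaFor and growthFactor are hypothetical helpers). It shows why a factor of 1.1 should produce the "schema":3 that appears in the decoded output further down:

```go
package main

import (
	"fmt"
	"math"
)

// schemaFor returns the coarsest Native Histogram schema whose bucket growth
// factor, 2^(2^-schema), is no larger than bucketFactor.
// Assumption: this mirrors client_golang, including the clamp to [-4, 8].
func schemaFor(bucketFactor float64) int32 {
	if bucketFactor <= 1 {
		panic("bucketFactor must be > 1")
	}
	floor := math.Floor(math.Log2(math.Log2(bucketFactor)))
	switch {
	case floor <= -8:
		return 8 // finest supported resolution
	case floor >= 4:
		return -4 // coarsest supported resolution
	default:
		return -int32(floor)
	}
}

// growthFactor is the ratio between consecutive bucket boundaries for a schema.
func growthFactor(schema int32) float64 {
	return math.Pow(2, math.Pow(2, -float64(schema)))
}

func main() {
	s := schemaFor(1.1)
	fmt.Printf("schema=%d growth factor=%.4f\n", s, growthFactor(s))
}
```

With 1.1, log2(log2(1.1)) is about -2.86, which floors to -3, giving schema 3 and a growth factor of 2^(1/8), about 1.0905.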

Browsing the endpoint (http://localhost:2112/metrics):

```
# HELP foo The foo metric
# TYPE foo histogram
foo_bucket{le="+Inf"} 10
foo_sum 523.0613213986003
foo_count 10
# HELP promhttp_metric_handler_errors_total Total number of internal errors encountered by the promhttp metric handler.
# TYPE promhttp_metric_handler_errors_total counter
promhttp_metric_handler_errors_total{cause="encoding"} 0
promhttp_metric_handler_errors_total{cause="gathering"} 0
```

But this is the Text-based (not Protobuf) format.

It took some hunting, but I discovered the kube-state-metrics issue Expose metrics in Protobuf format #1603, which shows this request:

```
GET /metrics HTTP/1.1
Host: localhost:8080
User-Agent: Go-http-client/1.1
Accept: application/vnd.google.protobuf;proto=io.prometheus.client.MetricFamily;encoding=delimited;q=0.7,text/plain;version=0.0.4;q=0.3
```

The Accept header lists two alternatives separated by a comma. The q parameters are HTTP quality values that rank them (Protobuf at q=0.7 is preferred over text at q=0.3), and version=0.0.4 identifies the version of the text exposition format. So the caller accepts responses in either application/vnd.google.protobuf (Protobuf format) or text/plain (Text format), preferring Protobuf. For the Protobuf format, the proto is io.prometheus.client.MetricFamily, which makes sense because this message type is defined in Prometheus’ metrics.proto.
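To make the structure of that header concrete, here's a small stdlib-only Go sketch that splits the value on commas and parses each media range with mime.ParseMediaType. parseAccept and mediaRange are hypothetical names of my own. Splitting on commas is a simplification: it assumes no quoted parameter value contains a comma, which holds for this header but not for Accept headers in general.

```go
package main

import (
	"fmt"
	"mime"
	"strings"
)

// mediaRange is one comma-separated entry of an Accept header.
type mediaRange struct {
	MediaType string
	Params    map[string]string // includes "q" when present
}

// parseAccept splits an Accept header into media ranges.
// Simplification: assumes no quoted parameter value contains a comma.
func parseAccept(header string) ([]mediaRange, error) {
	var ranges []mediaRange
	for _, part := range strings.Split(header, ",") {
		mt, params, err := mime.ParseMediaType(strings.TrimSpace(part))
		if err != nil {
			return nil, err
		}
		ranges = append(ranges, mediaRange{MediaType: mt, Params: params})
	}
	return ranges, nil
}

func main() {
	const accept = "application/vnd.google.protobuf;proto=io.prometheus.client.MetricFamily;encoding=delimited;q=0.7,text/plain;version=0.0.4;q=0.3"
	ranges, err := parseAccept(accept)
	if err != nil {
		panic(err)
	}
	for _, r := range ranges {
		// q is the HTTP quality value: higher means more preferred
		fmt.Printf("%s q=%s %v\n", r.MediaType, r.Params["q"], r.Params)
	}
}
```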

Using the above Golang exporter, instead of the browser’s Accept: text/plain, we can use curl and specify application/vnd.google.protobuf:

```bash
curl \
  --silent \
  --verbose \
  --header "Accept: application/vnd.google.protobuf;proto=io.prometheus.client.MetricFamily;encoding=delimited" \
  localhost:2112/metrics
```

```
* Trying 127.0.0.1:2112...
* Connected to localhost (127.0.0.1) port 2112 (#0)
> GET /metrics HTTP/1.1
> Host: localhost:2112
> User-Agent: curl/7.81.0
> Accept: application/vnd.google.protobuf;proto=io.prometheus.client.MetricFamily;encoding=delimited
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< Content-Type: application/vnd.google.protobuf; proto=io.prometheus.client.MetricFamily; encoding=delimited; escaping=values
< Date: Thu, 07 Mar 2024 19:58:04 GMT
< Content-Length: 416
<
* Failure writing output to destination
* Closing connection 0
```

This succeeds (200), but curl refuses to write binary output to the terminal. You can use --output response.bin, redirect with > response.bin, or just pipe the result into xxd:

```
... | xxd

00000000: cc01 0a03 666f 6f12 0e54 6865 2066 6f6f ....foo..The foo
00000010: 206d 6574 7269 6318 0422 b201 3aaf 0108 metric.."..:...
00000020: e204 1143 331d 5b3e c1dd 4028 0631 0000 ...C3.[>..@(.1..
00000030: 0000 0000 f037 3800 6204 0819 1001 6204 .....78.b.....b.
00000040: 080a 100f 6204 0808 102b 6802 6800 6801 ....b....+h.h.h.
00000050: 6806 6805 6802 6800 6801 6802 6802 6803 h.h.h.h.h.h.h.h.
00000060: 6804 6803 6802 6801 6802 6802 6801 6801 h.h.h.h.h.h.h.h.
00000070: 6802 6802 6802 6801 6800 6806 6803 6806 h.h.h.h.h.h.h.h.
00000080: 6807 6802 6802 6805 680c 6802 6805 6804 h.h.h.h.h.h.h.h.
00000090: 6804 6804 6802 6800 6802 6801 6803 680a h.h.h.h.h.h.h.h.
000000a0: 6803 680a 6801 6806 6806 6809 6802 6810 h.h.h.h.h.h.h.h.
000000b0: 6802 6805 681e 6808 6805 680a 6803 6839 h.h.h.h.h.h.h.h9
000000c0: 7a0c 08e2 b4a8 af06 108e abdc a901 d201 z...............
000000d0: 0a24 7072 6f6d 6874 7470 5f6d 6574 7269 .$promhttp_metri
000000e0: 635f 6861 6e64 6c65 725f 6572 726f 7273 c_handler_errors
000000f0: 5f74 6f74 616c 124b 546f 7461 6c20 6e75 _total.KTotal nu
00000100: 6d62 6572 206f 6620 696e 7465 726e 616c mber of internal
00000110: 2065 7272 6f72 7320 656e 636f 756e 7465 errors encounte
00000120: 7265 6420 6279 2074 6865 2070 726f 6d68 red by the promh
00000130: 7474 7020 6d65 7472 6963 2068 616e 646c ttp metric handl
00000140: 6572 2e18 0022 2c0a 110a 0563 6175 7365 er...",....cause
00000150: 1208 656e 636f 6469 6e67 1a17 0900 0000 ..encoding......
00000160: 0000 0000 001a 0c08 e2b4 a8af 0610 d68d ................
00000170: e0a9 0122 2d0a 120a 0563 6175 7365 1209 ..."-....cause..
00000180: 6761 7468 6572 696e 671a 1709 0000 0000 gathering.......
00000190: 0000 0000 1a0c 08e2 b4a8 af06 10ad e1df ................
000001a0: a901
```

At this point, I naively (as it turned out) tried to pipe the results into protoc --decode, but it failed:

```bash
... | protoc --decode=io.prometheus.client.MetricFamily metrics.proto
```

I think (!?) this is because the response is a chunked series of protobuf-encoded MetricFamily messages.

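If this were a gRPC implementation, I suspect the response would be either a stream of MetricFamily messages or a unary response containing a repeated MetricFamily field. Over plain HTTP/1.1, though, the response body simply carries the individual messages one after the other (the response above has a Content-Length, so this isn’t HTTP chunked transfer encoding). protoc --decode expects exactly one message with no framing, so it can’t make sense of the concatenation.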

I learned subsequently that these are varint-delimited:

```
[SIZE-1][MESSAGE-1]
[SIZE-2][MESSAGE-2]
[SIZE-3][MESSAGE-3]
...
```
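Each SIZE is a Protobuf base-128 varint: every byte contributes its low 7 bits, least-significant group first, and a set high bit means another byte follows. Working through the first two bytes of the xxd dump, cc 01: 0xcc & 0x7f = 76 and the high bit is set, so the next byte continues; 0x01 contributes 1 << 7 = 128; 76 + 128 = 204. So the first message (the one starting 0a 03 "foo") is 204 bytes long, which puts the next size prefix at offset 2 + 204 = 0xce, where the dump shows d2 01 (210 bytes, the promhttp_metric_handler_errors_total family). The same arithmetic as a stdlib-only sketch, where decodeVarint is a hypothetical helper cross-checked against encoding/binary's Uvarint:

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// decodeVarint decodes a base-128 varint from the start of b and returns the
// value and the number of bytes consumed (0 if b ends mid-varint).
func decodeVarint(b []byte) (uint64, int) {
	var value uint64
	for i, c := range b {
		if i == binary.MaxVarintLen64 {
			return 0, 0 // too long to be a valid 64-bit varint
		}
		value |= uint64(c&0x7f) << (7 * uint(i)) // low 7 bits, least-significant group first
		if c&0x80 == 0 {                          // high bit clear: this was the last byte
			return value, i + 1
		}
	}
	return 0, 0
}

func main() {
	// Size prefixes from the xxd dump: 'cc 01' at offset 0x00 and 'd2 01' at offset 0xce
	for _, prefix := range [][]byte{{0xcc, 0x01}, {0xd2, 0x01}} {
		v, n := decodeVarint(prefix)
		std, stdN := binary.Uvarint(prefix)
		fmt.Printf("% x -> %d (%d bytes); binary.Uvarint agrees: %v\n", prefix, v, n, v == std && n == stdN)
	}
}
```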

There’s even a proposal protodelim: add delimited-message serialization support package and a Golang implementation protodelim (see later).

The issue Expose metrics in Protobuf format #1603 references prom2json, which is another tool I was unaware of. This works:

```bash
go install github.com/prometheus/prom2json/cmd/prom2json@latest

prom2json http://localhost:2112/metrics | jq -r .
```

```
[
  {
    "name": "foo",
    "help": "The foo metric",
    "type": "HISTOGRAM",
    "metrics": [
      {
        "count": "10",
        "sum": "523.0613213986003"
      }
    ]
  }
]
```

NOTE I was unable to get this working by piping the curl output into prom2json. The advantage of the curl approach is being able to explicitly request application/vnd.google.protobuf.

I cloned and debugged prom2json. It uses golang_protobuf_extensions (you guessed it, another library I was unaware of!).

Debugging the code helped me confirm the approach: read the response body, read a varint message size, then unmarshal that many bytes into a MetricFamily.

However, the code is functionally richer than I need in this case, so I simplified it:

```go
package main

import (
	"bufio"
	"flag"
	"fmt"
	"io"
	"log/slog"
	"mime"
	"net/http"
	"os"

	dto "github.com/prometheus/client_model/go"
	"google.golang.org/protobuf/encoding/protodelim"
)

const (
	protoMediaType string = "application/vnd.google.protobuf"
	protoPackage   string = "io.prometheus.client"
	protoMessage   string = "MetricFamily"
	encoding       string = "delimited"
)

var (
	metricsPath = flag.String("metrics", "http://localhost:2112/metrics", "URL of the Prometheus Exporter's metrics endpoint")
)

var (
	proto  string = fmt.Sprintf("%s.%s", protoPackage, protoMessage)
	accept string = fmt.Sprintf("%s;proto=%s;encoding=%s", protoMediaType, proto, encoding)
)

func main() {
	logger := slog.New(slog.NewJSONHandler(os.Stdout, nil))

	flag.Parse()

	rqst, err := http.NewRequest(http.MethodGet, *metricsPath, nil)
	if err != nil {
		logger.Error("unable to create new request", "err", err)
		os.Exit(1)
	}

	// Explicitly request the varint-delimited Protobuf exposition format
	rqst.Header.Add("Accept", accept)

	client := http.Client{}
	resp, err := client.Do(rqst)
	if err != nil {
		logger.Error("unable to do request", "err", err)
		os.Exit(1)
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		logger.Error("request failed", "status", resp.Status)
		os.Exit(1)
	}

	// Confirm the exporter responded with the format we asked for
	mediatype, params, err := mime.ParseMediaType(resp.Header.Get("Content-Type"))
	if err != nil {
		logger.Error("unable to parse 'Content-Type' header", "err", err)
		os.Exit(1)
	}
	if mediatype != protoMediaType {
		logger.Error("unexpected 'Content-Type' media type", "want", protoMediaType, "got", mediatype)
		os.Exit(1)
	}
	if params["proto"] != proto {
		logger.Error("unexpected 'Content-Type' proto", "want", proto, "got", params["proto"])
		os.Exit(1)
	}
	if params["encoding"] != encoding {
		logger.Error("unexpected 'Content-Type' encoding", "want", encoding, "got", params["encoding"])
		os.Exit(1)
	}

	// protodelim needs an io.ByteReader to read each varint size prefix;
	// bufio.Reader provides one. Create it once, outside the loop, so
	// buffered bytes aren't lost between messages.
	r := bufio.NewReader(resp.Body)
	opts := protodelim.UnmarshalOptions{
		MaxSize: -1, // disable the per-message size limit
	}

	for {
		m := &dto.MetricFamily{}
		err = opts.UnmarshalFrom(r, m)
		if err == io.EOF {
			// io.EOF means the stream ended cleanly between messages
			break
		}
		if err != nil {
			// The stream is out of sync now, so there's no safe way to continue
			logger.Error("unable to unmarshal message", "err", err)
			os.Exit(1)
		}
		logger.Info("Message", "m", m)
	}
}
```

Yields:

```
{
  "time":"2024-03-07T00:00:00.00000000-08:00",
  "level":"INFO",
  "msg":"Message",
  "m":{
    "name":"foo",
    "help":"The foo metric",
    "type":4,
    "metric":[{
      "histogram":{
        "sample_count":10,
        "sample_sum":523.0613213986003,
        "created_timestamp":{
          "seconds":1709769600,
          "nanos":00000000
        },
        "schema":3,
        "zero_threshold":0.0,
        "zero_count":0,
        "positive_span":[{
          "offset":23,
          "length":1
        },{
          "offset":12,
          "length":1
        },{
          "offset":5,
          "length":12
        }],
        "positive_delta": [1,0,0,0,-1,1,0,-1,0,1,-1,0,2,-1]
      }
    }]
  }
}
```

The JSON schema represented by MetricFamily is not the same as that used by the Prometheus HTTP API:

```bash
curl \
  --silent \
  --get \
  --data-urlencode "query=foo{}" \
  http://localhost:9090/api/v1/query \
| jq -r .
```

```
{
  "status": "success",
  "data": {
    "resultType": "vector",
    "result": [
      {
        "metric": {
          "__name__": "foo",
          "instance": "localhost:2112",
          "job": "foo"
        },
        "histogram": [
          1709769600.000,
          {
            "count": "10",
            "sum": "523.0613213986003",
            "buckets": [...]
          }
        ]
      }
    ]
  }
}
```

If you want to scrape the Native Histogram exporter with Prometheus, you’ll need to create a prometheus.yml and restart the server configured to use it:

prometheus.yml:

```yaml
scrape_configs:
  - job_name: "foo"
    static_configs:
      - targets: ["localhost:2112"]
    metric_relabel_configs:
      - source_labels: [__name__]
        regex: ^foo
        action: keep
```

And:

```bash
podman run \
  --interactive --tty --rm \
  --net=host \
  --volume=${PWD}/prometheus.yml:/etc/prometheus/prometheus.yml \
  prom/prometheus:v2.50.1 \
    --config.file=/etc/prometheus/prometheus.yml \
    --enable-feature=native-histograms \
    --web.enable-lifecycle
```

NOTE For the containerized Prometheus to access the local Go process, it’s easiest to bind the container to the host network (--net=host), which removes the need for --publish=9090:9090/tcp.

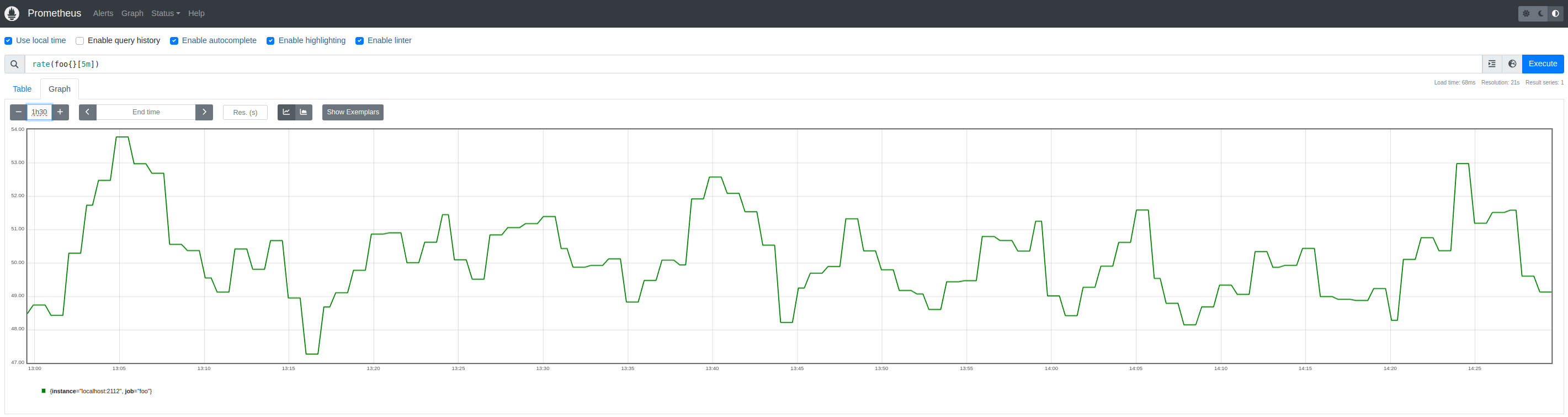

It is easier to interact with Native Histograms from the Prometheus Web UI:

```
rate(foo{}[5m])
```

That’s all!