Prometheus HTTP Service Discovery of Cloud Run services

Some time ago, I wrote about using Prometheus Service Discovery w/ Consul for Cloud Run and also Scraping metrics exposed by Google Cloud Run services that require authentication. Both solutions remain viable but they didn’t address another use case for Prometheus and Cloud Run services that I have with a “thing” that I’ve been building.

In this scenario, I want to:

- Configure Prometheus to scrape Cloud Run service metrics

- Discover Cloud Run services dynamically

- Authenticate to Cloud Run using Firebase Auth ID tokens

These requirements, and one other, present several challenges:

- Prometheus Service Discovery alternatives (e.g. file and the new http) are statically configured

- My Service Discovery endpoint and the services discovered require authentication

- ID tokens expire and need to be refreshed (deterministically)

- My “thing” expects users to uniquely identify requests

I’ll explore how I solved these challenges in detail below but, for those who are impatient, the solution is:

- Configure Prometheus to use HTTP-based Service Discovery using a proxy

- The proxy adds dynamically-generated headers to the proxied requests including Authorization

- A Cloud Run discovery service that authenticates then enumerates a user’s Cloud Run services and returns Prometheus targets

- Cloud Run target services that authenticate and serve Prometheus metrics

IMPORTANT For simplicity, the proxy listens on an insecure (non-TLS) connection (uses http). The proxy bumps outgoing (proxied) requests to secure (TLS) connections (uses https). The proxy will be run locally (on the same host in many cases) and there’s confidence in its security. Using TLS with the proxy would require management of x509 certificates (possibly self-signed). But, because the proxy needs to add e.g. an Authorization header to the proxied request, it would either need to terminate the TLS connection or operate as a MITM, and neither is a good choice.

Let’s work from the end-user’s perspective forwards:

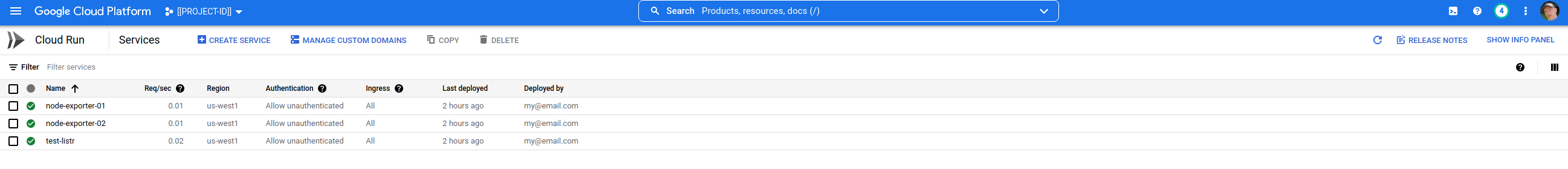

It doesn’t look very interesting but the two endpoints node-exporter-XX are Cloud Run services (for testing, they’re both Node Exporters) that are published as allow unauthenticated but (in production) are services that authenticate requests for valid Firebase ID tokens.

The service listr uses Google’s Cloud Run SDK to enumerate Cloud Run services. It also expects and validates a Firebase ID token provided with an Authorization header.

Prometheus

The Prometheus configuration is straightforward:

    global:
      scrape_interval: 1m
      evaluation_interval: 1m

    scrape_configs:
      - job_name: "http-proxy"
        scheme: http
        proxy_url: http://0.0.0.0:5555
        http_sd_configs:
          - url: http://test-listr-plsm3l32oa-uw.a.run.app
            proxy_url: http://0.0.0.0:5555

NOTE The proxy is referenced twice. Prometheus’ scrape requests must go through it (to be authenticated) and the scrapes of the list of HTTP targets must go through it too (to be authenticated).

NOTE As explained above, the proxy listens on an insecure (non-TLS) connection and so URL references in prometheus.yml use http not https. Because the proxy upgrades the outgoing request to https, in this example, although http://test-listr-plsm3l32oa-uw.a.run.app is specified, the actual call will be made (correctly) against https://test-listr-plsm3l32oa-uw.a.run.app.

Proxy

Because the proxy listens on an insecure (non-TLS) connection but Cloud Run (only) provides secure (TLS) connections, the first task of the proxy is to bump the connection to https. The proxy then creates a NewRequest using the incoming (proxied) request’s method, body and the upgraded URL (http:// becomes https://).

The motivation for the proxy is that it injects the Authorization header into Cloud Run requests to authenticate them. In my implementation, the proxy uses the ID token produced by Firebase Auth to authenticate users’ requests. One user can have zero or more (!) Cloud Run services and the implementation ensures that a user can only access their own Cloud Run services. This is achieved (described below) by having both listr (a Cloud Run service that lists the user’s Cloud Run services) and the Cloud Run services returned by listr verify the user’s ID token. So, the proxy acquires the user’s ID token and adds it as a Bearer token in an Authorization header.

ASIDE The OIDC Token Proxy also authenticates Prometheus scrapes of Cloud Run services but it relies on locally stored credentials (from a Service Account) to generate the appropriate JWT to authenticate a single (!) Cloud Run service. Unfortunately, the JWT audience is service-specific with Cloud Run and this means that the OIDC Token Proxy can only authenticate a single Cloud Run service (target).

In my solution, there are additional headers added by the proxy that are used by the backend to validate users. The proxy adds these too.

Now the proxy can make (Do) the request. If this errors, this is returned to the caller (Prometheus). Otherwise, the proxy copies the response into the caller’s response.

    import (
        "crypto/tls"
        "io"
        "net/http"
    )

    // endpoint, log and getBearer are defined elsewhere

    func main() {
        // Create Transport and Client once so that connections are reused
        // Use something other than InsecureSkipVerify in production
        transport := &http.Transport{
            TLSClientConfig: &tls.Config{
                InsecureSkipVerify: true,
            },
        }
        client := &http.Client{
            Transport: transport,
        }

        proxy := func() http.HandlerFunc {
            return func(w http.ResponseWriter, rqst *http.Request) {
                // Upgrade request
                rqst.URL.Scheme = "https"

                // URL being proxied
                url := rqst.URL.String()

                pRqst, err := http.NewRequest(rqst.Method, url, rqst.Body)
                if err != nil {
                    msg := "unable to create proxied request"
                    http.Error(w, msg, http.StatusInternalServerError)
                    return
                }

                // Add Authorization header
                // Bearer acquisition is defined elsewhere
                pRqst.Header.Add("Authorization", getBearer())

                // Invoke proxied request
                pResp, err := client.Do(pRqst)
                if err != nil {
                    msg := "unable to execute proxied request"
                    http.Error(w, msg, http.StatusInternalServerError)
                    return
                }
                defer pResp.Body.Close()

                // Copy the headers
                func(dst, src http.Header) {
                    for k, vv := range src {
                        for _, v := range vv {
                            dst.Add(k, v)
                        }
                    }
                }(w.Header(), pResp.Header)

                // Use the proxied response code as the caller's response code
                w.WriteHeader(pResp.StatusCode)

                // Copy the body
                io.Copy(w, pResp.Body)
            }
        }()

        srv := &http.Server{
            Addr:    *endpoint,
            Handler: proxy,
        }

        log.Error(
            srv.ListenAndServe(),
            "server error",
        )
    }

To reiterate, because it’s slightly confusing: the proxy is used for 2 purposes in my application and as shown in this post. In both cases, it adds Authorization headers that include the user’s ID token in order to authenticate the requests. First, the proxy is used to make requests to the listr service, which returns the user’s set of Cloud Run services. Second, the proxy is applied to each of these targets when Prometheus scrapes them for their metrics.

Cloud Run services

Authentication

Authentication (and authorization) are handled by the Cloud Run services using an interceptor that’s applied to all incoming requests and is implemented as an http.Handler. For this reason, the services must allow unauthenticated traffic (because the service itself is performing the authentication and authorization).

I’ve removed extraneous code and error-handling to focus on the intent, which is to call VerifyIDToken and act upon the result:

    func(w http.ResponseWriter, req *http.Request) {
        // Gets first if any Authorization header
        authValue := req.Header.Get("Authorization")

        // Rejects requests that have no "Bearer TOKEN" value
        const prefix = "Bearer "
        if !strings.HasPrefix(authValue, prefix) {
            w.WriteHeader(http.StatusUnauthorized)
            return
        }

        // Extracts TOKEN (the ID token) from "Bearer TOKEN"
        idToken := strings.TrimPrefix(authValue, prefix)

        // The decoded token is not needed here, only whether verification succeeds
        _, err := authClient.VerifyIDToken(req.Context(), idToken)
        if err != nil {
            w.WriteHeader(http.StatusUnauthorized)
            return
        }

        // Invoke next handler
        next.ServeHTTP(w, req)
    }

NOTE In my app, once a user has been authenticated (see above), there’s another interceptor that determines whether the user is authorized.

Prometheus Service Discovery targets

The Prometheus documentation covers HTTP-based Service Discovery in two places:

Prometheus expects to receive JSON-formatted (static config) targets in response to its request to the handler:

    [
      {
        "targets": [ "<host>", ... ],
        "labels": {
          "<labelname>": "<labelvalue>", ...
        }
      },
      ...
    ]

NOTE targets is a list (of hosts) while labels is a map (of key:value pairs). There’s freedom to associate a set of targets with the same set of labels.

This can be modeled:

    // StaticConfigs is a type that represents the file format of Prometheus File|HTTP Service Discovery config
    type StaticConfigs []StaticConfig

    // NewStaticConfigs is a function that returns a new StaticConfigs
    func NewStaticConfigs() StaticConfigs {
        return []StaticConfig{}
    }

    // Add is a method that adds a StaticConfig to StaticConfigs
    func (c *StaticConfigs) Add(x StaticConfig) {
        *c = append(*c, x)
    }

    // JSON is a method that converts StaticConfigs to JSON
    func (c *StaticConfigs) JSON() ([]byte, error) {
        return json.Marshal(c)
    }

    // StaticConfig is a type that represents a single entry within the Prometheus File|HTTP Service Discovery config
    type StaticConfig struct {
        Targets []string          `json:"targets" yaml:"targets"`
        Labels  map[string]string `json:"labels" yaml:"labels"`
    }

NOTE These types (and methods) must be available as part of Prometheus too and it may be better to import them from Prometheus rather than recreate them here.
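The handler that returns these targets to Prometheus is not shown in this post. A minimal sketch of one follows, assuming a hypothetical `targetsHandler` that is given the configs up front (the real service would build them per request from the user's Cloud Run services). Prometheus expects a 200 response with a JSON content type, and the sketch returns an empty list rather than null when there are no targets.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// StaticConfig mirrors the type defined above so that the sketch is self-contained
type StaticConfig struct {
	Targets []string          `json:"targets"`
	Labels  map[string]string `json:"labels"`
}

// targetsHandler is a function that returns a handler that serves the
// configs in the HTTP Service Discovery format
func targetsHandler(configs []StaticConfig) http.HandlerFunc {
	return func(w http.ResponseWriter, _ *http.Request) {
		if configs == nil {
			// A nil slice would be marshaled as null rather than []
			configs = []StaticConfig{}
		}
		body, err := json.Marshal(configs)
		if err != nil {
			http.Error(w, "unable to marshal targets", http.StatusInternalServerError)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		w.Write(body)
	}
}

// render is a function that invokes the handler in-process and
// returns the response's content type and body
func render(h http.Handler) (string, string) {
	w := httptest.NewRecorder()
	h.ServeHTTP(w, httptest.NewRequest(http.MethodGet, "/", nil))
	return w.Header().Get("Content-Type"), w.Body.String()
}

func main() {
	// The host name and label are placeholders
	configs := []StaticConfig{{
		Targets: []string{"node-exporter-01.example.com"},
		Labels:  map[string]string{"env": "test"},
	}}

	contentType, body := render(targetsHandler(configs))
	fmt.Println(contentType)
	fmt.Println(body)
}
```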

One noteworthy fact is that the Cloud Run service has region-specific endpoints. Generally to interact with Cloud Run services through the SDKs, it’s necessary to first determine which region the service is in. See Service endpoint.

However (!) there is an undocumented method projects.locations.services.list that can be used to enumerate all the services within a project. This list method is used by gcloud and it achieves the enumeration across all regions using - as a wildcard for the location component of the method’s parent string value (projects/{ProjectID}/locations/-).

This is the method that I’m using to retrieve the list of targets. These are converted to StaticConfig values and added (using Add) to StaticConfigs per above, before being converted to JSON and returned by the HTTP handler:

    // List is a method that lists Cloud Run services that match the labelSelector
    func (c *Client) List(labelSelector string) ([]*run.Service, error) {
        rqst := c.Service.Projects.Locations.Services.List(c.Parent()).LabelSelector(labelSelector)
        resp, err := rqst.Do()
        if err != nil {
            return nil, err
        }

        return resp.Items, nil
    }

    // Parent is a method that returns a wildcarded (!) parent
    // gcloud uses an undocumented list method that uses "-" to represent all locations
    func (c *Client) Parent() string {
        return fmt.Sprintf("projects/%s/locations/-", c.Project)
    }
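The conversion from listed services to targets is described but not shown. One way it might look is sketched below, under assumptions: `service` is a hypothetical, simplified stand-in for the SDK's service type (only a name and a URL), `toStaticConfigs` is a hypothetical helper, and the choice of a single "service" label is illustrative. Each target is the host taken from the service's URL because the scheme is supplied by the Prometheus configuration.

```go
package main

import (
	"fmt"
	"net/url"
)

// StaticConfig mirrors the type defined above so that the sketch is self-contained
type StaticConfig struct {
	Targets []string          `json:"targets"`
	Labels  map[string]string `json:"labels"`
}

// service is a hypothetical, simplified stand-in for the SDK's service type
type service struct {
	Name string
	URL  string
}

// toStaticConfigs is a function that converts services into one
// StaticConfig per service using the host of the service's URL as the target
func toStaticConfigs(services []service) ([]StaticConfig, error) {
	configs := make([]StaticConfig, 0, len(services))
	for _, s := range services {
		u, err := url.Parse(s.URL)
		if err != nil {
			return nil, fmt.Errorf("service %q: %w", s.Name, err)
		}
		if u.Host == "" {
			return nil, fmt.Errorf("service %q: URL %q has no host", s.Name, s.URL)
		}
		configs = append(configs, StaticConfig{
			Targets: []string{u.Host},
			Labels:  map[string]string{"service": s.Name},
		})
	}
	return configs, nil
}

func main() {
	// The names and URLs are placeholders
	services := []service{
		{Name: "node-exporter-01", URL: "https://node-exporter-01.example.com"},
		{Name: "node-exporter-02", URL: "https://node-exporter-02.example.com"},
	}

	configs, err := toStaticConfigs(services)
	if err != nil {
		panic(err)
	}
	for _, c := range configs {
		fmt.Println(c.Targets[0], c.Labels["service"])
	}
}
```

One StaticConfig per service keeps each target's labels independent; services that share labels could instead be grouped into a single entry with several targets.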

Prometheus metrics

Finally, the designated (labelSelector) services are returned by the discovery service to Prometheus where they are scraped and available for querying|graphing etc.

Calls to these metrics endpoint services are authenticated using the handler described previously.

Conclusion

Prometheus’ HTTP Service Discovery mechanism is a useful and general-purpose way to generate scrape targets dynamically for Prometheus. In addition to HTTP Service Discovery, I implemented File-based Service Discovery with the addition of a Save method to StaticConfigs.

If I had no need to include authentication and other headers, I could have avoided using the proxy. But, because Prometheus’ generic (i.e. File, HTTP) service discovery mechanisms don’t provide any mechanism for extending their functionality to achieve this, I am using a proxy. The proxy runs in the client’s environment and is able to access a user’s credentials and construct the additional headers that are added to the Prometheus service discovery requests.

The result is that users are able to securely (authenticated and authorized) use metrics from their Cloud Run services.