Dapr

It’s a good name; I read it as “dapper” but I frequently type “darp” :-(

I was interested to read that Dapr is now v1.0 and decided to check it out. I was initially confused about the difference between Dapr and service mesh functionality. But, having used Dapr, it appears to be more focused on aiding the development of (cloud-native) (distributed) apps by providing developers with abstractions for e.g. service discovery, eventing and observability, whereas service meshes feel (!) more oriented towards simplifying the deployment of existing apps. Both use the concept of proxies, deployed alongside app components (as sidecars on Kubernetes), to provide their functionality to apps.

I started with the hello-kubernetes quickstart. You’ll either need to clone this repo or replace references to ./deploy with https://raw.githubusercontent.com/dapr/quickstarts/master/hello-kubernetes/deploy in the kubectl apply --filename=./deploy commands below.

I like MicroK8s and used it in the following. I’m also trying to be more disciplined in using non-default namespaces in my clusters to ensure that apps work outside of the default namespace and, more importantly, because non-default namespaces can be deleted thereby cleaning up.

Dapr requires a more recent version of Helm (version?) than is shipped with MicroK8s 1.20.0 and so I found it easiest to use Helm in a container.

NAMESPACE="dapr-system"

kubectl create namespace ${NAMESPACE}

And then, something of the form:

alias helm3="docker run \

--interactive --tty --rm \

--volume=${HOME}/.kube:/root/.kube \

--volume=${PWD}/.helm:/root/.helm \

--volume=${PWD}/.config/helm:/root/.config/helm \

--volume=${PWD}/.cache/helm:/root/.cache/helm \

alpine/helm:3.5.2"

NOTE Curiously, I’ve been having issues with Dapr not functioning and, when e.g. checking the dapr-sidecar-injector logs, receiving TLS handshake errors (always on 10.0.0.226!?). Unfortunately, this issue only arises when you try to deploy a Dapr app (e.g. a Deployment with the Dapr annotations). Uninstalling and reinstalling Dapr appears to overcome (!?) this issue.

kubectl logs deployment/dapr-sidecar-injector \

--namespace=${NAMESPACE}

2021/02/20 19:21:18 http: TLS handshake error from 10.0.0.226:46102: remote error: tls: bad certificate

What’s also curious is that the error is always for 10.0.0.226 and there’s nothing running on that IP:

kubectl get endpoints \

--namespace=${NAMESPACE}

helm3 repo list

helm3 repo add dapr https://dapr.github.io/helm-charts/

helm3 repo update

helm3 install dapr dapr/dapr --namespace=${NAMESPACE} --wait

NOTE The helm3 alias above mounts your kubeconfig directory (${HOME}/.kube) and 3 Helm-specific directories (.helm, .config/helm, .cache/helm) into the container. These permit Helm to deploy to your cluster and to persist its state (e.g. repos) across invocations.

Yields:

NAME: dapr

LAST DEPLOYED: Thu Feb 19 12:00:00 2021

NAMESPACE: dapr-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Thank you for installing Dapr: High-performance, lightweight serverless runtime for cloud and edge

Your release is named dapr.

To get started with Dapr, we recommend using our quickstarts:

https://github.com/dapr/quickstarts

For more information on running Dapr, visit:

https://dapr.io

Then you can:

kubectl get all --namespace=${NAMESPACE}

NAME READY STATUS

pod/dapr-dashboard-65cc964bdd-ckv8h 1/1 Running

pod/dapr-operator-754ffcd-cx7sk 1/1 Running

pod/dapr-placement-server-0 1/1 Running

pod/dapr-sentry-f4fd59d65-v89jk 1/1 Running

pod/dapr-sidecar-injector-5cd4454dcc-h5445 1/1 Running

NAME TYPE PORT(S)

service/dapr-api ClusterIP 80/TCP

service/dapr-dashboard ClusterIP 8080/TCP

service/dapr-placement-server ClusterIP 50005/TCP,8201/TCP

service/dapr-sentry ClusterIP 80/TCP

service/dapr-sidecar-injector ClusterIP 443/TCP

NAME READY UP-TO-DATE AVAIL

deployment.apps/dapr-dashboard 1/1 1 1

deployment.apps/dapr-operator 1/1 1 1

deployment.apps/dapr-sentry 1/1 1 1

deployment.apps/dapr-sidecar-injector 1/1 1 1

NAME DESIRED

replicaset.apps/dapr-dashboard-65cc964bdd 1

replicaset.apps/dapr-operator-754ffcd 1

replicaset.apps/dapr-sentry-f4fd59d65 1

replicaset.apps/dapr-sidecar-injector-5cd4454dcc 1

NAME READY

statefulset.apps/dapr-placement-server 1/1

NAME

configuration.dapr.io/daprsystem

Then install Redis:

helm3 repo add bitnami https://charts.bitnami.com/bitnami

helm3 repo update

helm3 install redis bitnami/redis --namespace=${NAMESPACE}

NOTE The instructions were unclear but I installed Redis into the same namespace (${NAMESPACE}) as Dapr. It’s likely this isn’t required.

Yields:

NAME: redis

LAST DEPLOYED: Thu Feb 19 12:00:00 2021

NAMESPACE: dapr-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

Redis(TM) can be accessed via port 6379 on the following DNS names from within your cluster:

redis-master.dapr-system.svc.cluster.local for read/write operations

redis-slave.dapr-system.svc.cluster.local for read-only operations

To get your password run:

export REDIS_PASSWORD=$(\

kubectl get secret redis \

--namespace=dapr-system \

--output=jsonpath="{.data.redis-password}" \

| base64 --decode)

To connect to your Redis(TM) server:

1. Run a Redis(TM) pod that you can use as a client:

kubectl run redis-client --rm --tty -i --restart='Never' \

--namespace dapr-system \

--env REDIS_PASSWORD=$REDIS_PASSWORD \

--image docker.io/bitnami/redis:6.0.10-debian-10-r19 \

-- bash

2. Connect using the Redis(TM) CLI:

redis-cli -h redis-master -a $REDIS_PASSWORD

redis-cli -h redis-slave -a $REDIS_PASSWORD

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward service/redis-master \

--namespace=dapr-system 6379:6379 &

redis-cli -h 127.0.0.1 -p 6379 -a $REDIS_PASSWORD

The Helm install for Redis creates a Secret (redis) containing the Redis password. You need only update the redis-master’s DNS in the hello-kubernetes quickstart’s redis.yaml file in the deploy directory. You can:

sed \

"s|redis-master:6379|redis-master.${NAMESPACE}.svc.cluster.local:6379|g" \

./deploy/redis.yaml \

| kubectl apply --filename=- \

--namespace=${NAMESPACE}
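The sed substitution above is just a string replacement on the manifest. A minimal Python sketch of the same rewrite, assuming the manifest contains the short host redis-master:6379 exactly as in the Quickstart's redis.yaml (qualify_redis_host is a name I made up for illustration):

```python
def qualify_redis_host(manifest: str, namespace: str) -> str:
    """Replace the short Redis host with its cluster-internal DNS name."""
    short = "redis-master:6379"
    full = f"redis-master.{namespace}.svc.cluster.local:6379"
    # The qualified name does not contain the short form, so applying
    # this twice leaves the manifest unchanged.
    return manifest.replace(short, full)


if __name__ == "__main__":
    sample = "- name: redisHost\n  value: redis-master:6379\n"
    print(qualify_redis_host(sample, "dapr-system"))
```

The output would still need to be piped to kubectl apply --filename=- as in the sed version.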

This manifest creates a Dapr Component (an instance of one of Dapr’s CRDs) called statestore:

kubectl get component/statestore \

--namespace=${NAMESPACE} \

--output=yaml

Yields:

apiVersion: dapr.io/v1alpha1

auth:

secretStore: kubernetes

kind: Component

metadata:

name: statestore

namespace: dapr-system

spec:

metadata:

- name: redisHost

value: redis-master.dapr-system.svc.cluster.local:6379

- name: redisPassword

secretKeyRef:

key: redis-password

name: redis

type: state.redis

version: v1

Another CRD that Dapr creates is Configuration; the Helm install includes an instance called daprsystem:

kubectl get configuration/daprsystem \

--namespace=${NAMESPACE} \

--output=yaml

Yields:

apiVersion: dapr.io/v1alpha1

kind: Configuration

metadata:

annotations:

meta.helm.sh/release-name: dapr

meta.helm.sh/release-namespace: dapr-system

labels:

app.kubernetes.io/managed-by: Helm

name: daprsystem

namespace: dapr-system

spec:

metric:

enabled: true

mtls:

allowedClockSkew: 15m

enabled: true

workloadCertTTL: 24h

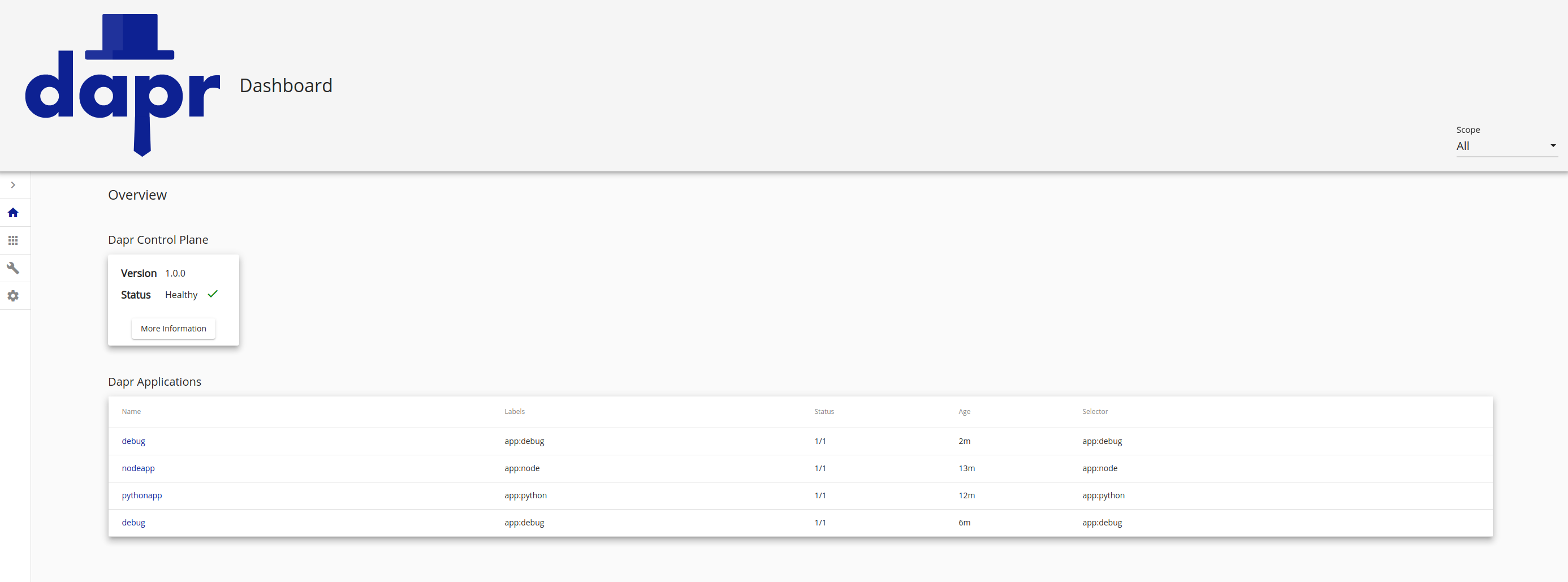

Dapr includes a web-based dashboard that I found quite useful. You can port-forward to it:

kubectl port-forward service/dapr-dashboard \

--namespace=${NAMESPACE} 8080:8080

And then browse http://localhost:8080

The Quickstart then has you install a Node.JS “server” app:

kubectl apply \

--filename=./deploy/node.yaml \

--namespace=${NAMESPACE}

NOTE Before proceeding, I recommend you check the dapr-sidecar-injector logs at this point to determine whether you’re receiving the certificate error I mentioned above. If you are, delete node.yaml, (helm) uninstall Dapr and try installing them both again. You do not need to delete Redis.

To confirm that the Deployment has succeeded including that Dapr has injected a sidecar into the Deployment’s Pods, you can:

kubectl get pods \

--selector=app=node \

--namespace=${NAMESPACE} \

--output=jsonpath="{.items[*].spec.containers[*].image}"

You should get 2 container images in the result (albeit output on one line):

dapriosamples/hello-k8s-node:latest

docker.io/daprio/daprd:1.0.0
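If you want to script this check, the one-line jsonpath output can be split on whitespace. A rough sketch, assuming the sidecar image's final path segment is named daprd as in the output above (has_dapr_sidecar is a hypothetical helper, not part of any Dapr tooling):

```python
def has_dapr_sidecar(jsonpath_output: str) -> bool:
    """Return True if any listed image's final path segment is 'daprd'."""
    for image in jsonpath_output.split():
        # "docker.io/daprio/daprd:1.0.0" -> "daprd:1.0.0" -> "daprd"
        name = image.rsplit("/", 1)[-1].split(":", 1)[0]
        if name == "daprd":
            return True
    return False


if __name__ == "__main__":
    output = "dapriosamples/hello-k8s-node:latest docker.io/daprio/daprd:1.0.0"
    print(has_dapr_sidecar(output))
```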

NOTE A side-effect of the injector is that the Deployment will not include daprd; you can’t kubectl get or kubectl describe the Deployment to see the daprd added.

The server has a simple API:

| Path | Method | Description |
|---|---|---|
| /order | GET | Gets the most recent order |
| /neworder | POST | Adds a new order |
| /ports | GET | Gets Dapr’s HTTP and gRPC ports |

Because the app’s Deployment includes Dapr annotations (see below), when applied to the cluster, Dapr detected the Deployment and injected a sidecar into the Pods:

annotations:

dapr.io/enabled: "true"

dapr.io/app-id: "nodeapp"

dapr.io/app-port: "3000"

NOTE In the above, the app-id of nodeapp just so happens to be the same name as the Kubernetes Deployment but it could be something entirely different. The app-port tells Dapr which port it should route traffic to (see below).

We can get logs for the Node.JS app:

kubectl logs deployment/nodeapp \

--container=node \

--namespace=${NAMESPACE}

Node App listening on port 3000!

And, logs for the Dapr (daemon) sidecar with:

kubectl logs deployment/nodeapp \

--container=daprd \

--namespace=${NAMESPACE}

msg="starting Dapr Runtime -- version 1.0.0 -- commit 6314733"

msg="log level set to: info"

msg="metrics server started on :9090/"

msg="loading default configuration"

msg="kubernetes mode configured"

msg="app id: foo"

msg="mTLS enabled. creating sidecar authenticator"

msg="trust anchors and cert chain extracted successfully"

msg="authenticator created"

msg="Initialized name resolution to kubernetes"

msg="component loaded. name: kubernetes, type: secretstores.kubernetes/v1"

msg="waiting for all outstanding components to be processed"

msg="all outstanding components processed"

msg="enabled gRPC tracing middleware"

msg="enabled gRPC metrics middleware"

msg="API gRPC server is running on port 50001"

msg="enabled metrics http middleware"

msg="enabled tracing http middleware"

msg="http server is running on port 3500"

msg="The request body size parameter is: 4"

msg="enabled gRPC tracing middleware"

msg="enabled gRPC metrics middleware"

msg="sending workload csr request to sentry"

msg="certificate signed successfully"

msg="internal gRPC server is running on port 50002"

msg="application protocol: http. waiting on port 3000. This will block until the app is listening on that port."

msg="starting workload cert expiry watcher. current cert expires on: 2021-02-20 19:01:33 +0000 UTC"

msg="application discovered on port 3000"

msg="application configuration loaded"

msg="actor runtime started. actor idle timeout: 1h0m0s. actor scan interval: 30s"

msg="dapr initialized. Status: Running. Init Elapsed 28.082543ms"

msg="placement tables updated, version: 0"

What isn’t clear from the Quickstart is that the Dapr daemon (daprd) is the conduit through which an application (in one Pod) talks to the Dapr system.

Here’s a simple proof:

echo '

apiVersion: apps/v1

kind: Deployment

metadata:

name: debug

labels:

app: debug

spec:

replicas: 1

selector:

matchLabels:

app: debug

template:

metadata:

labels:

app: debug

annotations:

dapr.io/enabled: "true"

dapr.io/app-id: "debug"

spec:

containers:

- name: busybox

image: radial/busyboxplus:curl

command:

- ash

- -c

- "while true; do sleep 30; done"

' | kubectl apply --filename=- \

--namespace=${NAMESPACE}

This creates a Pod for us that we can use to evaluate Dapr’s API:

kubectl exec deployment/debug \

--stdin --tty \

--container busybox \

--namespace=${NAMESPACE} \

-- ash

And then from within the shell:

curl \

--request GET \

http://localhost:3500/v1.0/invoke/nodeapp/method/ports

{"DAPR_HTTP_PORT":"3500","DAPR_GRPC_PORT":"50001"}

curl \

--request GET \

http://localhost:3500/v1.0/metadata

{"id":"ashapp","actors":[],"extended":{},"components":[{"name":"statestore","type":"state.redis","version":"v1"}]}

The API is documented here

NOTE The references to localhost that we’re using refer to the network namespace within the Pod. The fact that DAPR_HTTP_PORT was returned as 3500 helps us understand that we’re talking to the daprd container (Dapr) within the Pod to access this API. And it is able to access (proxy) e.g. other services (nodeapp) on our behalf.
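The invoke URLs used with curl all follow one pattern: the local sidecar's port, then the target app's id, then the method. A minimal sketch of that pattern in Python, using only the standard library. The function names are mine, and the port default of 3500 is taken from the output above; invoke_get would only work from inside a Pod that has a Dapr sidecar:

```python
import json
import urllib.request


def invoke_url(app_id: str, method: str, port: int = 3500) -> str:
    """Build the sidecar URL that invokes `method` on the app `app_id`."""
    return f"http://localhost:{port}/v1.0/invoke/{app_id}/method/{method}"


def invoke_get(app_id: str, method: str, timeout: float = 2.0):
    """GET a method via the local daprd and decode the JSON response."""
    url = invoke_url(app_id, method)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    print(invoke_url("nodeapp", "ports"))
```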

NOTE I’m deploying debug to Namespace ${NAMESPACE} but, if you deploy debug to a different namespace (e.g. test), you will need to postfix nodeapp with the namespace (e.g. /invoke/nodeapp.dapr-system/...) in order to access it with the API.

Per the Node.JS app’s API, we can also add a neworder using:

curl \

--request POST \

--header "Content-type: application/json" \

--data '{"data":{"orderId":1000}}' \

--write-out '%{response_code}' \

http://localhost:3500/v1.0/invoke/nodeapp/method/neworder

NOTE Because we’re POST’ing JSON to the endpoint, we must include the header Content-type: application/json.

NOTE --request POST is redundant (curl defaults to POST when --data is given) but is included to be explicit.

This should yield 200 (HTTP OK)
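For comparison, here is roughly the same request built with Python's standard library. This is a sketch rather than the Quickstart's code: new_order_request is a hypothetical helper, and sending it (urllib.request.urlopen) would only succeed from a Pod with a Dapr sidecar:

```python
import json
import urllib.request


def new_order_request(order_id: int, port: int = 3500) -> urllib.request.Request:
    """Build (but do not send) the POST that adds a new order via daprd."""
    body = json.dumps({"data": {"orderId": order_id}}).encode("utf-8")
    return urllib.request.Request(
        url=f"http://localhost:{port}/v1.0/invoke/nodeapp/method/neworder",
        data=body,
        # The JSON body requires the matching content type header
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = new_order_request(1000)
    print(req.get_method(), req.full_url)
```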

After deploying the Node.JS app, the Dapr dashboard will include the app on its homepage and you can check its metadata, configurations, logs etc.

There’s a Python app included in the Quickstart which repeatedly POSTs neworder:

kubectl apply --filename=./deploy/python.yaml \

--namespace=${NAMESPACE}

Initially, the Python app may log a “Failed to establish a new connection” error but it should soon connect via Dapr (!) to the Node.JS app:

kubectl logs deployment/pythonapp \

--container=python \

--namespace=${NAMESPACE}

HTTPConnectionPool(host='localhost', port=3500): Max retries exceeded with url: /v1.0/invoke/nodeapp/method/neworder (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f1935152cd0>: Failed to establish a new connection: [Errno 111] Connection refused'))

HTTP 500 => {"errorCode":"ERR_DIRECT_INVOKE","message":"invoke API is not ready"}
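Those early errors are just the sidecar (or the target app) not being ready yet, so a caller needs to tolerate a few failures. A minimal retry sketch, assuming connection-style failures surface as OSError (true for urllib's errors). This is not the Quickstart's code; call_with_retry and its parameters are names I made up:

```python
import time


def call_with_retry(call, attempts=5, delay=1.0, sleep=time.sleep):
    """Call `call()` until it succeeds, at most `attempts` (>= 1) times."""
    last_error = None
    for attempt in range(attempts):
        try:
            return call()
        except OSError as err:  # e.g. connection refused while daprd starts
            last_error = err
            if attempt < attempts - 1:
                sleep(delay)
    # Every attempt failed: surface the most recent error
    raise last_error
```

The sleep parameter is injectable only so that the helper can be exercised without actually waiting.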

kubectl logs deployment/nodeapp \

--container=node \

--namespace=${NAMESPACE}

Node App listening on port 3000!

DAPR_HTTP_PORT: 3500

DAPR_GRPC_PORT: 50001

Got a new order! Order ID: 1000

Successfully persisted state.

Got a new order! Order ID: 3

Successfully persisted state.

Got a new order! Order ID: 4

Successfully persisted state.

Got a new order! Order ID: 5

Successfully persisted state.

...

NOTE The Python app is hard-coded to point to the Node.JS server in the same namespace:

dapr_port = os.getenv("DAPR_HTTP_PORT", 3500)

dapr_url = "http://localhost:{}/v1.0/invoke/nodeapp/method/neworder".format(dapr_port)

We can demonstrate cross-namespace invocation using the debug (ash) app:

NAMESPACE="different"

kubectl create namespace ${NAMESPACE}

echo '

apiVersion: apps/v1

kind: Deployment

metadata:

name: debug

labels:

app: debug

spec:

replicas: 1

selector:

matchLabels:

app: debug

template:

metadata:

labels:

app: debug

annotations:

dapr.io/enabled: "true"

dapr.io/app-id: "debug"

spec:

containers:

- name: busybox

image: radial/busyboxplus:curl

command:

- ash

- -c

- "while true; do sleep 30; done"

' | kubectl apply --filename=- \

--namespace=${NAMESPACE}

kubectl exec deployment/debug \

--stdin --tty \

--container busybox \

--namespace=${NAMESPACE} \

-- ash

For completeness, let’s call the Dapr API through this Pod’s daprd. Remember that ${NAMESPACE} is now different:

curl localhost:3500/v1.0/metadata

{"id":"debug","actors":[],"extended":{},"components":[{"name":"statestore","type":"state.redis","version":"v1"}]}

That still works.
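The metadata response is plain JSON, so listing the Components that a sidecar has loaded takes only a few lines. A small sketch using the response shown above (component_names is a hypothetical helper):

```python
import json


def component_names(metadata_json: str) -> list:
    """List 'name (type)' for each Component in a /v1.0/metadata response."""
    metadata = json.loads(metadata_json)
    return [f'{c["name"]} ({c["type"]})' for c in metadata.get("components", [])]


if __name__ == "__main__":
    response = (
        '{"id":"debug","actors":[],"extended":{},'
        '"components":[{"name":"statestore","type":"state.redis","version":"v1"}]}'
    )
    print(component_names(response))
```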

Now let’s try our original (now incorrect) call against the Node.JS server’s API:

curl localhost:3500/v1.0/invoke/nodeapp/method/ports \

--max-time 2

curl: (28) Operation timed out after 2000 milliseconds with 0 bytes received

NOTE The --max-time is to ensure you don’t wait too long for what we know won’t work

This is because nodeapp is not present in the different namespace and is thus inaccessible by that name.

So, how do we access nodeapp in the dapr-system namespace? We append the app’s namespace to its name: nodeapp -> nodeapp.dapr-system

curl localhost:3500/v1.0/invoke/nodeapp.dapr-system/method/ports \

--max-time 2

{"DAPR_HTTP_PORT":"3500","DAPR_GRPC_PORT":"50001"}

NOTE We don’t include the namespace in the app-id when we deploy the app, only when we reference the app from another namespace.
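The naming rule is simple enough to capture in a helper. A sketch, with qualified_app_id being a name of my own invention:

```python
from typing import Optional


def qualified_app_id(app_id: str, namespace: Optional[str] = None) -> str:
    """Append the target app's namespace when calling across namespaces."""
    return f"{app_id}.{namespace}" if namespace else app_id


if __name__ == "__main__":
    # Same namespace: the bare app-id is enough
    print(qualified_app_id("nodeapp"))
    # Different namespace: suffix the app-id with the app's namespace
    print(qualified_app_id("nodeapp", "dapr-system"))
```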

The Node.JS app’s neworder function persists the data received (i.e. {"orderId":1000}) to the Dapr-configured state store (in this example, Redis). When we applied redis.yaml, this created a Component CRD called statestore that was configured to point to the Redis deployment that we helm install‘ed.

Now, from within Dapr applications (like the Node.JS app), we can use the Dapr API to persist (application) state.
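Persisting state through the sidecar is a plain HTTP call that names the state store Component. A minimal sketch of the URL and request body, assuming the Component is called statestore as created by redis.yaml and the sidecar listens on 3500 (state_url and save_body are hypothetical helpers):

```python
import json

STATE_STORE = "statestore"  # name of the Component created from redis.yaml


def state_url(key=None, port=3500):
    """URL for saving state (no key) or reading one key back."""
    base = f"http://localhost:{port}/v1.0/state/{STATE_STORE}"
    return f"{base}/{key}" if key else base


def save_body(items):
    """Encode a dict as the list of key/value objects that is POSTed."""
    return json.dumps([{"key": k, "value": v} for k, v in items.items()])


if __name__ == "__main__":
    print(state_url())
    print(save_body({"foo": "bar"}))
```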

This isn’t as easy to test using the Dapr API because state is scoped to the app.

Using the Debug Deployment:

# POST foo=bar

curl \

--request POST \

--header "Content-type: application/json" \

--data '[{"key":"foo","value":"bar"}]' \

http://localhost:3500/v1.0/state/statestore

# GET foo

curl \

--request GET \

http://localhost:3500/v1.0/state/statestore/foo

"bar"

And, accessing the Redis CLI (using the instructions provided by way of output from the Helm install command):

HGETALL debug||foo

1) "data"

2) "\"bar\""

3) "version"

4) "2"

NOTE The key value (foo) is prefixed by the app’s name debug

From the Redis client, I can get the Node.JS (nodeapp) order key value too:

HGETALL nodeapp||order

1) "data"

2) "{\"orderId\":3531}"

3) "version"

4) "3531"
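The HGETALL replies above are flat lists of alternating field names and values, and the key is the app's name and the state key joined by ||. A small sketch that decodes both, based only on the output shown here (parse_hgetall and split_key are hypothetical helpers, and the layout is what I observed rather than a documented contract):

```python
import json


def parse_hgetall(reply):
    """Turn a flat [field, value, field, value, ...] reply into a dict."""
    fields = dict(zip(reply[0::2], reply[1::2]))
    # "data" holds the JSON-encoded value; "version" is a counter
    return {"data": json.loads(fields["data"]), "version": int(fields["version"])}


def split_key(redis_key):
    """Split 'app||key' into (app, key)."""
    app_id, _, key = redis_key.partition("||")
    return app_id, key


if __name__ == "__main__":
    print(split_key("nodeapp||order"))
    print(parse_hgetall(["data", '{"orderId":3531}', "version", "3531"]))
```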

Lastly, I wanted to experiment with the gRPC mechanism. I used the Dapr Go SDK’s example code to write an even simpler server and client.

NAMESPACE="example"

kubectl apply \

--filename=./kubernetes/server.yaml \

--namespace=${NAMESPACE}

kubectl apply \

--filename=./kubernetes/client.yaml \

--namespace=${NAMESPACE}

kubectl logs deployment/server \

--container=app \

--namespace=${NAMESPACE}

2021/02/22 21:28:26 [main] Entered (port: 50051)

2021/02/22 21:28:26 [main] Start gRPC service: :50051

2021/02/22 21:28:45 [main:echo] Entered

2021/02/22 21:28:45 [main:echo] ContentType:text/plain, Verb:POST, QueryString:map[], DataTypeURL:, Hello Freddie

2021/02/22 21:28:50 [main:echo] Entered

2021/02/22 21:28:50 [main:echo] ContentType:text/plain, Verb:POST, QueryString:map[], DataTypeURL:, Hello Freddie

kubectl logs deployment/client \

--container=app \

--namespace=${NAMESPACE}

2021/02/22 21:28:45 [main] Entered (appID: server)

dapr client initializing for: 127.0.0.1:50001

[main] Response: Hello Freddie

[main] Response: Hello Freddie

[main] Response: Hello Freddie

[main] Response: Hello Freddie

[main] Response: Hello Freddie

[main] Response: Hello Freddie

[main] Response: Hello Freddie

In this example, even though the server is created generically (server, err := daprd.NewService(endpoint)), the Kubernetes manifest annotation includes dapr.io/app-protocol: "grpc" and this configures Dapr to use gRPC for communication with the service. The server must be implemented to support gRPC messages:

server.AddServiceInvocationHandler("echo", echo)

func echo(ctx context.Context, in *common.InvocationEvent) (out *common.Content, err error) {

...

}

But, the client need only make generic call invocations:

client.InvokeMethodWithContent(ctx, *appID, "echo", "post", content)

And, we can therefore invoke the server’s method (echo) via REST too:

curl \

--request POST \

--data 'Hello Freddie!' \

localhost:3500/v1.0/invoke/server/method/echo

Yields:

Hello Freddie!

While you’re running a debug shell, you can check Dapr’s (Prometheus) metrics:

NOTE By default, metrics are exposed on :9090 but you can configure the port using one of Dapr’s Pod annotations, namely dapr.io/metrics-port.

curl --request GET \

http://localhost:9090/metrics

Or, if you’d prefer you can port-forward to a daprd in ${NAMESPACE} and view the metrics in a browser:

kubectl port-forward deployment/server \

--namespace=${NAMESPACE} \

9090:9090
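The metrics endpoint returns Prometheus' text format, which is easy to skim programmatically. A rough parser sketch, assuming samples carry no trailing timestamp. The metric name in the example is made up for illustration and is not a real Dapr metric:

```python
def parse_metrics(text):
    """Map each sample (name plus labels) to its float value."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines
        if not line or line.startswith("#"):
            continue
        # The value is the last space-separated token (no timestamps assumed)
        name, _, value = line.rpartition(" ")
        samples[name] = float(value)
    return samples


if __name__ == "__main__":
    example = (
        "# HELP example_total A made-up counter.\n"
        "# TYPE example_total counter\n"
        'example_total{app_id="server"} 7\n'
    )
    print(parse_metrics(example))
```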

That’s all!