Below you will find pages that utilize the taxonomy term “Google”

Configuring Envoy to proxy Google Cloud Run v2

I’m building an emulator for Cloud Run. As I considered the solution, I assumed (more later) that I could implement Google’s gRPC interface for Cloud Run and use Envoy to proxy HTTP/REST requests to the gRPC service using Envoy’s gRPC-JSON transcoder.

Google calls this process Transcoding HTTP/JSON to gRPC, which I think is a better description.
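To make the idea concrete, here is a minimal sketch of the path-matching half of transcoding: an HTTP request is matched against a URL template and the captured segment becomes a field of the gRPC request. The template `POST /v2/{parent=projects/*/locations/*}/services` is my assumption of what the CreateService HTTP rule looks like, and the regular expression is a hand-written stand-in. This is not how Envoy implements its transcoder.

```go
package main

import (
	"fmt"
	"regexp"
)

// createServicePattern is a hand-written regular expression for the ASSUMED
// HTTP rule `POST /v2/{parent=projects/*/locations/*}/services`.
var createServicePattern = regexp.MustCompile(
	`^/v2/(projects/[^/]+/locations/[^/]+)/services$`,
)

// MatchCreateService reports whether an HTTP request would map to the
// CreateService RPC and, if so, returns the value bound to `parent`.
func MatchCreateService(method, path string) (parent string, ok bool) {
	if method != "POST" {
		return "", false
	}
	m := createServicePattern.FindStringSubmatch(path)
	if m == nil {
		return "", false
	}
	return m[1], true
}

func main() {
	// Hypothetical project and location names.
	parent, ok := MatchCreateService(
		"POST",
		"/v2/projects/my-project/locations/us-central1/services",
	)
	fmt.Println(parent, ok)
}
```

A real transcoder also maps the JSON body onto the request message; the sketch only covers the URL binding.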

Google’s Cloud Run v2 (v1 is no longer published to the googleapis repo) service.proto includes the following Services definition for CreateService:

gRPC Firestore `Listen` in Rust

Obsessing on gRPC Firestore Listen somewhat but it’s also a good learning opportunity for me to write stuff in Rust. This doesn’t work against Google’s public endpoint (possibly for the same reason that gRPCurl doesn’t work either) but this does work against the Go server described in the other post.

I’m also documenting here because I always encounter challenges using TLS with Rust (and this documents 2 working ways to do this with gRPC) and because it references two interesting (Rust) examples that use Google services.

gRPC Firestore `Listen`

A question on Stack Overflow, Firestore gRPC Listen does not send deletions, piqued my interest but created a second problem for me: I’m unable to get its Listen method to work using gRPCurl.

For what follows, you will need:

- a Google Project with Firestore enabled and a database (default: (default)) containing a Collection (e.g. Dogs) with at least one Document (e.g. freddie)

- googleapis cloned locally in order to access Protobufs

Summary

| client | server | R | See |
|---|---|---|---|
| gRPCurl | firestore.googleapis.com:443 | ✅ | link |
| Go client | firestore.googleapis.com:443 | ✅ | link |
| gRPCurl | Go server | ✅ | link |
| Go client | Go server | ✅ | link |

I subsequently tried this using Postman and it also works (✅).

Google Cloud Translation w/ gRPC 3 ways

General

You’ll need a Google Cloud project with Cloud Translation (translate.googleapis.com) enabled and a Service Account (and key) with suitable permissions in order to run the following.

    BILLING="..." # Your Billing ID (gcloud billing accounts list)
    PROJECT="..." # Your Project ID
    ACCOUNT="tester"
    EMAIL="${ACCOUNT}@${PROJECT}.iam.gserviceaccount.com"

    ROLES=(
      "roles/cloudtranslate.user"
      "roles/serviceusage.serviceUsageConsumer"
    )

    # Create Project
    gcloud projects create ${PROJECT}

    # Associate Project with your Billing Account
    gcloud billing projects link ${PROJECT} \
    --billing-account=${BILLING}

    # Enable Cloud Translation
    gcloud services enable translate.googleapis.com \
    --project=${PROJECT}

    # Create Service Account
    gcloud iam service-accounts create ${ACCOUNT} \
    --project=${PROJECT}

    # Create Service Account Key
    gcloud iam service-accounts keys create ${PWD}/${ACCOUNT}.json \
    --iam-account=${EMAIL} \
    --project=${PROJECT}

    # Update Project IAM permissions
    for ROLE in "${ROLES[@]}"
    do
      gcloud projects add-iam-policy-binding ${PROJECT} \
      --member=serviceAccount:${EMAIL} \
      --role=${ROLE}
    done

For the code, you’ll need to install protoc and preferably have it in your path.

Filtering metrics w/ Google Managed Prometheus

Google has published two very good blog posts on cost management:

- How to identify and reduce costs of your Google Cloud observability in Cloud Monitoring

- Cloud Logging pricing for Cloud Admins: How to approach it & save cost

This post is about my application cost reductions for Cloud Monitoring for Ackal.

I’m pleased with Google Cloud Managed Service for Prometheus (hereinafter GMP). I’ve a strong preference for letting service providers run components of Ackal that I consider important but non-differentiating.

Maintaining Container Images

As I contemplate moving my “thing” into production, I’m anticipating aspects of the application that need maintenance and how this can be automated.

I’d been negligent in the maintenance of some of my container images.

I’m using mostly Go and some Rust as the basis of static(ally-compiled) binaries that run in these containers but not every container has a base image of scratch. scratch is the only base image that doesn’t change and thus the only base image that doesn’t require that container images built FROM it be maintained.

Delegate domain-wide authority using Golang

I’d not used Google’s Domain-wide Delegation from Golang and struggled to find example code.

Google provides Java and Python samples.

Google has a myriad packages implementing its OAuth security and it’s always daunting trying to determine which one to use.

As it happens, I backed into the solution through client.Options

    ctx := context.Background()

    // Google Workspace APIs don't use IAM; they use OAuth scopes.
    // Scopes used here must be reflected in the scopes on the
    // Google Workspace Domain-wide Delegate client
    scopes := []string{ ... }

    // Delegates on behalf of this Google Workspace user
    subject := "a@google-workspace-email.com"

    creds, err := google.FindDefaultCredentialsWithParams(
        ctx,
        google.CredentialsParams{
            Scopes:  scopes,
            Subject: subject,
        },
    )
    if err != nil {
        log.Fatal(err)
    }

    opts := option.WithCredentials(creds)
    service, err := admin.NewService(ctx, opts)
    if err != nil {
        log.Fatal(err)
    }

In this case NewService applies to Google’s Golang Admin SDK API although the pattern of NewService(ctx) or NewService(ctx, opts), where opts is an option.ClientOption, is consistent across Google’s Golang libraries.

Firestore Export & Import

I’m using Firestore to maintain state in my “thing”.

In an attempt to ensure that I’m able to restore the database, I run (Cloud Scheduler) scheduled backups (see Automating Scheduled Firestore Exports) and I’ve been testing imports to ensure that the process works.

It does.

I thought I’d document an important but subtle consideration with Firestore exports (which I’d not initially understood).

Google facilitates that backup process with the sibling commands:

Automatic Certs w/ Golang gRPC service on Compute Engine

I needed to deploy a healthcheck-enabled gRPC TLS-enabled service. Fortunately, most (all?) of the SDKs include an implementation, e.g. Golang has grpc-go/health.

I learned in my travels that:

- DigitalOcean [App] platform does not (link) work with TLS-based gRPC apps.

- Fly has a regression (link) that breaks gRPC

So, I resorted to Google Cloud Platform (GCP). Although Cloud Run would be well-suited to running the gRPC app, it uses a proxy|sidecar to provision a cert for the app and I wanted to be able to (easily) use a custom domain and give myself a somewhat general-purpose solution.

Consul discovers Google Cloud Run

I’ve written a basic discoverer of Google Cloud Run services. This is for a project and it extends work done in some previous posts to Multiplex gRPC and Prometheus with Cloud Run and to use Consul for Prometheus service discovery.

This solution:

- Accepts a set of Google Cloud Platform (GCP) projects

- Trawls them for Cloud Run services

- Assumes that the services expose Prometheus metrics on :443/metrics

- Relabels the services

- Surfaces any discovered Cloud Run services’ metrics in Prometheus
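The relabelling step in the list above can be sketched as a function that turns a Cloud Run service URL into a scrape target. This is a minimal illustration under stated assumptions, not the discoverer's actual code: the `Target` type, the `project` and `service` label names and the example URL are all hypothetical. `__scheme__` and `__metrics_path__` are the labels Prometheus uses for the scrape scheme and path.

```go
package main

import (
	"fmt"
	"net"
	"net/url"
)

// Target is a hypothetical, simplified view of a Prometheus scrape target.
type Target struct {
	Address string            // host:port to scrape
	Labels  map[string]string // labels attached during relabelling
}

// ToTarget converts a Cloud Run service URL into a target that is scraped
// over HTTPS on port 443 at /metrics.
func ToTarget(project, name, serviceURL string) (Target, error) {
	u, err := url.Parse(serviceURL)
	if err != nil {
		return Target{}, err
	}
	if u.Scheme != "https" || u.Hostname() == "" {
		return Target{}, fmt.Errorf("unexpected service URL: %q", serviceURL)
	}
	return Target{
		Address: net.JoinHostPort(u.Hostname(), "443"),
		Labels: map[string]string{
			"__scheme__":       "https",
			"__metrics_path__": "/metrics",
			"project":          project, // hypothetical label name
			"service":          name,    // hypothetical label name
		},
	}, nil
}

func main() {
	// Hypothetical project, service name and URL.
	t, err := ToTarget("my-project", "my-service", "https://my-service-abc123-uc.a.run.app")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(t.Address, t.Labels["__metrics_path__"])
}
```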

You’ll need Docker and Docker Compose.

Multiplexing gRPC and HTTP (Prometheus) endpoints with Cloud Run

Google Cloud Run is useful but each service is limited to exposing a single port. This caused me problems with a gRPC service that serves (non-gRPC) Prometheus metrics because, customarily, you would serve gRPC on one port and the Prometheus metrics on another.

Fortunately, cmux provides a solution by providing a mechanism that multiplexes both services (gRPC and HTTP) on a single port!
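To show what "multiplexing on a single port" means, here is a minimal sketch of a different, simpler technique: dispatching inside one `http.Handler` on the request's protocol version and content type. It is not cmux, which matches at the connection level, and the handlers are stubs that I've made up for illustration.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// LooksLikeGRPC reports whether a request resembles gRPC: HTTP/2 with a
// content type that starts with "application/grpc".
func LooksLikeGRPC(protoMajor int, contentType string) bool {
	return protoMajor == 2 && strings.HasPrefix(contentType, "application/grpc")
}

// Mux routes gRPC-looking requests to grpcHandler and all others to httpHandler.
func Mux(grpcHandler, httpHandler http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if LooksLikeGRPC(r.ProtoMajor, r.Header.Get("Content-Type")) {
			grpcHandler.ServeHTTP(w, r)
			return
		}
		httpHandler.ServeHTTP(w, r)
	})
}

func main() {
	// Stub handlers standing in for a gRPC server and a metrics endpoint.
	grpcStub := http.HandlerFunc(func(w http.ResponseWriter, _ *http.Request) {
		fmt.Fprint(w, "grpc")
	})
	metrics := http.HandlerFunc(func(w http.ResponseWriter, _ *http.Request) {
		fmt.Fprint(w, "metrics")
	})

	// httptest.NewRequest builds an HTTP/1.1 request, so this goes to metrics.
	req := httptest.NewRequest("GET", "/metrics", nil)
	rec := httptest.NewRecorder()
	Mux(grpcStub, metrics).ServeHTTP(rec, req)
	fmt.Println(rec.Body.String())
}
```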

TL;DR See the cmux Limitations and use:

grpcl := m.MatchWithWriters( cmux.HTTP2MatchHeaderFieldSendSettings("content-type", "application/grpc"))

Extending the example from the cmux repo:

Firestore Golang Timestamps & Merging

I’m using Google’s Golang SDK for Firestore. The experience is excellent and I’m quickly becoming a fan of Firestore. However, as a Golang Firestore developer, I’m feeling less loved and some of the concepts in the database were causing me a conundrum.

I’m still not entirely certain that I have Timestamps nailed but… I learned an important lesson on the auto-creation of Timestamps in documents and how to retain these values.

Cloud Firestore Triggers in Golang

I was pleased to discover that Google provides a non-Node.JS mechanism to subscribe to and act upon Firestore triggers, Google Cloud Firestore Triggers. I’ve nothing against Node.JS but, for the project I’m developing, everything else is written in Golang. It’s good to keep it all in one language.

I’m perplexed that Cloud Functions still (!) only supports Go 1.13 (03-Sep-2019). Even Go 1.14 (25-Feb-2020) was released pre-pandemic and we’re now running on 1.16. Come on Google!

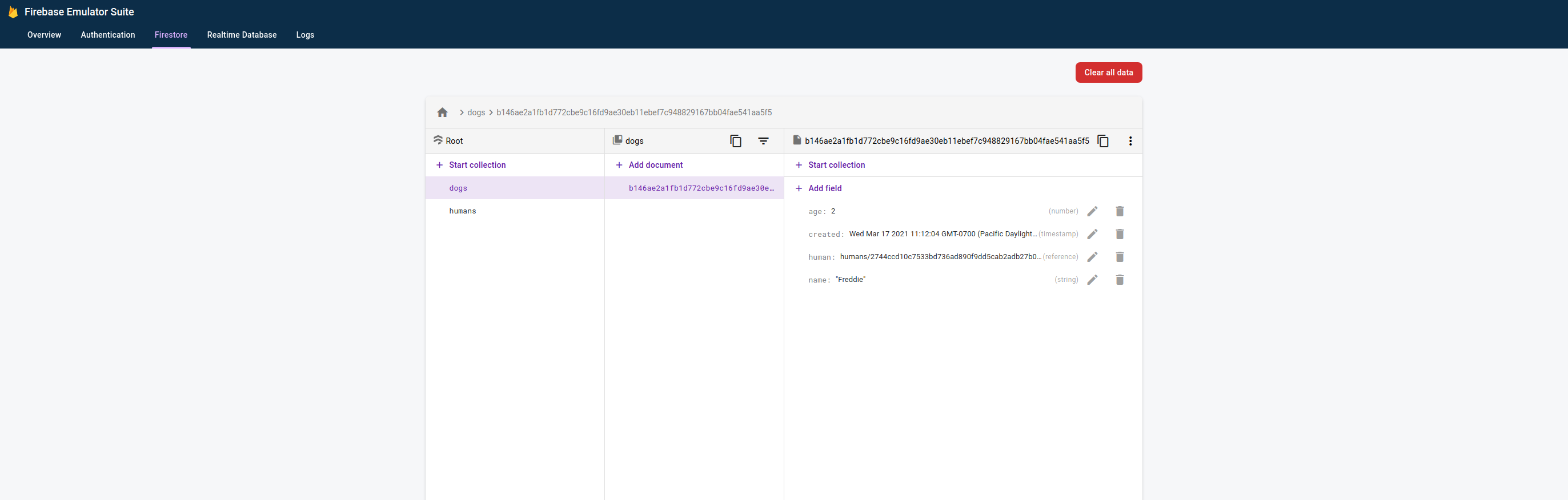

Using Golang with the Firestore Emulator

This works great but it wasn’t clearly documented for non-Firebase users. I assume it will work, as well, for any of the client libraries (not just Golang).

Assume you have some (Golang) code (in this case using the Google Cloud Client Library) that interacts with a Firestore database, something of the form:

    package main

    import (
        "context"
        "crypto/sha256"
        "fmt"
        "log"
        "os"
        "time"

        "cloud.google.com/go/firestore"
    )

    // hash returns the hex-encoded SHA-256 of s; used to derive document IDs.
    func hash(s string) string {
        h := sha256.New()
        h.Write([]byte(s))
        return fmt.Sprintf("%x", h.Sum(nil))
    }

    type Dog struct {
        Name    string                 `firestore:"name"`
        Age     int                    `firestore:"age"`
        Human   *firestore.DocumentRef `firestore:"human"`
        Created time.Time              `firestore:"created"`
    }

    func NewDog(name string, age int, human *firestore.DocumentRef) Dog {
        return Dog{
            Name:    name,
            Age:     age,
            Human:   human,
            Created: time.Now(),
        }
    }

    func (d *Dog) ID() string {
        return hash(d.Name)
    }

    type Human struct {
        Name string `firestore:"name"`
    }

    func (h *Human) ID() string {
        return hash(h.Name)
    }

    func main() {
        ctx := context.Background()
        project := os.Getenv("PROJECT")

        // Check the error before doing anything else with the client
        client, err := firestore.NewClient(ctx, project)
        if err != nil {
            log.Fatal(err)
        }
        defer client.Close()

        if value := os.Getenv("FIRESTORE_EMULATOR_HOST"); value != "" {
            log.Printf("Using Firestore Emulator: %s", value)
        }

        me := Human{
            Name: "me",
        }
        meDocRef := client.Collection("humans").Doc(me.ID())
        if _, err := meDocRef.Set(ctx, me); err != nil {
            log.Fatal(err)
        }

        freddie := NewDog("Freddie", 2, meDocRef)
        freddieDocRef := client.Collection("dogs").Doc(freddie.ID())
        if _, err := freddieDocRef.Set(ctx, freddie); err != nil {
            log.Fatal(err)
        }
    }

Then you can interact instead with the Firestore Emulator.
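The code above only logs FIRESTORE_EMULATOR_HOST; the client library reads the same variable to decide where to connect. As a small sketch, a program could validate the value before creating the client. `ParseEmulatorHost` is a hypothetical helper of mine, not part of the Firestore library, and it assumes the value has the form host:port.

```go
package main

import (
	"fmt"
	"net"
	"os"
)

// ParseEmulatorHost splits a FIRESTORE_EMULATOR_HOST value into host and
// port, returning an error when the value is not of the form host:port.
func ParseEmulatorHost(value string) (host, port string, err error) {
	host, port, err = net.SplitHostPort(value)
	if err != nil {
		return "", "", fmt.Errorf("FIRESTORE_EMULATOR_HOST=%q: %w", value, err)
	}
	if host == "" || port == "" {
		return "", "", fmt.Errorf("FIRESTORE_EMULATOR_HOST=%q: want host:port", value)
	}
	return host, port, nil
}

func main() {
	value := os.Getenv("FIRESTORE_EMULATOR_HOST")
	if value == "" {
		fmt.Println("FIRESTORE_EMULATOR_HOST is unset")
		return
	}
	host, port, err := ParseEmulatorHost(value)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("emulator host=%s port=%s\n", host, port)
}
```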

Programmatically deploying Cloud Run services (Golang|Python)

Phew! Programmatically deploying Cloud Run services should be easy but I didn’t find it so.

My issues were that the Cloud Run Admin (!) API is poorly documented and it uses non-standard endpoints (thanks Sal!). Here, for others who may struggle with this, is how I got this to work.

Goal

Programmatically (have Golang, Python, want Rust) deploy services to Cloud Run.

i.e. achieve this:

    gcloud run deploy ${NAME} \
    --image=${IMAGE} \
    --platform=managed \
    --no-allow-unauthenticated \
    --region=${REGION} \
    --project=${PROJECT}
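On the "non-standard endpoints" point: as far as I understand the v1 Admin API, requests go to a per-region host rather than a single global one. The sketch below only builds that URL. The host and path pattern are my assumption of the Knative-style form and should be checked against what gcloud actually calls; the region and project values are hypothetical.

```go
package main

import "fmt"

// ServicesURL builds the ASSUMED regional endpoint for listing or creating
// Cloud Run services in a project. The pattern is an assumption, not
// something confirmed by this post.
func ServicesURL(region, project string) string {
	return fmt.Sprintf(
		"https://%s-run.googleapis.com/apis/serving.knative.dev/v1/namespaces/%s/services",
		region, project,
	)
}

func main() {
	// Hypothetical region and project.
	fmt.Println(ServicesURL("us-central1", "my-project"))
}
```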

TRICK

--log-http is your friend

webmention

Let’s see if this works!?

I’ve added the following link to this site’s baseof.html so that it is now rendered for each page:

<link

href="https://us-central1-webmention.cloudfunctions.net/webmention"

rel="webmention" />

I discovered indieweb yesterday reading about webmention. Who knows what got me to webmention!?

The principles of both are sound. Instead of relying on come-go behemoths to run our digital world, indieweb seeks to provide technologies that enable us to achieve things without them. webmention is a cross-walled-garden mechanism to perform an evolved form of pingbacks. If X references one of Y’s posts, X’s server notifies Y’s server during publication through a webmention service and, Y can then decide to add reference counts of copies of the referring link to their page. Clever.
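The notification itself is small: the sender POSTs a form-encoded `source` (the referring page) and `target` (the page being referenced) to the receiver's webmention endpoint. Here is a minimal sketch that builds such a request without sending it. The example URLs and endpoint are hypothetical.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"strings"
)

// WebmentionBody returns the form-encoded body of a webmention notification.
func WebmentionBody(source, target string) string {
	form := url.Values{}
	form.Set("source", source)
	form.Set("target", target)
	return form.Encode()
}

// NewWebmention builds (but does not send) the POST request to the endpoint.
func NewWebmention(endpoint, source, target string) (*http.Request, error) {
	body := strings.NewReader(WebmentionBody(source, target))
	req, err := http.NewRequest(http.MethodPost, endpoint, body)
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	return req, nil
}

func main() {
	// Hypothetical endpoint, source and target.
	req, err := NewWebmention(
		"https://y.example/webmention",
		"https://x.example/post",
		"https://y.example/original",
	)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(req.Method, req.URL, req.Header.Get("Content-Type"))
}
```

The receiver is expected to verify that the source page really links to the target before displaying anything.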

Hugo and Google Cloud Storage

I’m using Hugo as a static site generator for this blog. I’m using Firebase (for free) to host lefsilver.

I have other domains that I want to promote and decided to use Google Cloud Storage buckets for these sites. Using Google Cloud Storage for Hosting a static website and using Hugo to deploy sites to Google Cloud Storage (GCS) are documented but, I didn’t find a location where this is combined into a single tutorial and I wanted to add an explanation for ensuring your sites are included in Google’s and Bing’s search indexes.

Actions SDK Conversational Quickstart

Google’s tutorial didn’t work for me.

In this post, I’ll help you get this working.

https://developers.google.com/assistant/conversational/quickstart

Create and set up a project

This mostly works.

I recommend using the Actions Console as described to create the project.

I chose “Custom” and “Blank Project”

You need not enable Actions API as this is done automatically:

For the console work, I’m going to use Google’s excellent Cloud Shell. You may access this through the browser or through a terminal:

WASM Cloud Functions

Following on from waPC & Protobufs and a question on Stack Overflow about Cloud Functions, I was compelled to try running WASM on Cloud Functions with no JavaScript.

I wanted to reuse the WASM waPC functions that I’d written in Rust as described in the other post. Cloud Functions does not (yet!?) provide a Rust runtime and so I’m using the waPC Host for Go in this example.

It works!

    PARAMS=$(printf '{"a":{"real":39,"imag":3},"b":{"real":39,"imag":3}}' | base64)
    TOKEN=$(gcloud auth print-identity-token)

    echo "{
      \"filename\":\"complex.wasm\",
      \"function\":\"c:mul\",
      \"params\":\"${PARAMS}\"
    }" |\
    curl \
    --silent \
    --request POST \
    --header "Content-Type: application/json" \
    --header "Authorization: Bearer ${TOKEN}" \
    --data @- \
    https://${REGION}-${PROJECT}.cloudfunctions.net/invoker

yields (correctly):

Rust Crate Transparency && Rust SDK for Google Trillian

I’m noodling the utility of a Transparency solution for Rust Crates. When developers push crates to Cargo, a bunch of metadata is associated with the crate. E.g. protobuf. As with Golang Modules, Python packages on PyPi etc., there appears to be utility in making tamperproof recordings of these publications. Then, other developers may confirm that a crate pulled from crates.io is highly unlikely to have been changed.
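As a sketch of what a "tamperproof recording" might start from: a transparency log stores a hash of each published artifact, and anyone who later downloads the artifact can recompute the hash and compare. The example below computes an RFC 6962-style leaf hash, SHA-256 over a 0x00 byte followed by the data. Using the raw crate bytes as the leaf is my assumption for illustration, not necessarily what this personality records.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// LeafHash computes an RFC 6962-style Merkle leaf hash:
// SHA-256(0x00 || data), hex-encoded.
func LeafHash(data []byte) string {
	h := sha256.New()
	h.Write([]byte{0x00}) // leaf prefix distinguishes leaves from interior nodes
	h.Write(data)
	return hex.EncodeToString(h.Sum(nil))
}

func main() {
	// Placeholder bytes standing in for the contents of a .crate file.
	crate := []byte("contents of a hypothetical .crate file")
	fmt.Println(LeafHash(crate))
}
```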

On Linux, Cargo stores downloaded crates under ${HOME}/.cargo/registry. In the case of the latest version (2.12.0) of protobuf, on my machine, I have:

Google Trillian on Cloud Run

I’ve written previously (Google Trillian for Noobs) about Google’s interesting project Trillian and about some of the “personalities” (e.g. PyPi Transparency) that I’ve built using it.

Having gone slightly cra-cra on Cloud Run and gRPC this week with Golang gRPC Cloud Run and gRPC, Cloud Run & Endpoints, I thought it’d be fun to deploy Trillian and a personality to Cloud Run.

It mostly (!) works :-)

At the end of the post, I’ve summarized creating a Cloud SQL instance to host the Trillian data(base).

gRPC, Cloud Run & Endpoints

<3 Google but there’s quite often an assumption that we’re all sitting around the engineering table and, of course, we’re not.

Cloud Endpoints is a powerful offering but – IMO – it’s super confusing to understand and complex to deploy.

If you’re familiar with the motivations behind service meshes (e.g. Istio), Cloud Endpoints fits in a similar niche (“neesh” or “nitch”?). The underlying ambition is that developers can take existing code and, by adding a proxy (or sidecar), gain general-purpose abstractions, security, logging etc.

Golang gRPC Cloud Run

Update: 2020-03-24: Since writing this post, I’ve contributed Golang and Rust samples to Google’s project. I recommend you start there.

Google explained how to run gRPC servers with Cloud Run. The examples are good but only Python and Node.JS:

Missing Golang…. until now ;-)

I had problems with 1.14 and so I’m using 1.13.

Project structure

I’ll tidy up my repo but the code may be found:

Accessing GCR repos from Kubernetes

Until today, I’d not accessed a Google Container Registry repo from a non-GKE Kubernetes deployment.

It turns out that it’s pretty well-documented (link) but here’s an end-to-end example.

Assuming:

BILLING=[[YOUR-BILLING]]

PROJECT=[[YOUR-PROJECT]]

SERVER="us.gcr.io"

If not already:

    gcloud projects create ${PROJECT}

    gcloud beta billing projects link ${PROJECT} \
    --billing-account=${BILLING}

    gcloud services enable containerregistry.googleapis.com \
    --project=${PROJECT}

Container Registry

    IMAGE="busybox" # Or ...

    docker pull ${IMAGE}

    docker tag \
    ${IMAGE} \
    ${SERVER}/${PROJECT}/${IMAGE}

    docker push ${SERVER}/${PROJECT}/${IMAGE}

    gcloud container images list-tags ${SERVER}/${PROJECT}/${IMAGE}

Service Account

Create a service account that’s permitted to download (read-only) images from this project’s registry
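For context on how such a service account is typically consumed by a non-GKE cluster: Kubernetes pulls private images using a Docker config in which, for Container Registry, the username is `_json_key` and the password is the content of the service account's key file. The sketch below builds that JSON. It assumes this is the mechanism in play, and the key content shown is a placeholder, not a real key.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// registryAuth is one entry under "auths" in a Docker config file.
type registryAuth struct {
	Username string `json:"username"`
	Password string `json:"password"`
	Auth     string `json:"auth"`
}

// dockerConfig is the top-level shape of a Docker config file.
type dockerConfig struct {
	Auths map[string]registryAuth `json:"auths"`
}

// BasicAuth returns base64("username:password"), as used in the "auth" field.
func BasicAuth(username, password string) string {
	return base64.StdEncoding.EncodeToString([]byte(username + ":" + password))
}

// DockerConfigJSON builds a Docker config for a registry server, using the
// service account key file content as the password for user "_json_key".
func DockerConfigJSON(server, keyJSON string) (string, error) {
	cfg := dockerConfig{Auths: map[string]registryAuth{
		server: {
			Username: "_json_key",
			Password: keyJSON,
			Auth:     BasicAuth("_json_key", keyJSON),
		},
	}}
	b, err := json.Marshal(cfg)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	// Placeholder key content; a real key file is much longer.
	out, err := DockerConfigJSON("us.gcr.io", `{"type":"service_account"}`)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out)
}
```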

Cloud Build wishlist: Mountable Golang Modules Proxy

I think it would be valuable if Google were to provide volumes in Cloud Build of package registries (e.g. Go Modules; PyPi; Maven; NPM etc.).

Google provides a mirror of a subset of Docker Hub. This confers several benefits: Google’s imprimatur; speed (latency); bandwidth; and convenience.

The same benefits would apply to package registries.

In the meantime, there’s a hacky way to gain some of the benefits of these when using Cloud Build.

In the following example, I’ll show an approach using Golang Modules and Google’s module proxy aka proxy.golang.org.
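As background only, and separate from the approach described in the post: a module proxy is addressed with plain HTTPS GETs of the form `{proxy}/{module}/@v/{version}.info`, where uppercase letters in the module path are escaped as `!` followed by the lowercase letter. A minimal sketch of building such a URL follows. The module and version are example values and nothing here is fetched.

```go
package main

import (
	"fmt"
	"strings"
)

// EscapePath applies the module proxy's case-encoding: each uppercase ASCII
// letter becomes '!' followed by its lowercase form.
func EscapePath(s string) string {
	var b strings.Builder
	for _, r := range s {
		if r >= 'A' && r <= 'Z' {
			b.WriteByte('!')
			b.WriteRune(r + ('a' - 'A'))
			continue
		}
		b.WriteRune(r)
	}
	return b.String()
}

// InfoURL returns the URL of the .info document for a module version.
func InfoURL(proxy, module, version string) string {
	return proxy + "/" + EscapePath(module) + "/@v/" + EscapePath(version) + ".info"
}

func main() {
	// Example module and version; nothing is downloaded here.
	fmt.Println(InfoURL("https://proxy.golang.org", "github.com/BurntSushi/toml", "v1.2.0"))
}
```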