Below you will find pages that utilize the taxonomy term “GCP”

Authenticate PromLens to Google Managed Prometheus

I’m using Google Managed Service for Prometheus (GMP) and liking it.

Some time ago, I tried using PromLens with GMP, but GMP’s Prometheus HTTP API endpoint requires auth and I’ve battled Prometheus’ somewhat limited auth mechanism before (see Scraping metrics exposed by Google Cloud Run services that require authentication).

Listening to PromCon EU 2022 videos, I learned that PromLens has been open sourced and contributed to the Prometheus project. Eventually, the functionality of PromLens should be combined into the Prometheus UI.

Prometheus Exporters for fly.io and Vultr

I’ve been on a roll building utilities this week. I developed a Service Health dashboard for my “thing”, a Prometheus Exporter for Fly.io and today, a Prometheus Exporter for Vultr. This is motivated by the fear that I will forget a deployed Cloud resource and incur a horrible bill.

I’ve now written several Prometheus Exporters for cloud platforms:

- Prometheus Exporter for GCP

- Prometheus Exporter for Linode

- Prometheus Exporter for Fly.io

- Prometheus Exporter for Vultr

Each of them monitors resource deployments and produces resource count metrics that can be scraped by Prometheus and alerted on with Alertmanager. I have Alertmanager configured to send notifications to Pushover. Last week I wrote an integration that lets Google Cloud Monitoring send notifications to Pushover too.

Using Google Monitoring Alerting to send Pushover notifications

Artifacts

- GitHub: go-gcp-pushover-notificationchannel

- Image: ghcr.io/dazwilkin/go-gcp-pushover-notificationchannel:220515

Pushover

Logging in to your Pushover account, you will be presented with a summary|dashboard page that includes Your User Key. Copy the value of this key into a variable called PUSHOVER_USER

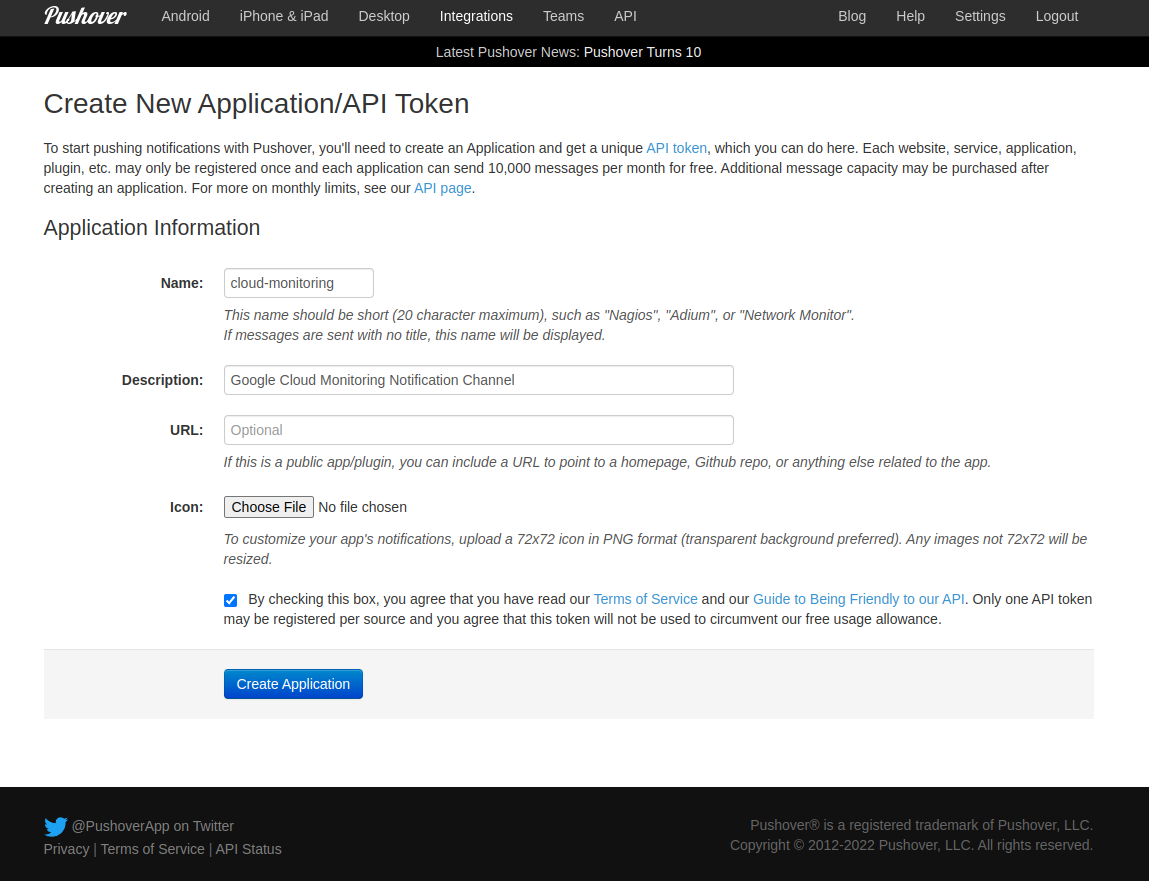

Create New Application|API Token

The Pushover API has a Pushing Messages method. The documentation describes the format of the HTTP request. It must be a POST using TLS (https://) to https://api.pushover.net/1/messages.json. The content-type should be application/json. In the JSON body of the message, we must include token (PUSHOVER_TOKEN), user (PUSHOVER_USER_KEY), device (we’ll use cloud-monitoring), a title and a message.
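As a rough illustration of that request, here is a minimal shell sketch. The function names (json_escape, pushover_payload, pushover_send) are hypothetical and not part of the project. It assumes PUSHOVER_TOKEN and PUSHOVER_USER_KEY are already set in the environment. The hand-rolled escaping only covers backslashes and double quotes, so a tool such as jq would be a safer choice for arbitrary text.

```shell
#!/bin/sh
# Sketch only: builds the JSON body described above and POSTs it to Pushover.
# Assumes PUSHOVER_TOKEN and PUSHOVER_USER_KEY are set by the caller.

PUSHOVER_URL="https://api.pushover.net/1/messages.json"

# json_escape escapes backslashes and double quotes so that a value can be
# placed inside a JSON string. Control characters are NOT handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# pushover_payload prints the JSON body for a given title ($1) and message ($2).
# The device name "cloud-monitoring" is the one chosen in the text above.
pushover_payload() {
  printf '{"token":"%s","user":"%s","device":"%s","title":"%s","message":"%s"}' \
    "${PUSHOVER_TOKEN}" \
    "${PUSHOVER_USER_KEY}" \
    "cloud-monitoring" \
    "$(json_escape "$1")" \
    "$(json_escape "$2")"
}

# pushover_send POSTs the payload over TLS with a JSON content-type.
pushover_send() {
  pushover_payload "$1" "$2" |
    curl \
      --silent \
      --request POST \
      --header "Content-Type: application/json" \
      --data @- \
      "${PUSHOVER_URL}"
}
```

Calling `pushover_send "Test" "Hello from Cloud Monitoring"` after sourcing the script should send a single notification, assuming the token and user key are valid.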

Cloud Run custom domain mappings

I have several Cloud Run services that I want to map to a domain.

During development, I create a Google Cloud Platform (GCP) project each day into which everything is deployed. This means that, every day, the Cloud Run services have new, non-inferable (to me) URLs. I thought this would be tedious to manage because:

- My DNS service isn’t programmable (I know!)

- Cloud Run services have non-inferable (by me) URLs

i.e. I thought I’d have to manually update the DNS entries each day.

Automating Scheduled Firestore Exports

For my “thing”, I use Firestore to persist state. I like Firestore a lot and, having been around Google for almost (!) a decade, I much prefer it to Datastore.

Firestore has a managed export|import service and I use this to backup Firestore collections|documents.

I’d been doing backups manually (using gcloud) and decided today to take the plunge and use Cloud Scheduler for the first time. I’d been reluctant to do this until now because I’d assumed incorrectly that I’d need to write a wrapping service to invoke the export.
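The manual step is typically a single command of the form `gcloud firestore export gs://${BUCKET}`. The sketch below shows one way a Cloud Scheduler HTTP job might call Firestore's exportDocuments REST method directly, with no wrapping service. It is an illustration under assumptions, not necessarily the setup used here: the function names, the job name firestore-export, the us-central1 location and the daily 03:00 schedule are all placeholders. The service account would also need permission to run exports (for example roles/datastore.importExportAdmin) and to write to the bucket.

```shell
#!/bin/sh
# Sketch only: schedule a Firestore export with Cloud Scheduler.
# Project, bucket, job name, location and schedule are placeholders.

# export_uri prints the Firestore REST endpoint that exports the default
# database of the project given as $1.
export_uri() {
  printf 'https://firestore.googleapis.com/v1/projects/%s/databases/(default):exportDocuments' "$1"
}

# export_body prints the JSON request body naming the Cloud Storage
# bucket ($1) that receives the export.
export_body() {
  printf '{"outputUriPrefix":"gs://%s"}' "$1"
}

# create_export_job registers a Cloud Scheduler HTTP job that POSTs to the
# endpoint, authenticating with an OAuth token for the service account ($3).
create_export_job() {
  project=$1
  bucket=$2
  account=$3
  gcloud scheduler jobs create http firestore-export \
    --project="${project}" \
    --location=us-central1 \
    --schedule="0 3 * * *" \
    --uri="$(export_uri "${project}")" \
    --http-method=POST \
    --headers="Content-Type=application/json" \
    --message-body="$(export_body "${bucket}")" \
    --oauth-service-account-email="${account}"
}
```

Something like `create_export_job "${PROJECT}" "${BUCKET}" "${ACCOUNT}"` would then create the job. Nothing runs until that function is called.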

Using Google's Public Certificate Authority with Golang autocert

Last year, I wrote about using Automatic Certs w/ Golang gRPC service on Compute Engine. That solution uses ACME with (the wonderful) Let’s Encrypt. Google is offering a private preview of Automate Public Certificates Lifecycle Management via RFC 8555 (ACME). Because I’m using Google Cloud Platform extensively to build a “thing” and I think it would be useful to have a backup to Let’s Encrypt, I thought I’d give the solution a try. You’ll need to sign up for the private preview for what follows to work.

Infrastructure as Code

Problem

I’m building an application that comprises:

- Kubernetes¹

- Kubernetes Operator

- Cloud Firestore

- Cloud Functions

- Cloud Run

- Cloud Endpoints

- Stripe

- Firebase Authentication

¹ - I’m using Google Kubernetes Engine (GKE) but may include other managed Kubernetes offerings (e.g. Digital Ocean, Linode, Oracle). GKE clusters are manageable by gcloud but other platforms require other CLI tools. All are accessible from bash but are these supported by e.g. Terraform (see below)?

Many of the components are packaged as container images and, because I’m using GitHub to host the project’s repos (I’ll leave the monorepo discussion for another post), I’ve become inculcated and use GitHub Container Registry (GHCR) as the container repo.

Cloud Firestore Triggers in Golang

I was pleased to discover that Google provides a non-Node.JS mechanism to subscribe to and act upon Firestore triggers, Google Cloud Firestore Triggers. I’ve nothing against Node.JS but, for the project I’m developing, everything else is written in Golang. It’s good to keep it all in one language.

I’m perplexed that Cloud Functions still (!) only supports Go 1.13 (03-Sep-2019). Even Go 1.14 (25-Feb-2020) was released pre-pandemic and we’re now running on 1.16. Come on Google!

WASM Cloud Functions

Following on from waPC & Protobufs and a question on Stack Overflow about Cloud Functions, I was compelled to try running WASM on Cloud Functions with no JavaScript.

I wanted to reuse the WASM waPC functions that I’d written in Rust as described in the other post. Cloud Functions does not (yet!?) provide a Rust runtime and so I’m using the waPC Host for Go in this example.

It works!

PARAMS=$(printf '{"a":{"real":39,"imag":3},"b":{"real":39,"imag":3}}' | base64)
TOKEN=$(gcloud auth print-identity-token)
echo "{
  \"filename\":\"complex.wasm\",
  \"function\":\"c:mul\",
  \"params\":\"${PARAMS}\"
}" |\
curl \
  --silent \
  --request POST \
  --header "Content-Type: application/json" \
  --header "Authorization: Bearer ${TOKEN}" \
  --data @- \
  https://${REGION}-${PROJECT}.cloudfunctions.net/invoker

yields (correctly):

Setting up a GCE Instance as an Inlets Exit Node

The prolific Alex Ellis has a new project, Inlets.

Here’s a quick tutorial using Google Cloud Platform’s (GCP) Compute Engine (GCE).

NB I’m using one of Google’s “Always free” f1-micro instances but you may still pay for network *gress and storage

Assumptions

I’m assuming you’ve a Google account, have used GCP and have a billing account established, i.e. the following returns at least one billing account:

gcloud beta billing accounts list

If you’ve only one billing account and it’s the one you wish to use, then you can:

Google Cloud Platform (GCP) Exporter

Earlier this week I discussed a Linode Prometheus Exporter.

I added metrics for Digital Ocean’s Managed Kubernetes service to @metalmatze’s Digital Ocean Exporter.

This left metrics for Google Cloud Platform (GCP), which has, for many years, been my primary cloud platform. So, today I wrote a Prometheus Exporter for Google Cloud Platform.

All 3 of these exporters follow the template laid down by @metalmatze and, because each of these services has a well-written Golang SDK, it’s straightforward to implement an exporter for each of them.