Using Google Monitoring Alerting to send Pushover notifications

Artifacts

- GitHub: go-gcp-pushover-notificationchannel

- Image: ghcr.io/dazwilkin/go-gcp-pushover-notificationchannel:220515

Pushover

After logging in to your Pushover account, you are presented with a summary|dashboard page that includes Your User Key. Copy the value of this key into a variable called PUSHOVER_USER.

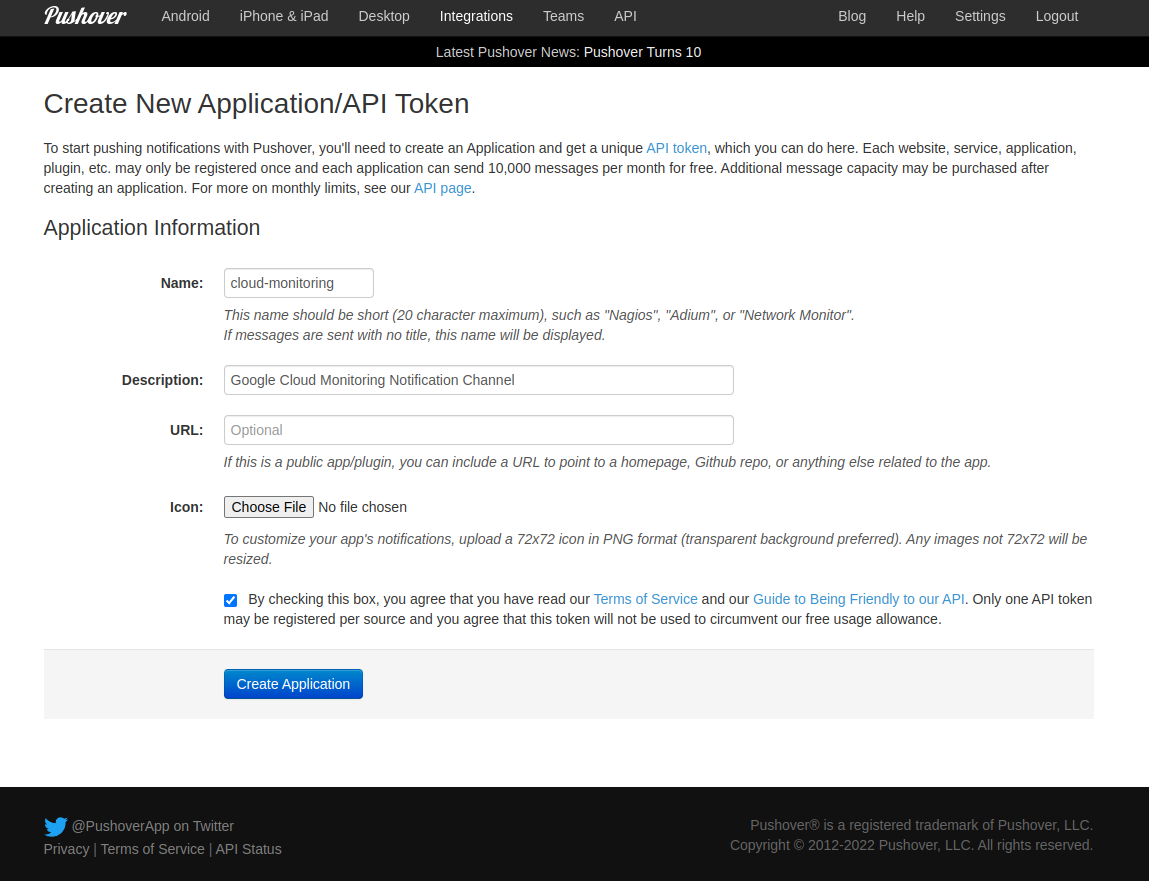

Create New Application|API Token

The Pushover API has a Pushing Messages method. The documentation describes the format of the HTTP request: it must be a POST over TLS to https://api.pushover.net/1/messages.json, and the Content-Type should be application/json. In the JSON body of the message, we must include token (PUSHOVER_TOKEN), user (PUSHOVER_USER_KEY), device (we’ll use cloud-monitoring), a title and a message.

Cloud Run custom domain mappings

I have several Cloud Run services that I want to map to a domain.

During development, I create a Google Cloud Platform (GCP) project each day into which everything is deployed. This means that, every day, the Cloud Run services have new, non-inferable (to me) URLs. I thought this would be tedious to manage because:

- My DNS service isn’t programmable (I know!)

- Cloud Run services have non-inferable (by me) URLs

i.e. I thought I’d have to manually update the DNS entries each day.

Automating Scheduled Firestore Exports

For my “thing”, I use Firestore to persist state. I like Firestore a lot and, having been around Google for almost (!) a decade, I much prefer it to Datastore.

Firestore has a managed export|import service and I use this to back up Firestore collections|documents.

I’d been doing backups manually (using gcloud) and decided today to take the plunge and use Cloud Scheduler for the first time. I’d been reluctant to do this until now because I’d assumed incorrectly that I’d need to write a wrapping service to invoke the export.

Playing with GitHub Container Registry REST API

I’ve a day to catch up on blogging. I’m building a “thing” and getting this near to the finish line consumes my time and has meant that I’m not originating anything particularly new. However, there are a couple of tricks in my deployment process that may be of interest to others.

I’ve been a long-term user of Google’s Cloud Build and like the simplicity (everything’s a container, a lot!). Because I’m using GitHub repos, I’ve been using GitHub Actions to (re)build containers on pushes and GitHub Container Registry (GHCR) to store the results. I know that Google provides analogs for GitHub repos and (forces me to use) Artifact Registry (to deploy my Cloud Run services) but, even though I dislike GitHub Actions, it’s really easy to do everything in one place.

Using Google's Public Certificate Authority with Golang autocert

Last year, I wrote about using Automatic Certs w/ Golang gRPC service on Compute Engine. That solution uses ACME with (the wonderful) Let’s Encrypt. Google is offering a private preview of Automate Public Certificates Lifecycle Management via RFC 8555 (ACME) and, because I’m using Google Cloud Platform extensively to build a “thing” and I think it would be useful to have a backup to Let’s Encrypt, I thought I’d give the solution a try. You’ll need to sign up for the private preview for what follows to work.

Prometheus HTTP Service Discovery of Cloud Run services

Some time ago, I wrote about using Prometheus Service Discovery w/ Consul for Cloud Run and also Scraping metrics exposed by Google Cloud Run services that require authentication. Both solutions remain viable but they didn’t address another use case for Prometheus and Cloud Run services that I have with a “thing” that I’ve been building.

In this scenario, I want to:

- Configure Prometheus to scrape Cloud Run service metrics

- Discover Cloud Run services dynamically

- Authenticate to Cloud Run using Firebase Auth ID tokens
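The discovery requirement in the list above could be met with an endpoint that returns Prometheus' HTTP service discovery format: a JSON list of target groups, each with `targets` and optional `labels`. The following is a minimal sketch under assumptions, not the post's implementation: the service names and URLs are hypothetical, the `service` label is an arbitrary choice, and authentication is not covered.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"sort"
	"strings"
)

// TargetGroup mirrors one entry of the HTTP SD response format.
type TargetGroup struct {
	Targets []string          `json:"targets"`
	Labels  map[string]string `json:"labels,omitempty"`
}

// targetGroups converts a map of service name to service URL into
// target groups, sorted by name so that the output is stable.
func targetGroups(services map[string]string) []TargetGroup {
	names := make([]string, 0, len(services))
	for name := range services {
		names = append(names, name)
	}
	sort.Strings(names)

	groups := make([]TargetGroup, 0, len(names))
	for _, name := range names {
		// Prometheus targets are host:port, so drop the scheme.
		host := strings.TrimPrefix(services[name], "https://")
		groups = append(groups, TargetGroup{
			Targets: []string{host + ":443"},
			Labels:  map[string]string{"service": name},
		})
	}
	return groups
}

// handler serves the target groups as JSON for Prometheus to poll.
func handler(services map[string]string) http.HandlerFunc {
	return func(w http.ResponseWriter, _ *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		if err := json.NewEncoder(w).Encode(targetGroups(services)); err != nil {
			http.Error(w, err.Error(), http.StatusInternalServerError)
		}
	}
}

func main() {
	// Hypothetical service; a real list would come from the Cloud Run API.
	services := map[string]string{"foo": "https://foo-abc123-uc.a.run.app"}
	b, err := json.Marshal(targetGroups(services))
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(string(b))
}
```

To serve the list, `handler` could be registered with `http.Handle` and the resulting URL referenced from an `http_sd_configs` entry in the Prometheus configuration.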

These requirements, and one other, present several challenges:

Automatic Certs w/ Golang gRPC service on Compute Engine

I needed to deploy a TLS-enabled gRPC service with health checking. Fortunately, most (all?) of the gRPC SDKs include a health-checking implementation, e.g. Golang has grpc-go/health.

I learned in my travels that:

- DigitalOcean App Platform does not work with TLS-based gRPC apps.

- Fly has a regression that breaks gRPC.

So, I resorted to Google Cloud Platform (GCP). Although Cloud Run would be well-suited to running the gRPC app, it uses a proxy|sidecar to provision a cert for the app and I wanted to be able to (easily) use a custom domain and give myself a somewhat general-purpose solution.

Firebase Auth authorized domains

I’m using Firebase Authentication in a project to authenticate users of various OAuth2 identity systems. Firebase Authentication requires a set of Authorized Domains.

The (web) app that interacts with Firebase Authentication is deployed to Cloud Run. The Authorized Domains list must include the app’s Cloud Run service URL.

Cloud Run service URLs vary by Project (ID). They are a combination of the service name, a hash (?) of the Project (ID) and .a.run.app.

Using `gcloud ... --format` with arbitrary returned data

If you use jq, you’ll know that its documentation uses examples that you can try locally or using the excellent jqplay:

    printf "[1,2,3]" | jq '.[1:]'
    [
      2,
      3
    ]

If you use gcloud, the Google Cloud Platform (GCP) CLI, you’ll know that this powerful tool can format results as JSON (--format=json), YAML (--format=yaml) etc. and includes a set of so-called projections that you can use to format the returned data.

There is a comparable slice projection that you may use with gcloud and the documentation even includes an example:

Golang Structured Logging w/ Google Cloud Logging (2)

UPDATE There’s an issue with my naive implementation of RenderValuesHook as described in this post. I summarized the problem in this issue where I’ve outlined (hopefully) a more robust solution.

Recently, I described how to configure Golang logging so that user-defined key-values applied to the logs are parsed when ingested by Google Cloud Logging.

Here’s an example of what we’re trying to achieve. This is an example Cloud Logging log entry that incorporates user-defined labels (see dog:freddie and foo:bar) and a readily queryable jsonPayload: