`curl`'ing a Tailscale Webhook

Tailscale is really good. I’ve been using it as a virtual private network to span 2 home networks and to securely (!) access my hosts when I’m remote.

Tailscale recently added webhooks, which let you process the Tailscale events you’ve subscribed to. I’m always a sucker for a webhook ;-)

Here’s a `curl` command that sends a signed test event to a Tailscale webhook endpoint:

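```shell
URL=""    # your webhook endpoint, without the https:// scheme
SECRET="" # the webhook's signing secret

# From Tailscale's docs
# https://tailscale.com/kb/1213/webhooks/#events-payload
BODY='
[
  {
    "timestamp": "2022-09-21T13:37:51.658918-04:00",
    "version": 1,
    "type": "test",
    "tailnet": "example.com",
    "message": "This is a test event",
    "data": null
  }
]
'

# Sign "{timestamp}.{body}" with HMAC-SHA256.
# BODY is passed as an argument, not as printf's format string,
# so any % or \ in it is left alone.
# -r prints "<hex> *stdin", so the first 64 characters are the hex digest.
T=$(date +%s)
V=$(\
  printf '%s.%s' "${T}" "${BODY}" \
  | openssl dgst -sha256 -hmac "${SECRET}" -hex -r \
  | head --bytes=64)

curl \
--request POST \
--header "Tailscale-Webhook-Signature:t=${T},v1=${V}" \
--header "Content-Type: application/json" \
--data "${BODY}" \
"https://${URL}"
```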

There must be a better way of extracting the hashed value from the openssl output.
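One alternative, sketched here assuming `openssl`, `od` and `tr` are available: ask `openssl` for the raw MAC (`-binary`) and hex-encode it with `od`, so nothing depends on the layout of `openssl dgst`'s text output (which can differ between OpenSSL versions). `verify_signature` is a hypothetical receiver-side counterpart; it assumes the header value has the `t=<timestamp>,v1=<signature>` shape used in the `curl` command above.

```shell
# Sketch: hex-encoded HMAC-SHA256 without parsing openssl's text output.
# Assumes openssl, od and tr are on the PATH.

# hmac_sha256_hex SECRET MESSAGE
hmac_sha256_hex() {
  # -binary emits the raw 32-byte MAC; od prints it as hex bytes and
  # tr removes od's spaces and line breaks, leaving 64 hex characters.
  printf '%s' "$2" \
  | openssl dgst -sha256 -hmac "$1" -binary \
  | od -A n -v -t x1 \
  | tr -d ' \n'
}

# verify_signature SECRET HEADER_VALUE BODY (hypothetical helper)
# Assumes HEADER_VALUE looks like "t=<timestamp>,v1=<hex signature>".
# Note: sets the globals ts and sig.
verify_signature() {
  ts=${2#t=}       # drop the leading "t="
  ts=${ts%%,*}     # keep everything before the first comma
  sig=${2#*v1=}    # drop everything up to and including "v1="
  sig=${sig%%,*}   # ignore any fields that might follow
  [ "${sig}" = "$(hmac_sha256_hex "$1" "${ts}.$3")" ]
}
```

With these, the signing step becomes `V=$(hmac_sha256_hex "${SECRET}" "${T}.${BODY}")`. A real receiver would also want a constant-time comparison and a check that the timestamp is recent.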

The curious cases of the `deleted:serviceAccount`

While testing Firestore export and import yesterday and checking the IAM permissions on a Cloud Storage Bucket, I noticed some member values (I think Google now refers to these as principals) that were logical but unfamiliar to me:

```
deleted:serviceAccount:{email}?uid={uid}
```

I was using `gsutil iam get gs://${BUCKET}` because I’d realized (and this is another useful lesson) that, while creating daily test projects, I’d been binding each project’s Firestore Service Account (`service-{project-number}@gcp-sa-firestore.iam.gserviceaccount.com`) to a Bucket owned by another Project, but I hadn’t been deleting the binding when I deleted the Project.
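A small sketch for spotting these leftovers: `gsutil iam get` prints the Bucket's policy as JSON, so the `deleted:serviceAccount:` members can be pulled out with `grep`. The function name is hypothetical, and the pattern assumes member strings contain no double quotes.

```shell
# Sketch: list "deleted:serviceAccount:..." members from an IAM policy (JSON) on stdin.
# Usage (assumes gsutil is installed and BUCKET is set):
#   gsutil iam get "gs://${BUCKET}" | deleted_service_accounts
deleted_service_accounts() {
  # -o prints only the matching quoted strings; tr strips the quotes;
  # sort -u collapses members that appear under several roles.
  grep -oE '"deleted:serviceAccount:[^"]+"' \
  | tr -d '"' \
  | sort -u
}
```

To avoid creating them in the first place, the binding can be removed before the Project is deleted, e.g. with `gsutil iam ch -d serviceAccount:${EMAIL} gs://${BUCKET}` (check `gsutil help iam` for the exact form).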

Firestore Export & Import

I’m using Firestore to maintain state in my “thing”.

In an attempt to ensure that I’m able to restore the database, I run scheduled backups with Cloud Scheduler (see Automating Scheduled Firestore Exports), and I’ve been testing imports to ensure that the process works.

It does.

I thought I’d document an important but subtle consideration with Firestore exports (which I’d not initially understood).

Google facilitates that backup process with the sibling commands:

Basic programmatic access to GitHub Issues

It’s been a while!

I’ve been spending time writing Bash scripts and a web site, but neither has felt sufficiently creative to be worth a blog post.

As I’ve been finalizing the web site, I needed an Issue Tracker and decided to leverage GitHub(’s Issues).

As a former Googler, I’m familiar with Google’s (excellent) internal issue tracking tool (Buganizer) and its public manifestation, Issue Tracker. Google documents Issue Tracker and its Issue type, which I’ve mercilessly plagiarized in my implementation.
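For basic programmatic access, here’s a minimal sketch of GitHub’s REST endpoints for Issues using `curl`. It assumes a token in `GITHUB_TOKEN` with access to the repository; the function names are hypothetical, and `create_issue` assumes the title and body contain no double quotes or backslashes because they are spliced into the JSON verbatim.

```shell
# Sketch: list and create GitHub Issues via the REST API.
# Assumes GITHUB_TOKEN is set; function names are illustrative.
GITHUB_API="https://api.github.com"

# issues_url OWNER REPO
issues_url() {
  printf '%s/repos/%s/%s/issues' "${GITHUB_API}" "$1" "$2"
}

# list_issues OWNER REPO -> JSON array of open issues (first page only)
list_issues() {
  curl --silent \
  --header "Accept: application/vnd.github+json" \
  --header "Authorization: Bearer ${GITHUB_TOKEN}" \
  "$(issues_url "$1" "$2")?state=open"
}

# create_issue OWNER REPO TITLE BODY
create_issue() {
  curl --silent \
  --request POST \
  --header "Accept: application/vnd.github+json" \
  --header "Authorization: Bearer ${GITHUB_TOKEN}" \
  --data "{\"title\":\"$3\",\"body\":\"$4\"}" \
  "$(issues_url "$1" "$2")"
}
```

Two things worth knowing: this endpoint also returns pull requests (they carry a `pull_request` key), and results are paginated (30 per page by default).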

Secure (TLS) gRPC services with VKE

NOTE `cert-manager` is a better solution to what follows.

I’ve a need to deploy a Vultr Kubernetes Engine (VKE) cluster on a daily basis (create and delete within a few hours) and expose (securely|TLS) a gRPC service.

I have an existing solution, Automatic Certs w/ Golang gRPC service on Compute Engine, that combines gRPC Health Checking with an ACME service, and I decided to reuse it.

In order for it to work, we need:

Vultr CLI and JSON output

I’ve begun exploring Vultr after the company announced a managed Kubernetes offering Vultr Kubernetes Engine (VKE).

In my brief experience, it’s a decent platform and its CLI vultr-cli is mostly (!) good. The CLI has a limitation in that command output is text formatted and this makes it challenging to parse the output when scripting.

NOTE The Vultr developers have a branch `rewrite` that includes a solution to this problem.

Example

```
ID 12345678-90ab-cdef-1234-567890abcdef
LABEL test
DATE CREATED 2022-01-01T00:00:00+00:00
CLUSTER SUBNET 255.255.255.255/16
SERVICE SUBNET 255.255.255.255/12
IP 255.255.255.255
ENDPOINT 12345678-90ab-cdef-1234-567890abcdef.vultr-k8s.com
VERSION v1.23.5+3
REGION mars
STATUS pending

NODE POOLS
ID 12345678-90ab-cdef-1234-567890abcdef
DATE CREATED 2022-01-01T00:00:00+00:00
DATE UPDATED 2022-01-01T00:00:00+00:00
LABEL nodepool
TAG foo
PLAN vc2-1c-2gb
STATUS pending
NODE QUANTITY 1
AUTO SCALER false
MIN NODES 1
MAX NODES 1

NODES
ID DATE CREATED LABEL STATUS
12345678-
```

Until that’s available, I’m lazily writing very simple bash scripts to convert vultr-cli command output to JSON. The repo is vultr-cli-format.
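The gist of such a script, sketched here rather than taken from vultr-cli-format: match each line of the example output above against the known (possibly multi-word) keys and emit a flat JSON object. It assumes key and value are separated by at least one space, that the key list below covers the cluster section, and that values contain no double quotes; it stops at `NODE POOLS` rather than handling the nested sections.

```shell
# Sketch: turn the cluster-level "KEY VALUE" lines of vultr-cli's text output
# into a flat JSON object. Assumptions (not vultr-cli-format's actual code):
# keys come from the fixed list below, values contain no double quotes,
# and parsing stops at the nested "NODE POOLS" section.
cluster_to_json() {
  awk '
    BEGIN {
      n = split("DATE CREATED,CLUSTER SUBNET,SERVICE SUBNET,ID,LABEL,IP,ENDPOINT,VERSION,REGION,STATUS", keys, ",")
      printf "{"
      sep = ""
    }
    /^NODE POOLS/ { exit }   # nested sections are out of scope here
    {
      for (i = 1; i <= n; i++) {
        k = keys[i]
        # index() == 1 means the line starts with "<key> "
        if (index($0, k " ") == 1) {
          v = substr($0, length(k) + 1)
          sub(/^[ \t]+/, "", v)      # trim the separating whitespace
          gsub(/ /, "_", k)          # "DATE CREATED" becomes "DATE_CREATED"
          printf "%s\"%s\":\"%s\"", sep, k, v
          sep = ","
          break
        }
      }
    }
    END { print "}" }
  '
}
```

Piping the cluster output into `cluster_to_json` yields something like `{"ID":"...","LABEL":"test",...}` that `jq` can then query.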

Automating HackMD documents

I was introduced to HackMD while working on an open-source project. It’s a collaborative editing tool for Markdown documents, and there’s an API.

I wanted to be able to programmatically edit one of my documents with a daily update. The API is easy to use; my only challenge was futzing with escape characters in the bash strings representing the document’s Markdown content.

You’ll need a HackMD account and an API token (Create API Token) that I’ll refer to as TOKEN.
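One way around the escaping, sketched here: build the JSON string from the Markdown file with `sed` and `awk` rather than by hand. The `PATCH /v1/notes/{noteId}` call with a `content` field reflects my reading of HackMD’s API docs and is worth checking against them; `NOTE_ID` and the function names are hypothetical, and tabs or other control characters are not escaped.

```shell
# Sketch: JSON-encode Markdown from stdin and use it to update a HackMD note.
# Assumes TOKEN (a HackMD API token) is set; NOTE_ID is a hypothetical note ID.

# json_string: raw text on stdin -> one quoted JSON string on stdout.
# Escapes backslashes first (so the quote escapes aren't doubled), then
# double quotes, then joins lines with a literal \n.
# Tabs and other control characters are not handled.
json_string() {
  sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' \
  | awk 'BEGIN { printf "\"" } { printf "%s%s", (NR > 1 ? "\\n" : ""), $0 } END { printf "\"" }'
}

# update_note NOTE_ID FILE
update_note() {
  curl --silent \
  --request PATCH \
  --header "Authorization: Bearer ${TOKEN}" \
  --header "Content-Type: application/json" \
  --data "{\"content\":$(json_string < "$2")}" \
  "https://api.hackmd.io/v1/notes/$1"
}
```

Usage would be along the lines of `update_note "${NOTE_ID}" daily.md`.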

Prometheus Exporters for fly.io and Vultr

I’ve been on a roll building utilities this week. I developed a Service Health dashboard for my “thing”, a Prometheus Exporter for Fly.io and today, a Prometheus Exporter for Vultr. This is motivated by the fear that I will forget a deployed Cloud resource and incur a horrible bill.

I’ve now written several Prometheus Exporters for cloud platforms:

- Prometheus Exporter for GCP

- Prometheus Exporter for Linode

- Prometheus Exporter for Fly.io

- Prometheus Exporter for Vultr

Each of them monitors resource deployments and produces resource count metrics that can be scraped by Prometheus and alerted on with Alertmanager. I have Alertmanager configured to send notifications to Pushover. Last week I also wrote an integration that lets Google Cloud Monitoring send notifications to Pushover.
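For a sense of what a resource count metric looks like when scraped, here’s a sketch that renders one count in Prometheus’s text exposition format. The metric and label names are hypothetical, not the names these exporters actually use.

```shell
# Sketch: print one gauge sample in the Prometheus text exposition format.
# The metric and label names in the usage below are hypothetical.

# gauge NAME HELP LABELS VALUE
gauge() {
  printf '# HELP %s %s\n# TYPE %s gauge\n%s{%s} %s\n' "$1" "$2" "$1" "$1" "$3" "$4"
}
```

For example, `gauge vultr_instances_count "Number of Vultr instances" 'region="ewr"' 2` prints:

```
# HELP vultr_instances_count Number of Vultr instances
# TYPE vultr_instances_count gauge
vultr_instances_count{region="ewr"} 2
```

An alerting rule could then fire on an expression such as `sum(vultr_instances_count) > 0`.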

Using Google Monitoring Alerting to send Pushover notifications


Artifacts

- GitHub: go-gcp-pushover-notificationchannel
- Image: ghcr.io/dazwilkin/go-gcp-pushover-notificationchannel:220515

Pushover

When you log in to your Pushover account, you’re presented with a summary (dashboard) page that includes Your User Key. Copy the value of this key into a variable called PUSHOVER_USER.

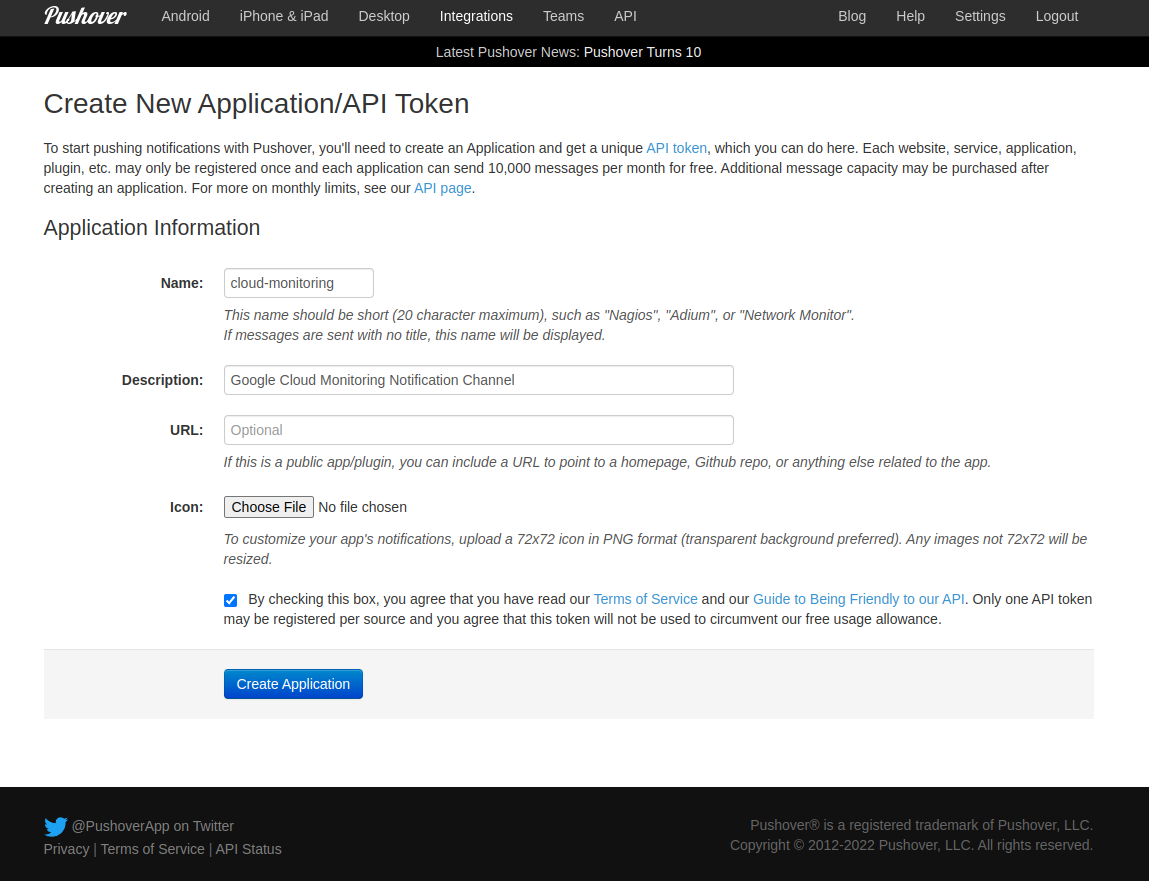

Create New Application|API Token

The Pushover API has a Pushing Messages method. The documentation describes the format of the HTTP request: it must be a POST using TLS (https://) to https://api.pushover.net/1/messages.json, and the content type should be application/json. In the JSON body of the message, we include token (PUSHOVER_TOKEN), user (PUSHOVER_USER), device (we’ll use cloud-monitoring), a title and a message.
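That request as a `curl` sketch. It assumes `PUSHOVER_TOKEN` and `PUSHOVER_USER` hold the application token and user key from the steps above, and that the title and message contain no double quotes or backslashes; the function names are hypothetical.

```shell
# Sketch: send a Pushover message as JSON with curl.
# Assumes PUSHOVER_TOKEN (application token) and PUSHOVER_USER (user key) are set,
# and that title/message contain no double quotes or backslashes.

# pushover_body TITLE MESSAGE -> JSON request body
pushover_body() {
  printf '{"token":"%s","user":"%s","device":"cloud-monitoring","title":"%s","message":"%s"}' \
    "${PUSHOVER_TOKEN}" "${PUSHOVER_USER}" "$1" "$2"
}

# pushover_send TITLE MESSAGE
pushover_send() {
  curl --silent \
  --request POST \
  --header "Content-Type: application/json" \
  --data "$(pushover_body "$1" "$2")" \
  https://api.pushover.net/1/messages.json
}
```

For example, `pushover_send "Cloud Monitoring" "Test alert"`.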

Cloud Run custom domain mappings

I have several Cloud Run services that I want to map to a domain.

During development, I create a Google Cloud Platform (GCP) project each day into which everything is deployed. This means that, every day, the Cloud Run services get new URLs that I can’t infer in advance. I thought this would be tedious to manage because:

- My DNS service isn’t programmable (I know!)

- Cloud Run services have non-inferable (by me) URLs

i.e. I thought I’d have to manually update the DNS entries each day.