Podman

I’ve read about Podman and been intrigued by it but never taken the time to install it and play around. This morning, walking with my dog, I listened to the almost-always-interesting Kubernetes Podcast and two of the principals behind Podman were on the show to discuss it.

I decided to install it and use it in this week’s project.

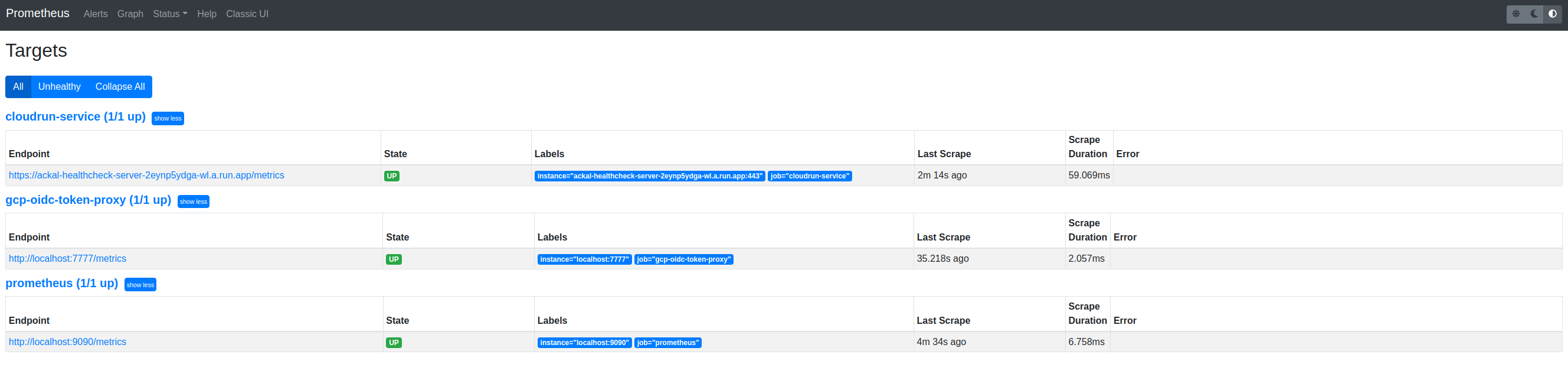

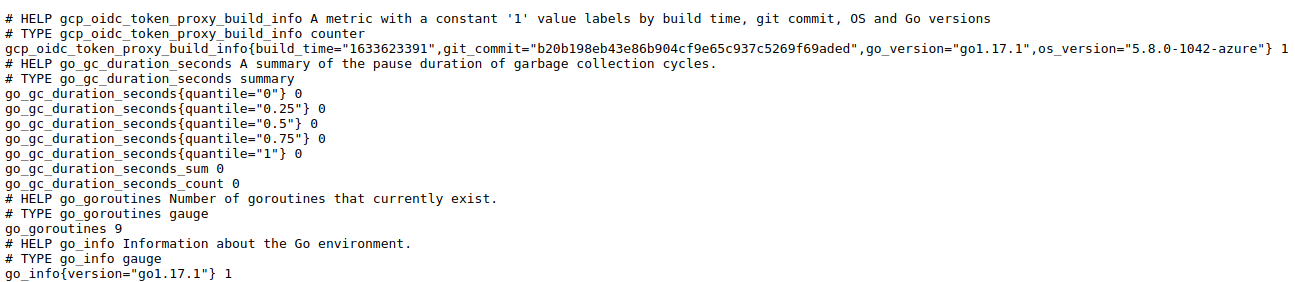

Here’s a working Podman deployment for gcp-oidc-token-proxy:

ACCOUNT="..."
ENDPOINT="..."

# Can't match container name i.e. prometheus
POD="foo"

SECRET="${ACCOUNT}"

podman secret create ${SECRET} ${PWD}/${ACCOUNT}.json

# Pod publishes pod-port:container-port
podman pod create \
  --name=${POD} \
  --publish=9091:9090 \
  --publish=7776:7777

PROMETHEUS=$(mktemp)

# Important
chmod go+r ${PROMETHEUS}

sed \
  --expression="s|some-service-xxxxxxxxxx-xx.a.run.app|${ENDPOINT}|g" \
  ${PWD}/prometheus.yml > ${PROMETHEUS}

# Prometheus
# Requires --tty
# Can't include --publish but exposes 9090
podman run \
  --detach --rm --tty \
  --pod=${POD} \
  --name=prometheus \
  --volume=${PROMETHEUS}:/etc/prometheus/prometheus.yml \
  docker.io/prom/prometheus:v2.30.2 \
  --config.file=/etc/prometheus/prometheus.yml \
  --web.enable-lifecycle

# GCP OIDC Token Proxy
# Can't include --publish but exposes 7777
podman run \
  --detach --rm \
  --pod=${POD} \
  --name=gcp-oidc-token-proxy \
  --secret=${SECRET} \
  --env=GOOGLE_APPLICATION_CREDENTIALS=/run/secrets/${SECRET} \
  ghcr.io/dazwilkin/gcp-oidc-token-proxy:ec8fa9d9ab1b7fa47448ff32e34daa0c3d211a8d \
  --port=7777
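To make the sed step concrete, here is a minimal sketch of the same substitution applied to a single line. The function name render_config, the sample scrape target line and the replacement host are all hypothetical; the real prometheus.yml isn't shown in full here and may well look different.

```shell
#!/usr/bin/env bash

# Hypothetical helper: replace the placeholder Cloud Run host with the
# endpoint passed as the first argument. Reads stdin, writes stdout.
render_config() {
  local endpoint="$1"
  sed --expression="s|some-service-xxxxxxxxxx-xx.a.run.app|${endpoint}|g"
}

# Hypothetical input line and endpoint, for illustration only.
printf '%s\n' '- targets: ["some-service-xxxxxxxxxx-xx.a.run.app:443"]' \
| render_config "example-abc123-uc.a.run.app"
```

Reading from stdin keeps the helper independent of where the template and the temporary file live.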

The prometheus container includes a volume mount.

When the container is created, a volume is generated:

podman volume ls

DRIVER VOLUME NAME

local 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325

And if we query the container’s Mounts, we can see these match:

podman container inspect prometheus \

| jq -r '.[].Mounts[]|select(.Type=="volume").Name'

6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325


Explore

Let’s explore more of what’s been created for us.

podman container ls \

--filter="pod=${POD}" \

--format="{{.ID}} {{.Image}}"

701f0f9ec469 k8s.gcr.io/pause:3.5

d5b73b5def12 docker.io/prom/prometheus:v2.30.2

85417e0e7ef2 ghcr.io/dazwilkin/gcp-oidc-token-proxy:ec8fa9d9ab1b7fa47448ff32e34daa0c3d211a8d

And importantly, the Pod’s published ports are applied to each container in the Pod:

podman container ls \

--filter=pod=${POD} \

--format="{{.Ports}}\t{{.Names}}"

0.0.0.0:7776->7777/tcp, 0.0.0.0:9091->9090/tcp 4396f02f6b1c-infra

0.0.0.0:7776->7777/tcp, 0.0.0.0:9091->9090/tcp gcp-oidc-token-proxy

0.0.0.0:7776->7777/tcp, 0.0.0.0:9091->9090/tcp prometheus

This makes sense because the Pod publishes these ports, but there’s no mapping between an individual container’s port and the Pod’s port. However, this breaks when using generate kube (see below).
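Since the ports are published at the Pod level, the practical test is whether the host port answers. A minimal sketch, assuming Prometheus serves its health endpoint at /-/healthy and that curl is installed; prometheus_healthy is a hypothetical helper name:

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeed if Prometheus answers on the given host port.
# Assumes the Pod publishes host port 9091 to container port 9090.
prometheus_healthy() {
  local port="${1:-9091}"
  curl --silent --fail --output /dev/null "http://localhost:${port}/-/healthy"
}

# Usage (requires the Pod to be running):
#   prometheus_healthy 9091 && echo "Prometheus reachable on host port 9091"
```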

One (!?) way to enumerate the containers belonging to a Pod is:

podman container ls --filter="pod=${POD}"

And, of course:

podman container logs prometheus

And interestingly:

podman pod logs ${POD}

And slightly weirdly (since container names presumably are unique):

podman pod logs --container=prometheus ${POD}

I feel as though there should be a way to create a Pod, with its containers and more specific port mappings, with a single command.

Export (and Import?)

This doesn’t work although I expected it to do so.

I can export a Podman Pod a la Kubernetes Pod spec:

podman generate kube ${POD} > ${POD}.$(date +%y%m%d).yml

Yields:

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: foo
  name: foo
spec:
  containers:
  - name: gcp-oidc-token-proxy
    image: ghcr.io/dazwilkin/gcp-oidc-token-proxy:ec8fa9d9ab1b7fa47448ff32e34daa0c3d211a8d
    command:
    - /proxy
    args:
    - --port=7777
    env:
    - name: GOOGLE_APPLICATION_CREDENTIALS
      value: /run/secrets/oidc-token-proxy
    ports:
    - containerPort: 9090
      hostPort: 9091
      protocol: TCP
    - containerPort: 7777
      hostPort: 7776
      protocol: TCP
  - name: prometheus
    image: docker.io/prom/prometheus:v2.30.2
    command:
    - /bin/prometheus
    args:
    - --config.file=/etc/prometheus/prometheus.yml
    - --web.enable-lifecycle
    tty: true
    volumeMounts:
    - name: home-dazwilkin-Projects-gcp-oidc-token-proxy-prometheus.2110110850.tmp-host-0
      mountPath: /etc/prometheus/prometheus.yml
    - name: 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325-pvc
      mountPath: /prometheus
  volumes:
  - name: home-dazwilkin-Projects-gcp-oidc-token-proxy-prometheus.2110110850.tmp-host-0
    hostPath:
      path: /path/to/prometheus.tmp
      type: File
  - name: 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325-pvc
    persistentVolumeClaim:
      claimName: 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325

I’ve tidied this to better reflect how I write Pod specs: name is the key, and I like to start list items with it, particularly when collapsing them.

I’ve removed some default values too (that I think aren’t required).

Curiosities

- The Pod has a Metadata name ${POD} and label app: ${POD}

- The Secret, referenced by the gcp-oidc-token-proxy, appears to be unreferenced in the Pod config.

- The ports section (defined with podman pod create) appears only once and under (in this case) gcp-oidc-token-proxy.

- The Pod container for prometheus has 2 volumeMounts instead of the one that was defined

Let’s delete the existing Pod and recreate it using the Pod spec:

# Reset

podman pod stop ${POD} && \

podman pod rm ${POD}

podman play kube ${POD}.$(date +%y%m%d).yml

NOTE The Volume is deleted when the Pod is stopped.

Yields:

Pod:

36a26ee79df25af2931425eefd6e8f51e9f4302710982684c8170769fddc8281

Containers:

321bcd5a3fb68c70352e2ebe5befda9fb253767cd47e5913cb11e8618c119367

4914e79214491f1f1ee3bf3d27a2254feaf9cab433605d47df0089d6952d53ff
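Both the generate and the play commands compute the file name with date, so they would disagree if run on either side of midnight. A minimal sketch that computes the name once and reuses it; spec_file and SPEC are hypothetical names of my own:

```shell
#!/usr/bin/env bash

# Hypothetical helper: build the spec file name (<pod>.<yymmdd>.yml) once
# so that generate and play refer to the same file.
spec_file() {
  local pod="$1"
  printf '%s.%s.yml' "${pod}" "$(date +%y%m%d)"
}

# Usage (requires Podman and an existing Pod):
#   SPEC="$(spec_file "${POD}")"
#   podman generate kube "${POD}" > "${SPEC}"
#   podman pod stop "${POD}" && podman pod rm "${POD}"
#   podman play kube "${SPEC}"
```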

Interestingly, the Metadata name ${POD} is now prefixed to the container’s name:

podman container ls \

--filter="pod=${POD}" \

--format="{{.Names}}"

1487f78f3a54-infra

foo-prometheus

foo-gcp-oidc-token-proxy

So we must update references to prometheus to foo-prometheus and references to gcp-oidc-token-proxy to foo-gcp-oidc-token-proxy. I assume the prefix is there to tie the containers to the Pod, but it feels redundant and it’s a side effect of the import that appears unnecessary.
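A small sketch of how scripts could absorb the rename, assuming the prefix is always the Pod name followed by a hyphen (which is all I have observed here); played_name is a hypothetical helper:

```shell
#!/usr/bin/env bash

# Hypothetical helper: derive the container name that play kube produced
# from the Pod name and the original container name.
played_name() {
  local pod="$1" container="$2"
  printf '%s-%s' "${pod}" "${container}"
}

# Usage (requires Podman and a played Pod):
#   podman container logs "$(played_name "${POD}" prometheus)"
```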

And:

podman container ls \

--filter="pod=${POD}" \

--format="{{.Image}}\t{{.Ports}}"

k8s.gcr.io/pause 0.0.0.0:7776->7777/tcp, 0.0.0.0:9091->9090/tcp

ghcr.io/dazwilkin/gcp-oidc-token-proxy 0.0.0.0:7776->7777/tcp, 0.0.0.0:9091->9090/tcp

docker.io/prom/prometheus 0.0.0.0:7776->7777/tcp, 0.0.0.0:9091->9090/tcp

Which is good (because it represents the original configuration) but it’s not what the configuration file described. In the following snippet, the ports are defined only for the container gcp-oidc-token-proxy. There are no ports under prometheus and yet the configuration plays correctly.

I assume this is because, with Podman, there can be only one hostPort with the value 9091: the host has a single port 9091. podman pod create --publish doesn’t say which container owns each port, so Podman cannot distribute the ports across containers correctly. Instead, it must aggregate the ports (as defined by pod create) and then apply them to every container in the Pod.

apiVersion: v1
kind: Pod
spec:
  containers:
  - name: gcp-oidc-token-proxy
    ports:
    - containerPort: 9090
      hostPort: 9091
      protocol: TCP
    - containerPort: 7777
      hostPort: 7776
      protocol: TCP
  - name: prometheus

There is no obvious reference to the Secret that is associated with gcp-oidc-token-proxy. When play’d, it fails because the value for GOOGLE_APPLICATION_CREDENTIALS is expected to be mounted from the Secret (and there is none):

podman container logs foo-gcp-oidc-token-proxy

2021/10/11 17:00:08 handler: "caller"={"file":"main.go","line":116} "error"="google: error getting credentials using GOOGLE_APPLICATION_CREDENTIALS environment variable: open /run/secrets/oidc-token-proxy: no such file or directory"

So that’s a problem!
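To rule out that the Secret itself has gone missing from Podman’s store (rather than just from the generated spec), a minimal sketch that relies on podman secret inspect returning a non-zero exit code for an unknown name; secret_exists is a hypothetical helper:

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeed if a Secret with the given name is known
# to Podman. Output is discarded; only the exit status matters.
secret_exists() {
  local name="$1"
  podman secret inspect "${name}" > /dev/null 2>&1
}

# Usage (requires Podman):
#   secret_exists "${SECRET}" && echo "Secret ${SECRET} is still in the store"
```

If the Secret is still present, the failure is down to the spec not referencing it rather than the Secret having been removed.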

The volumeMounts which mount volumes are correctly defined as part of the prometheus container. However, there are 2 volumeMounts entries when only one volume was added to the original container:

apiVersion: v1
kind: Pod
spec:
  containers:
  - name: []
  - name: prometheus
    volumeMounts:
    - name: path-to-prometheus.tmp-host-0
      mountPath: /etc/prometheus/prometheus.yml
    - name: 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325-pvc
      mountPath: /prometheus
  volumes:
  - name: path-to-prometheus.tmp-host-0
    hostPath:
      path: /path/to/prometheus.tmp
      type: File
  - name: 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325-pvc
    persistentVolumeClaim:
      claimName: 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325

And:

podman volume ls

DRIVER VOLUME NAME

local 6ddf9599d09d43359d9636c7a44f57d691df86f0e55bdc6d5af61bdbe6afe325

I don’t understand why 2 volume[Mount]s are required. Let’s try deleting the Pod again and recreating without the persistentVolumeClaim:

podman container logs foo-prometheus

...

level=info ts=2021-10-11T16:59:35.999Z caller=main.go:794 msg="Server is ready to receive web requests."

Hmmm, it appears to work without the persistentVolumeClaim. This is understandable since the remaining volume and volumeMount appear to correctly define a volume for prometheus.yml and then mount it into the container.
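One possible explanation for the second volumeMount, which I haven’t confirmed: the Prometheus image may itself declare a volume at /prometheus, in which case Podman would create an anonymous volume for it even though the run command only asks for one mount. A minimal sketch for checking what an image declares, assuming podman image inspect exposes the image configuration under .Config.Volumes; image_volumes is a hypothetical helper name:

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the volumes declared by an image's own
# configuration (assumption: available as .Config.Volumes).
image_volumes() {
  local image="$1"
  podman image inspect --format '{{.Config.Volumes}}' "${image}"
}

# Usage (requires Podman and the image to be present locally):
#   image_volumes docker.io/prom/prometheus:v2.30.2
```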

Unfortunately, play’ing this Pod config is unsuccessful (the Secret is not mounted) and I’m unsure whether it could be corrected to work for this app.

Debug

podman run \

--name=busybox \

--detach --rm \

--secret=${ACCOUNT} \

--volume=${PROMETHEUS}:/etc/prometheus/prometheus.yml \

docker.io/busybox \

ash -c "while true; do sleep 30; done"

podman container exec \

--interactive --tty \

busybox ash

And:

more /etc/prometheus/prometheus.yml

global:

scrape_interval: 5m

# evaluation_interval: 5m

scrape_configs:

# Self

- job_name: "prometheus"

And:

more /run/secrets/oidc-token-proxy

{

"type": "service_account",

...

}