Fly.io

I spent some time over the weekend understanding Fly.io. It’s always fascinating to me how many smart people are building really neat solutions. Fly.io is subtly different from the other platforms that I use (Kubernetes, GCP, DO, Linode) and I’ve found the Fly.io team to be highly responsive and helpful with my noob questions.

One of the team’s posts, Docker without Docker, surfaced in my Feedly feed (hackernews) and it piqued my interest.

I’ve a basic implementation of gRPC healthchecking that I deployed to Fly.io. The documentation is mostly excellent and there’s a quickstart Speedrun that may serve your purposes. Coming from different platforms, I found some differences in how developers interact with Fly.io that I’ll document here.

I’m loath to install anything on my machine and prefer to use containers or Snaps when possible. Fly.io’s CLI is called flyctl and can be run|aliased:

alias flyctl="\

docker run \

--interactive --tty --rm \

--volume=${PWD}/.fly:/.fly \

--volume=${PWD}/fly.toml:/fly.toml \

flyio/flyctl"

NOTE The volume mounts are for the CLI’s config (located in .fly) and the default app config (fly.toml). Because the alias is double-quoted, ${PWD} is expanded when the alias is defined, so define it from the directory where you want these files created.
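If you move between project directories, a shell function is one alternative to the alias, because it resolves ${PWD} on every call. Here is a minimal sketch, assuming bash or another POSIX shell. The helper name fly_prepare is hypothetical; the image and mounts are the ones from the alias. It also pre-creates both mount sources, since Docker creates a missing bind-mount source as a directory, which would leave fly.toml as a directory rather than a file. An empty fly.toml may trigger the CLI's overwrite prompt.

```shell
# Hypothetical helper: make sure the bind-mount sources exist with the right
# types before Docker sees them (a missing source is created as a directory).
fly_prepare() {
  mkdir -p "${PWD}/.fly" || return 1
  # Create an empty fly.toml only if nothing is there yet.
  [ -e "${PWD}/fly.toml" ] || : > "${PWD}/fly.toml"
}

# Same image and mounts as the alias, but ${PWD} is resolved at call time.
flyctl() {
  fly_prepare || return 1
  docker run \
    --interactive --tty --rm \
    --volume="${PWD}/.fly:/.fly" \
    --volume="${PWD}/fly.toml:/fly.toml" \
    flyio/flyctl "$@"
}
```

If the alias is already defined in your shell, run unalias flyctl before defining the function, otherwise the alias is expanded inside the function definition.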

The CLI works great but the app flow is different from what I’m used to. Rather than speccing an app and then deploying it, you need to create the app before you can deploy it. This is, in part, so that the app’s unique name can be created on the Fly.io service.

NOTE Be careful not to inadvertently overwrite an existing fly.toml with this approach. The CLI will prompt before overwriting but you’ll need to overwrite in order to create a new app.
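One way to make the overwrite less risky is to copy any existing fly.toml aside first. This is a minimal sketch, not something the CLI provides; backup_fly_toml is a hypothetical helper and the timestamped .bak naming is just my assumption of a reasonable scheme.

```shell
# Hypothetical helper: copy an existing fly.toml aside before letting the CLI
# overwrite it. Prints the backup path; does nothing (and succeeds) when there
# is no fly.toml yet. The body runs in a subshell so no variables leak.
backup_fly_toml() (
  src="${1:-fly.toml}"
  if [ -f "$src" ]; then
    dst="${src}.$(date +%Y%m%d%H%M%S).bak"
    cp -p "$src" "$dst" && printf '%s\n' "$dst"
  fi
)
```

Run backup_fly_toml in the project directory before creating the app, then accept the overwrite prompt.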

APP="..."

flyctl app create --app=${APP}

For simplicity, you can select None for the builder and accept the default port (8080).

The CLI should report:

New app created

Name = ${APP}

Organization = personal

Version = 0

Status =

Hostname = <empty>

App will initially deploy to [[somewhere]] region

Wrote config file fly.toml

NOTE The service locates the nearest data-center to your host. In my case, Seattle. YMMV.

I’ve then manually added config details to the fly.toml file:

app = "[[YOUR-APP]]"

kill_signal = "SIGINT"

kill_timeout = 5

[build]

image = "[[YOUR-IMAGE]]"

[experimental]

exec = [

"/server",

"--failure_rate=0.5",

"--changes_rate=15s",

"--grpc_endpoint=:50051",

"--prom_endpoint=:8080"

]

[[services]]

internal_port = 50051

protocol = "tcp"

[services.concurrency]

hard_limit = 25

soft_limit = 20

[[services.ports]]

handlers = []

port = "10001"

[[services]]

internal_port = 8080

protocol = "tcp"

[[services.ports]]

handlers = ["http"]

port = "80"

[[services.ports]]

handlers = ["http","tls"]

port = "443"

I’m deploying a container IMAGE but the service supports buildpacks, permitting you to provide Golang, Node|Deno, Python and Ruby sources to be built and deployed.

My image requires runtime flags so I’m using [experimental] and exec to define the runtime binary and args|flags.

My config has 2 [[services]] sections. The first defines the gRPC service. The second defines the Prometheus metrics.

For gRPC, the [[services]] value for internal_port of 50051 matches the container image’s flag --grpc_endpoint. The service is TCP and it exposes (publicly) one port 10001 which requires the raw TCP handler (handlers = []).

For Prometheus, the [[services]] value for internal_port of 8080 matches the container image’s flag --prom_endpoint. The service is TCP and it exposes (publicly) two ports: 80, which is insecure HTTP, and 443, which is HTTPS (HTTP with TLS). In practice, the insecure port 80 should be inaccessible but it’s present for testing.
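Since each internal_port has to line up with a flag in exec, a quick consistency check can catch typos before deploying. The following is a rough sketch using sed and grep rather than a real TOML parser, so it assumes the flat layout shown in this post (flags of the form --name=:port, one internal_port per line). All three function names are hypothetical.

```shell
# Print the port from a "--<flag>=:<port>" entry in the given config file.
flag_port() {
  sed -n "s/.*--$2=:\([0-9][0-9]*\).*/\1/p" "$1"
}

# Succeed if the file has an "internal_port = <port>" line for the given port.
has_internal_port() {
  grep -Eq "^[[:space:]]*internal_port[[:space:]]*=[[:space:]]*$2[[:space:]]*\$" "$1"
}

# Check that each named flag points at a port that some [[services]] declares.
# Usage: check_ports FILE FLAG [FLAG...]
check_ports() {
  file="$1"; shift
  status=0
  for flag in "$@"; do
    port="$(flag_port "$file" "$flag")"
    if [ -n "$port" ] && has_internal_port "$file" "$port"; then
      echo "ok: --${flag} -> internal_port ${port}"
    else
      echo "mismatch: --${flag} (port '${port}') has no matching internal_port"
      status=1
    fi
  done
  return "$status"
}
```

For the config in this post, the call would be check_ports fly.toml grpc_endpoint prom_endpoint, which returns non-zero if either flag has no matching [[services]] entry.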

Once the fly.toml is defined, the app can be deployed:

flyctl deploy --app=${APP}

Yields:

Deploying [[MY-APP]]

==> Validating app configuration

--> Validating app configuration done

Services

TCP 10001 ⇢ 50051

TCP 80 ⇢ 8080

Searching for image '[[MY-IMAGE]]' remotely...

image found: img_6nk3yvl35lvome79

Image: [[MY-IMAGE]]

Image size: 6.9 MB

==> Creating release

Release v0 created

You can detach the terminal anytime without stopping the deployment

Monitoring Deployment

1 desired, 1 placed, 1 healthy, 0 unhealthy

--> v0 deployed successfully

This can be confirmed through the CLI:

flyctl apps list

NAME OWNER STATUS LATEST DEPLOY

[[MY-APP]] personal running 1m ago

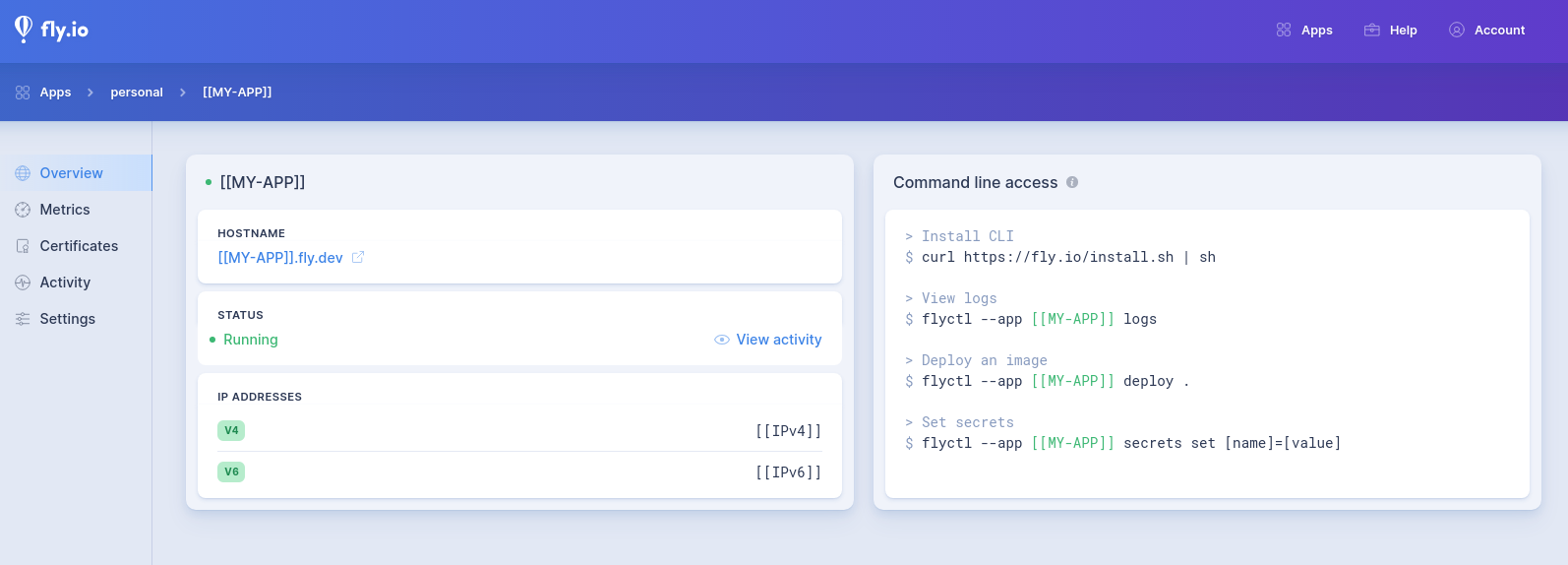

And through the service’s console:

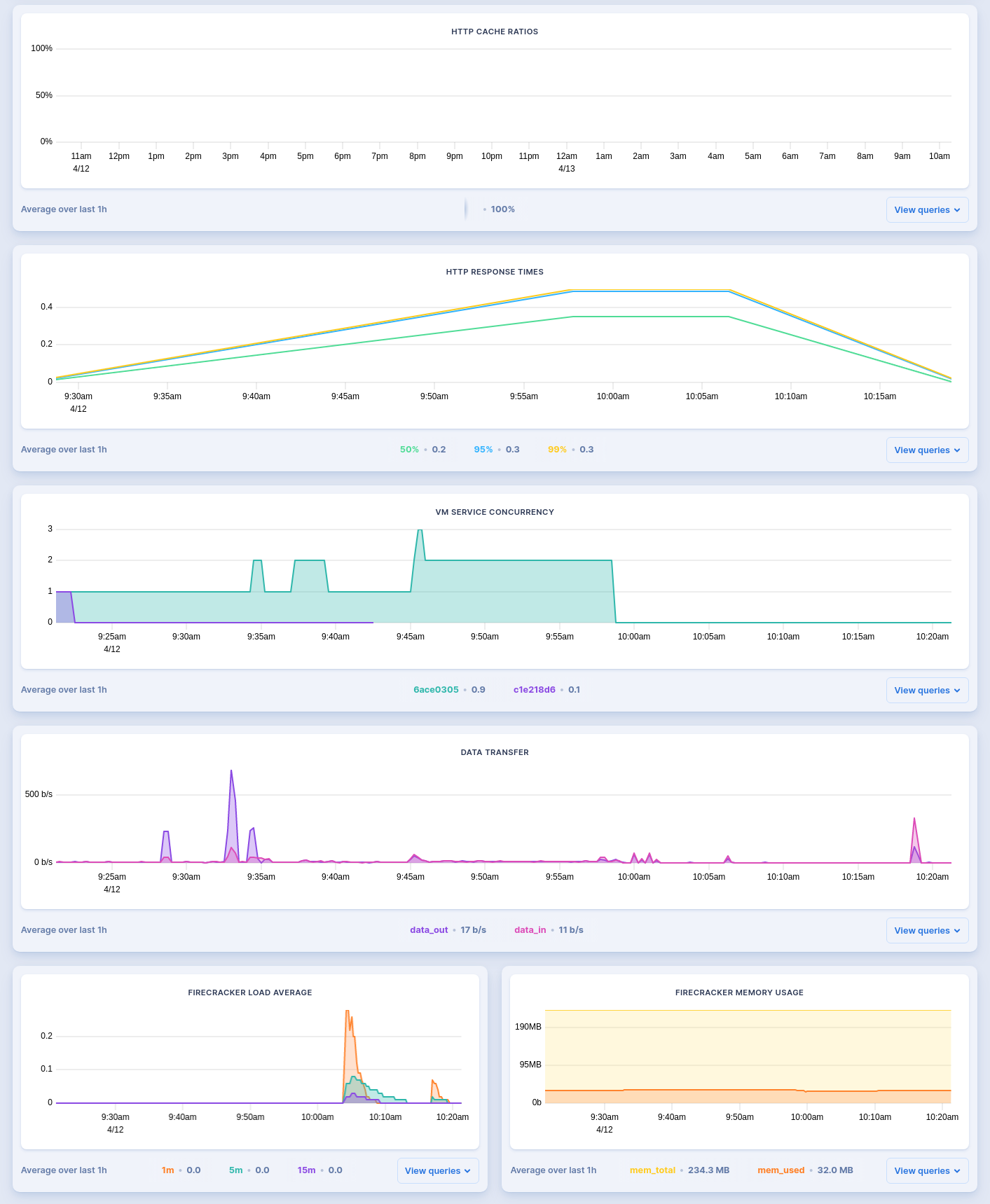

Which also provides useful (Prometheus-derived) metrics:

Unfortunately, the instance and scaling details aren’t part of the fly.toml config.

In order to confirm these values:

APP="..."

flyctl scale count 1 \

--app=${APP}

Count changed to 1

flyctl scale show \

--app=${APP}

VM Resources for ...

VM Size: shared-cpu-1x

VM Memory: 256 MB

Count: 1

NOTE IIUC these settings will keep my app to a single (1) shared-cpu-1x instance. Per pricing, this instance (unsure about bandwidth costs) should be free.

To grab the app’s IPs and fly.dev DNS name:

# Get IP v4

IP4=$(flyctl ips list \

--app=${APP} \

--json \

| jq -r '.[]|select(.Type=="v4").Address') && \

echo ${IP4}

213.188:...

# Get IP v6

IP6=$(flyctl ips list \

--app=${APP} \

--json \

| jq -r '.[]|select(.Type=="v6").Address') && \

echo ${IP6}

2a09:8280:1:...

# Get app's DNS

ENDPOINT=$(flyctl apps list \

--json \

| jq -r ".[]|select(.ID==\"${APP}\").Hostname") \

&& echo ${ENDPOINT}

${APP}.fly.dev

Then, in my case, I can curl the app’s Prometheus metrics:

# HTTP

curl http://${ENDPOINT}:80/metrics

go_gc_duration_seconds{quantile="0"} 0

go_gc_duration_seconds{quantile="0.25"} 0

go_gc_duration_seconds{quantile="0.5"} 0

go_gc_duration_seconds{quantile="0.75"} 0

go_gc_duration_seconds{quantile="1"} 0

go_gc_duration_seconds_sum 0

go_gc_duration_seconds_count 0

# HTTP/S (TLS)

curl \

--request GET \

https://${ENDPOINT}:443/metrics

go_gc_duration_seconds{quantile="0"} 0

go_gc_duration_seconds{quantile="0.25"} 0

go_gc_duration_seconds{quantile="0.5"} 0

go_gc_duration_seconds{quantile="0.75"} 0

go_gc_duration_seconds{quantile="1"} 0

go_gc_duration_seconds_sum 0

go_gc_duration_seconds_count 0

All good!

And then, for ease, I’m using the excellent gRPCurl:

# List service's methods

docker run \

--interactive --tty --rm \

--volume=${PWD}/health.proto:/protos/health.proto \

fullstorydev/grpcurl \

--proto protos/health.proto \

${ENDPOINT}:10001 \

list grpc.health.v1.Health

grpc.health.v1.Health.Check

grpc.health.v1.Health.Watch

# Invoke Watch

docker run \

--interactive --tty --rm \

--volume=${PWD}/health.proto:/protos/health.proto \

fullstorydev/grpcurl \

--proto protos/health.proto ${ENDPOINT}:10001 \

grpc.health.v1.Health/Watch

{

"status": "SERVING"

}

{

"status": "SERVING"

}

{

"status": "NOT_SERVING"

}
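The gRPCurl invocations repeat the same docker run preamble, so wrapping it in a function saves typing. A minimal sketch, assuming the same image, mount and proto path as the commands in this post; health_grpcurl is a hypothetical name.

```shell
# Hypothetical wrapper around the containerized grpcurl used in this post.
# It mounts health.proto from the current directory and passes any remaining
# arguments (address, then "list <service>" or a method name) to grpcurl.
health_grpcurl() {
  docker run \
    --interactive --tty --rm \
    --volume="${PWD}/health.proto:/protos/health.proto" \
    fullstorydev/grpcurl \
    --proto protos/health.proto \
    "$@"
}
```

With that defined, the Watch call becomes health_grpcurl ${ENDPOINT}:10001 grpc.health.v1.Health/Watch.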

Lastly, we can configure our app to use a custom domain.

flyctl certs add ${HOST}

# Check on it

flyctl certs check ${HOST}

You’ll need to add DNS entries to a domain you own: either a CNAME of the form ${APP} -> ${APP}.fly.dev. or, more efficiently, A and AAAA records pointing at the app’s IPv4 and IPv6 addresses.
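To make the two options concrete, here is a small sketch that prints the records in zone-file style so they can be copied into a DNS provider's UI. fly_dns_records is a hypothetical helper, and the exact record format your provider expects may differ. Note that a CNAME can't be used at a zone apex (e.g. example.com itself), so the A and AAAA form is the only option there.

```shell
# Hypothetical helper: print zone-file style records for a custom hostname.
# Usage: fly_dns_records cname HOST APP
#        fly_dns_records addr  HOST IP4 IP6
fly_dns_records() {
  mode="$1"; host="$2"
  case "$mode" in
    cname)
      # Alias the custom hostname to the app's fly.dev name.
      printf '%s.\tCNAME\t%s.fly.dev.\n' "$host" "$3"
      ;;
    addr)
      # Point the custom hostname directly at the app's addresses.
      printf '%s.\tA\t%s\n' "$host" "$3"
      printf '%s.\tAAAA\t%s\n' "$host" "$4"
      ;;
    *)
      echo "usage: fly_dns_records cname HOST APP | addr HOST IP4 IP6" >&2
      return 2
      ;;
  esac
}
```

Using the variables captured earlier, that's either fly_dns_records cname "${HOST}" "${APP}" or fly_dns_records addr "${HOST}" "${IP4}" "${IP6}".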

You can confirm your DNS changes with dig or nslookup. In my case, using Google Domains, I can avoid waiting on DNS propagation, with:

curl \

--dns-servers 8.8.8.8,8.8.4.4 \

--request GET \

https://${HOST}:443/metrics

go_gc_duration_seconds{quantile="0"} 0

go_gc_duration_seconds{quantile="0.25"} 0

go_gc_duration_seconds{quantile="0.5"} 0

go_gc_duration_seconds{quantile="0.75"} 0

go_gc_duration_seconds{quantile="1"} 0

go_gc_duration_seconds_sum 0

go_gc_duration_seconds_count 0
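If you'd rather wait for your default resolver to catch up, a small polling loop is one option. A minimal sketch; wait_for is a hypothetical helper and the attempt count and delay in the usage note are arbitrary.

```shell
# Hypothetical helper: run a command until it succeeds, up to ATTEMPTS times,
# sleeping DELAY seconds between attempts. Returns 1 if it never succeeds.
# Usage: wait_for ATTEMPTS DELAY COMMAND [ARGS...]
wait_for() {
  attempts="$1"; delay="$2"; shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Don't sleep after the final attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, wait_for 30 10 curl --silent --fail --output /dev/null "https://${HOST}:443/metrics" retries every 10 seconds for up to 30 attempts.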

And eventually:

docker run \

--interactive --tty --rm \

--volume=${PWD}/health.proto:/protos/health.proto \

fullstorydev/grpcurl \

--proto protos/health.proto \

${HOST}:10001 grpc.health.v1.Health/Watch

When you’re done, you can either delete the app (resetting the entire process):

flyctl apps destroy --app=${APP}

NOTE I want there to be an undeploy but there isn’t.

Or suspend it (though I’m unsure whether you’ll still incur costs):

flyctl suspend --app=${APP}

That’s all!