akri

I was very interested to read about the latest (Rust-based) Kubernetes project from Microsoft’s DeisLabs: akri. If I understand it correctly, it provides a mechanism to make any (IoT) device accessible to containers running within a cluster. I need to spend more time playing around with it before I fully understand it. I had some problems getting the End-to-End demo running on Google Compute Engine instances (and then on DigitalOcean droplets). So, here are two ways to get you going.

Google Cloud Platform (Compute Engine)

Install

Some environment variables:

PROJECT=[[YOUR-PROJECT]]

BILLING=[[YOUR-BILLING]]

INSTANCE="akri" # Or your preferred instance name

TYPE="e2-standard-2" # Or your preferred machine type

ZONE="us-west1-c" # Or your preferred zone
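The commands below misbehave in confusing ways if a placeholder such as [[YOUR-PROJECT]] is left unchanged. A minimal Bash sketch of a guard you could run first; require_vars is a hypothetical helper, not part of gcloud or akri.

```shell
#!/usr/bin/env bash
# require_vars NAME...
# Hypothetical helper: fails if any named variable is empty or still holds
# a [[...]] placeholder from this post, printing each offender to stderr.
require_vars() {
  local name value missing=0
  for name in "$@"; do
    value="${!name:-}"   # indirect expansion: the value of the variable called $name
    if [[ -z "$value" || "$value" == \[\[*\]\] ]]; then
      echo "Please set ${name} (current value: '${value}')" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run require_vars PROJECT BILLING INSTANCE TYPE ZONE before the gcloud commands; it lists the variables that still need a real value and returns non-zero.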

NOTE You may be able to find your billing account using

gcloud alpha billing accounts list --format="value(name)"

If necessary, create the project, link the billing account and enable the Compute Engine API:

gcloud projects create ${PROJECT}

gcloud beta billing projects link ${PROJECT} \
--billing-account=${BILLING}

gcloud services enable compute.googleapis.com \
--project=${PROJECT}

Here’s a gist containing an all-in-one startup script that implements the instructions given on the akri site.

Save the content of this file to e.g. startup.sh in your working directory.

Then, we can create a preemptible instance using the startup script:

gcloud compute instances create ${INSTANCE} \
--machine-type=${TYPE} \
--preemptible \
--tags=microk8s,akri \
--image-family=ubuntu-minimal-2004-lts \
--image-project=ubuntu-os-cloud \
--zone=${ZONE} \
--project=${PROJECT} \
--metadata-from-file=startup-script=./startup.sh

NOTE The startup script (./startup.sh) must match the script you created previously.

NOTE You will be billed for this machine type.

The script takes a couple of minutes to complete.

Check (optional)

We can SSH into the instance and check the progress of the startup script.

gcloud compute ssh ${INSTANCE} --zone=${ZONE} --project=${PROJECT}

Then, either:

sudo journalctl --unit=google-startup-scripts.service --follow

Or:

tail -f /var/log/syslog

The script is complete when the following log line appears:

INFO startup-script: service/akri-video-streaming-app created
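Rather than watching the log by eye, you could poll for that line. A minimal Bash sketch to run on the instance after SSHing in; wait_for_log_line is a hypothetical helper, and the default 600-second timeout and 5-second interval are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# wait_for_log_line FILE TEXT [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
# Hypothetical helper: polls FILE until it contains TEXT (a fixed string, not a regex).
# Returns 0 once the text appears, 1 if TIMEOUT_SECONDS elapse first.
wait_for_log_line() {
  local file="$1" text="$2" timeout="${3:-600}" interval="${4:-5}"
  local start=$SECONDS   # Bash's built-in seconds counter
  until grep -qF -- "$text" "$file" 2>/dev/null; do
    if (( SECONDS - start >= timeout )); then
      echo "Timed out after ${timeout}s waiting for '${text}' in ${file}" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

For example: wait_for_log_line /var/log/syslog "service/akri-video-streaming-app created" && echo "akri demo is ready". Like the tail -f command above, this assumes /var/log/syslog is readable by your user.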

Access

We need to determine the service’s NodePort. You can either SSH into the instance and run the command there or, from your host machine, combine the two steps:

COMMAND="\
sudo microk8s.kubectl get service/akri-video-streaming-app \
--output=jsonpath='{.spec.ports[?(@.name==\"http\")].nodePort}'"

NODEPORT=$(\
gcloud compute ssh ${INSTANCE} \
--zone=${ZONE} \
--project=${PROJECT} \
--command="${COMMAND}") && echo ${NODEPORT}

The kubectl command gets the akri-video-streaming-app service and uses a JSONPath expression to extract the NodePort (${NODEPORT}) assigned to its http port.

The gcloud compute ssh command runs the kubectl command on the instance.
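If the service doesn’t exist yet, or has no port named http, NODEPORT ends up empty and the port-forward that follows fails confusingly. A small Bash sanity check, assuming the cluster uses Kubernetes’ default NodePort range of 30000-32767 (configurable via the API server’s --service-node-port-range flag); is_valid_nodeport is a hypothetical helper.

```shell
#!/usr/bin/env bash
# is_valid_nodeport PORT
# Hypothetical helper: succeeds only if PORT is a decimal integer within
# Kubernetes' default NodePort range (30000-32767).
is_valid_nodeport() {
  local port="$1"
  [[ "$port" =~ ^[0-9]+$ ]] || return 1
  # 10# forces base-10 so a leading zero isn't parsed as octal.
  (( 10#$port >= 30000 && 10#$port <= 32767 ))
}
```

For example: is_valid_nodeport "${NODEPORT}" || echo "Unexpected NodePort: '${NODEPORT}'"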

Then we can use SSH port-forwarding to forward one of our host’s (!) local ports (${HOSTPORT}) to the Kubernetes service’s NodePort (${NODEPORT}):

HOSTPORT=8888

gcloud compute ssh ${INSTANCE} \
--zone=${ZONE} \
--project=${PROJECT} \
--ssh-flag="-L ${HOSTPORT}:localhost:${NODEPORT}"

NOTE The HOSTPORT can be the same as the NODEPORT if that port is available on your host.

NOTE The port-forwarding only lasts while the SSH session is running.
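The first note above suggests reusing NODEPORT as HOSTPORT when it is free. One way to check before starting the port-forward, as a Bash-only sketch: it relies on Bash’s /dev/tcp pseudo-device and only detects listeners on 127.0.0.1. port_is_free and choose_hostport are hypothetical helpers.

```shell
#!/usr/bin/env bash
# port_is_free PORT
# Hypothetical helper: succeeds if nothing accepts TCP connections on 127.0.0.1:PORT.
# The subshell opens a test connection via Bash's /dev/tcp and closes it on exit.
port_is_free() {
  local port="$1"
  if (exec 3<>"/dev/tcp/127.0.0.1/${port}") 2>/dev/null; then
    return 1   # the connection succeeded, so something is already listening
  fi
  return 0
}

# choose_hostport NODEPORT [FALLBACK]
# Prints NODEPORT if it is free locally, otherwise FALLBACK (default 8888).
choose_hostport() {
  local nodeport="$1" fallback="${2:-8888}"
  if port_is_free "$nodeport"; then
    echo "$nodeport"
  else
    echo "$fallback"
  fi
}
```

For example, instead of hard-coding HOSTPORT=8888: HOSTPORT=$(choose_hostport "${NODEPORT}"). Note that the fallback port is not itself checked.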

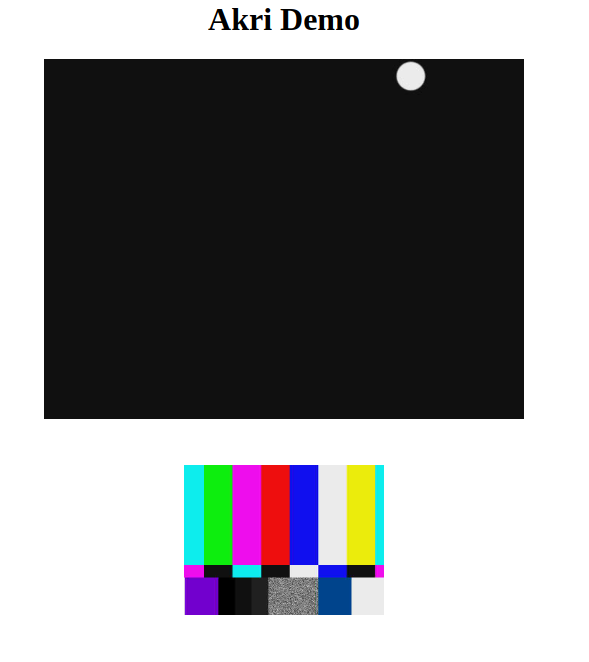

Then, just browse the HTTP endpoint locally: http://localhost:${HOSTPORT}/

NOTE The trailing / is important.

NOTE Yes, I know there should be 3 ‘screens’ shown in this image; part of the process may have failed!?

Tidy-Up

The simplest way to tidy-up is to delete the instance:

gcloud compute instances delete ${INSTANCE} \
--zone=${ZONE} \
--project=${PROJECT}

DigitalOcean

Install

Some environment variables:

INSTANCE="akri" # Or your preferred droplet name

REGION="sfo2" # Or your preferred region

SSHKEYID=[[YOUR-SSH-KEY-ID]] # doctl compute ssh-key list

Here’s a gist containing an all-in-one startup script that implements the instructions given on the akri site.

Save the content of this file to e.g. startup.sh in your working directory.

Then, we can create a droplet using the startup script:

doctl compute droplet create ${INSTANCE} \
--region ${REGION} \
--image ubuntu-20-04-x64 \
--size g-2vcpu-8gb \
--ssh-keys ${SSHKEYID} \
--tag-names microk8s,akri \
--user-data-file=./startup.sh

NOTE The startup script (./startup.sh) must match the script you created previously.

NOTE You will be billed for this droplet.

The script takes a couple of minutes to complete.

Check

We can SSH into the droplet and check the progress of the startup script.

If you have jq installed:

IP=$(\
doctl compute droplet list \
--output=json | jq -r ".[]|select(.name==\"${INSTANCE}\")|.networks.v4[]|select(.type==\"public\")|.ip_address") && \
echo ${IP}

If not:

IP=$(doctl compute droplet list | grep ${INSTANCE} | awk '{print $3}') && \
echo ${IP}

Then you can SSH into the droplet:

SSHKEY=[[/path/to/your/key]]

ssh -i ${SSHKEY} root@${IP}

Then, either:

sudo journalctl --unit='cloud-*' --follow

NOTE I’m unsure how to get only the logs from cloud-init running the startup script!?

Or:

tail -f /var/log/syslog

The script is complete when the following log line appears:

service/akri-video-streaming-app created
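On the note above about isolating the cloud-init logs: on Ubuntu, cloud-init’s default configuration writes the output of its modules, including user-data scripts, to /var/log/cloud-init-output.log, and cloud-init status --wait blocks until cloud-init has finished. A Bash sketch combining the two, run on the droplet; akri_demo_ready is a hypothetical helper, and the log path is the Ubuntu default rather than anything this post’s script configures.

```shell
#!/usr/bin/env bash
# akri_demo_ready [LOGFILE]
# Hypothetical helper: succeeds if the startup script's final line appears in
# LOGFILE (default: cloud-init's output log on Ubuntu).
akri_demo_ready() {
  local log="${1:-/var/log/cloud-init-output.log}"
  grep -qF 'service/akri-video-streaming-app created' "$log"
}
```

For example, on the droplet: cloud-init status --wait && akri_demo_ready && echo "akri demo is ready". To follow just the script’s output, tail -f /var/log/cloud-init-output.log should also work.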

Access

We need to determine the service’s NodePort. You can either SSH into the droplet and run the command there or, from your host machine, combine the two steps:

COMMAND="\
sudo microk8s.kubectl get service/akri-video-streaming-app \
--output=jsonpath='{.spec.ports[?(@.name==\"http\")].nodePort}'"

NODEPORT=$(\
ssh -i ${SSHKEY} root@${IP} "${COMMAND}") && \
echo ${NODEPORT}

The kubectl command gets the akri-video-streaming-app service and uses a JSONPath expression to extract the NodePort (${NODEPORT}) assigned to its http port.

The ssh command runs the kubectl command on the droplet.

Then we can use SSH port-forwarding to forward one of our host’s (!) local ports (${HOSTPORT}) to the Kubernetes service’s NodePort (${NODEPORT}):

HOSTPORT=8888

ssh -i ${SSHKEY} root@${IP} -L ${HOSTPORT}:localhost:${NODEPORT}

NOTE The HOSTPORT can be the same as the NODEPORT if that port is available on your host.

NOTE The port-forwarding only lasts while the SSH session is running.

Then, just browse the HTTP endpoint locally: http://localhost:${HOSTPORT}/

NOTE The trailing / is important.

Tidy-up

The simplest way to tidy-up is to delete the droplet:

doctl compute droplet delete ${INSTANCE}

That’s all!