Adventures around BPF

I think (!?) this interesting learning experience started with Envoy Go Extensions.

IIUC, Cilium contributed this mechanism (Envoy Go Extensions), which makes it possible to extend Envoy using Golang. The documentation references BPF. Cilium and BPF were both unfamiliar technologies to me, and so began my learning. This part of the journey concludes with Weave Scope.

I learned that Cilium is a CNI implementation that may be used with Kubernetes. GKE defaults to (but is not limited to) Google’s own CNI implementation. From a subsequent brief exploration of CNI, I believe DigitalOcean’s managed Kubernetes service uses Cilium and Linode Kubernetes Engine uses Calico.

One of Cilium’s differentiators is that it uses BPF. “What is Cilium?” provides an interesting explanation of the limitations of iptables programming, with references to further explanations of BPF.

So, munged from the above: BPF is an in-(Linux)-kernel virtual machine that runs bytecode that is (guaranteed to be) safe, because the kernel’s verifier checks each program before loading it. BPF, as the name (Berkeley Packet Filter) suggests, was born to facilitate packet filtering, but its utility is broader, e.g. seccomp uses it too. Google, Facebook (!?), Cloudflare and others use it. Developers write solutions in higher-level languages (often C) that are compiled (using LLVM or GCC) into bytecode that runs in the VM and shares data with userspace code through BPF maps. An excellent source of material for BPF is The IO Visor Project.

I tried to work through the BPF Compiler Collection (BCC), hoping to use Golang (gobpf) to write BPF programs but, unfortunately, the Ubuntu (19.10 and 18.04) packages aren’t working correctly and I was reluctant to build everything from source just to use it. If you know of a working OS/package combination, please let me know so that I may explore gobpf.

```
go test -tags integration -v ./...
# github.com/iovisor/gobpf/bcc
bcc/module.go:32:10: fatal error: bcc/bcc_common.h: No such file or directory
   32 | #include <bcc/bcc_common.h>
      |          ^~~~~~~~~~~~~~~~~~
compilation terminated.
```

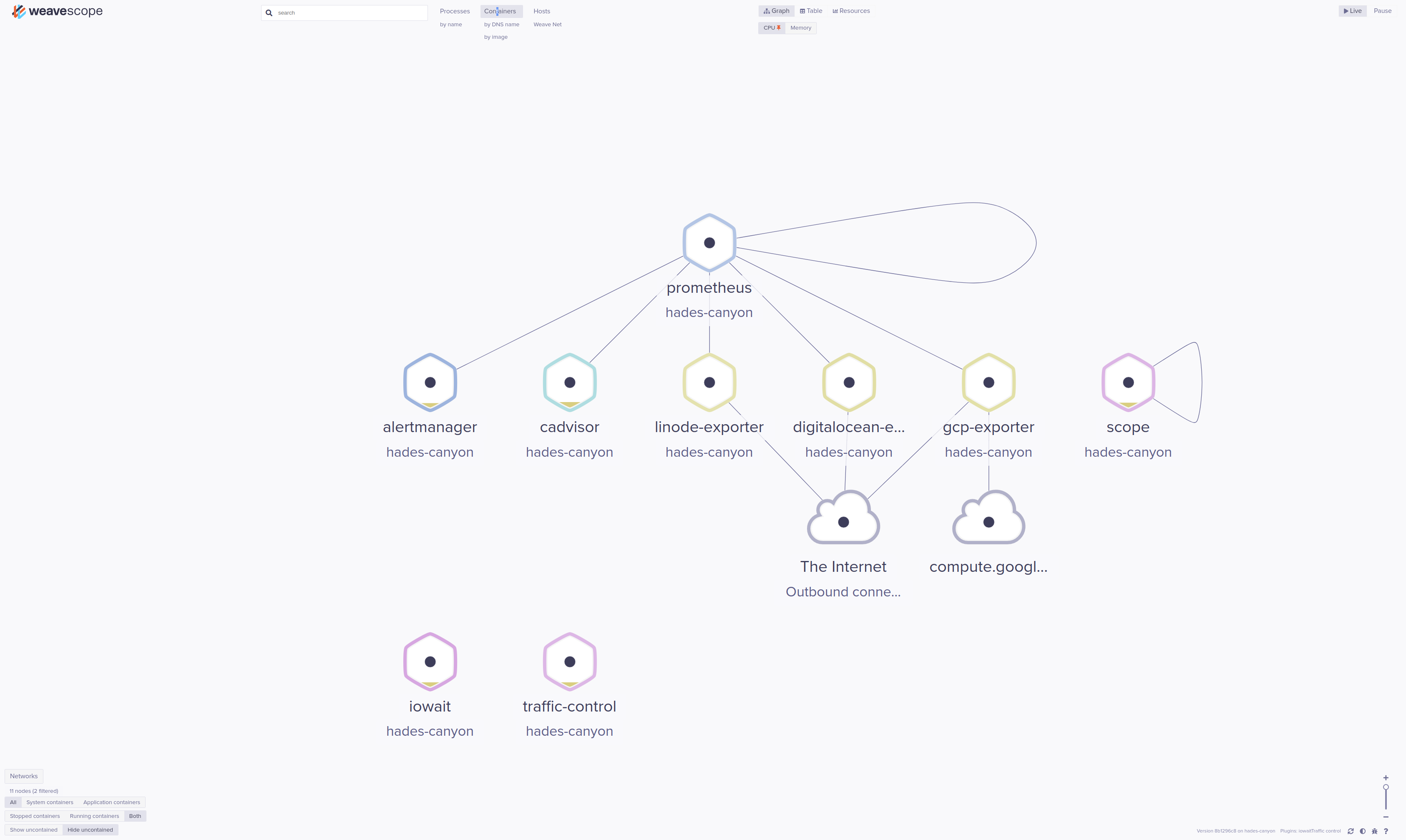

I then stumbled upon Weave Scope, perhaps through the post “Improving performance and reliability in Weave Scope with eBPF”. I hadn’t used Weave Scope before and find it rather excellent. I’ve used cAdvisor for somewhat similar goals but will now use both. The rest of this post is about playing around with Weave Scope.

Running it on my local machine:

Docker Compose is easiest. The following runs Weave Scope and two plugins, iowait and traffic-control:

```yaml
version: "3"
services:
  scope:
    image: weaveworks/scope:1.12.0
    container_name: scope
    network_mode: host
    pid: host
    privileged: true
    labels:
      - "works.weave.role=system"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock:rw
      - /var/run/scope/plugins:/var/run/scope/plugins
    command:
      - "--probe.docker=true"
      - "--weave=false"
  iowait:
    image: weaveworksplugins/scope-iowait:latest
    container_name: iowait
    network_mode: host
    volumes:
      - /var/run/scope/plugins/iowait:/var/run/scope/plugins/iowait
    command:
      - "--name=weaveworksplugins-scope-iowait"
  traffic-control:
    image: weaveworksplugins/scope-traffic-control:latest
    container_name: traffic-control
    network_mode: host
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/run/scope/plugins/traffic-control:/var/run/scope/plugins/traffic-control
    command:
      - "--name=weaveworksplugins-scope-traffic-control"
```

But, if you’d prefer to run the containers separately, then:

```bash
docker run \
  --rm --interactive --tty \
  --net=host \
  --pid=host \
  --privileged \
  --label="works.weave.role=system" \
  --volume=/var/run/docker.sock:/var/run/docker.sock:rw \
  --volume=/var/run/scope/plugins:/var/run/scope/plugins \
  --name=scope \
  weaveworks/scope:1.12.0 \
  --probe.docker=true \
  --weave=false
```

results in:

```
INFO[0000] publishing to: 127.0.0.1:4040
<probe> INFO: 2019/12/30 22:07:37.671756 Basic authentication disabled
<app> INFO: 2019/12/30 22:07:37.745592 app starting, version 8b1296c8, ID 7d5aaf6dd9ffe7a3
<app> INFO: 2019/12/30 22:07:37.745645 command line args: --mode=app --probe.docker=true --weave=false
<app> INFO: 2019/12/30 22:07:37.749749 Basic authentication disabled
<app> INFO: 2019/12/30 22:07:37.751858 listening on :4040
<probe> INFO: 2019/12/30 22:07:37.764026 command line args: --mode=probe --probe.docker=true --weave=false
<probe> INFO: 2019/12/30 22:07:37.764122 probe starting, version 8b1296c8, ID 2be64150e1ad3d8f
<probe> INFO: 2019/12/30 22:07:37.775699 Control connection to 127.0.0.1 starting
<probe> WARN: 2019/12/30 22:07:37.796262 Error setting up the eBPF tracker, falling back to proc scanning: error while loading map "maps/tcp_event_ipv4": operation not permitted
```

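NB Weave Scope can’t set up its eBPF tracker on my machine (the log reports “operation not permitted” while loading a map), so it falls back to scanning /proc. I assume this is because I don’t have BCC installed (correctly) on my local machine.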

NB Replace --interactive --tty with --detach if you wish to run all the containers from the same shell.

and:

```bash
docker run \
  --rm --interactive --tty \
  --net=host \
  --volume=/var/run/scope/plugins/iowait:/var/run/scope/plugins/iowait \
  weaveworksplugins/scope-iowait:latest \
  --name=weaveworksplugins-scope-iowait
```

results in:

```
2019/12/30 22:16:18 Starting on hades-canyon...
2019/12/30 22:16:18 Listening on: unix:///var/run/scope/plugins/iowait/iowait.sock
2019/12/30 22:16:19 /report?api_version=1&probe_id=2619977d3df6c5d8
2019/12/30 22:16:20 /report?api_version=1&probe_id=2619977d3df6c5d8
2019/12/30 22:16:21 /report?api_version=1&probe_id=2619977d3df6c5d8
2019/12/30 22:16:22 /report?api_version=1&probe_id=2619977d3df6c5d8
```

and:

```bash
docker run \
  --rm --interactive --tty \
  --net=host \
  --volume=/var/run/docker.sock:/var/run/docker.sock \
  --volume=/var/run/scope/plugins/traffic-control:/var/run/scope/plugins/traffic-control \
  weaveworksplugins/scope-traffic-control:latest \
  --name=weaveworksplugins-scope-traffic-control
```

results in:

```
INFO[0000] Listening on: unix:///var/run/scope/plugins/traffic-control/traffic-control.sock
INFO[0000] Listening...
```

You’ll need something to monitor. I’ve been playing around with Prometheus exporters, so I have several running.

The Weave Scope UI should be available at http://localhost:4040

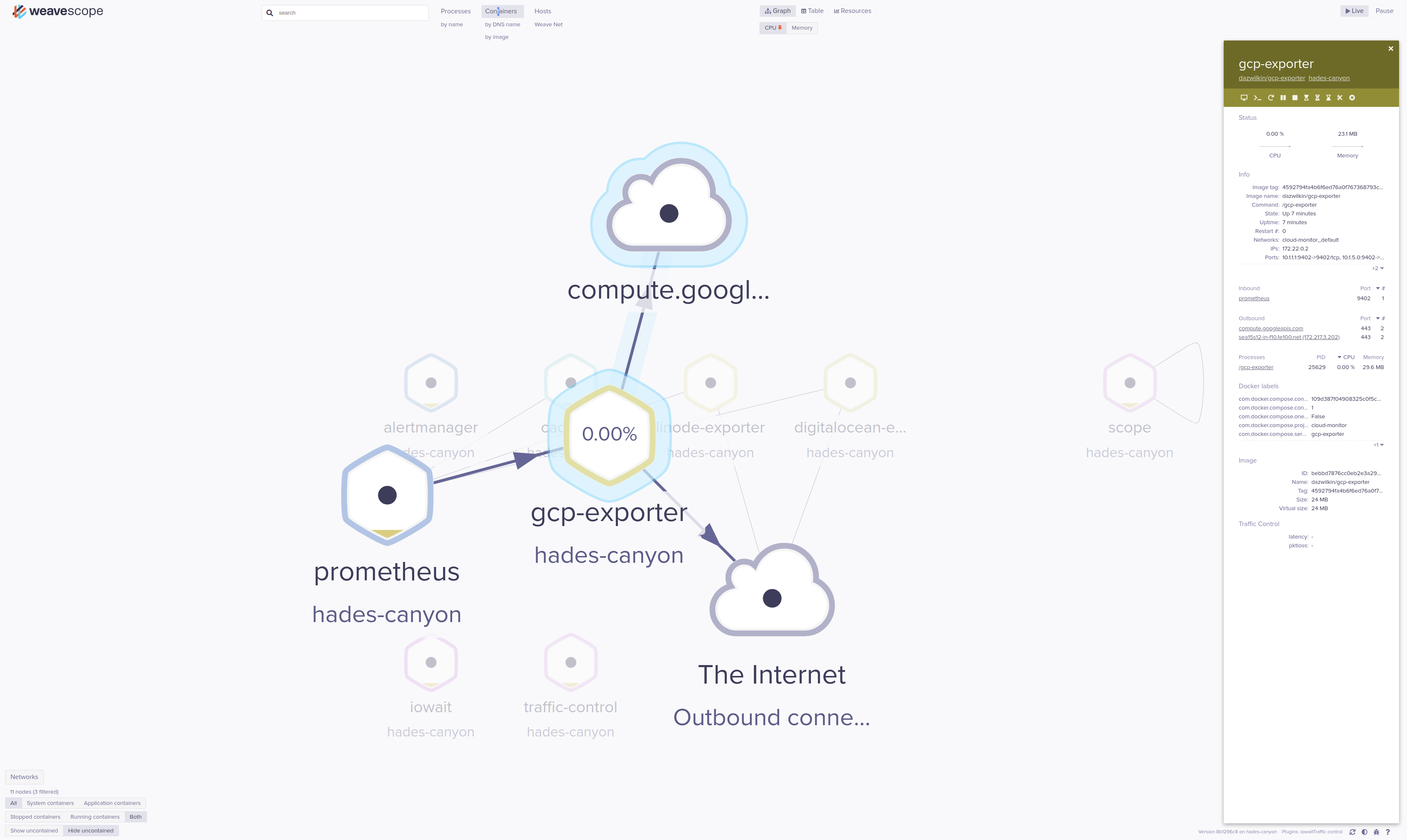

And, if I drill down into e.g. gcp-exporter:

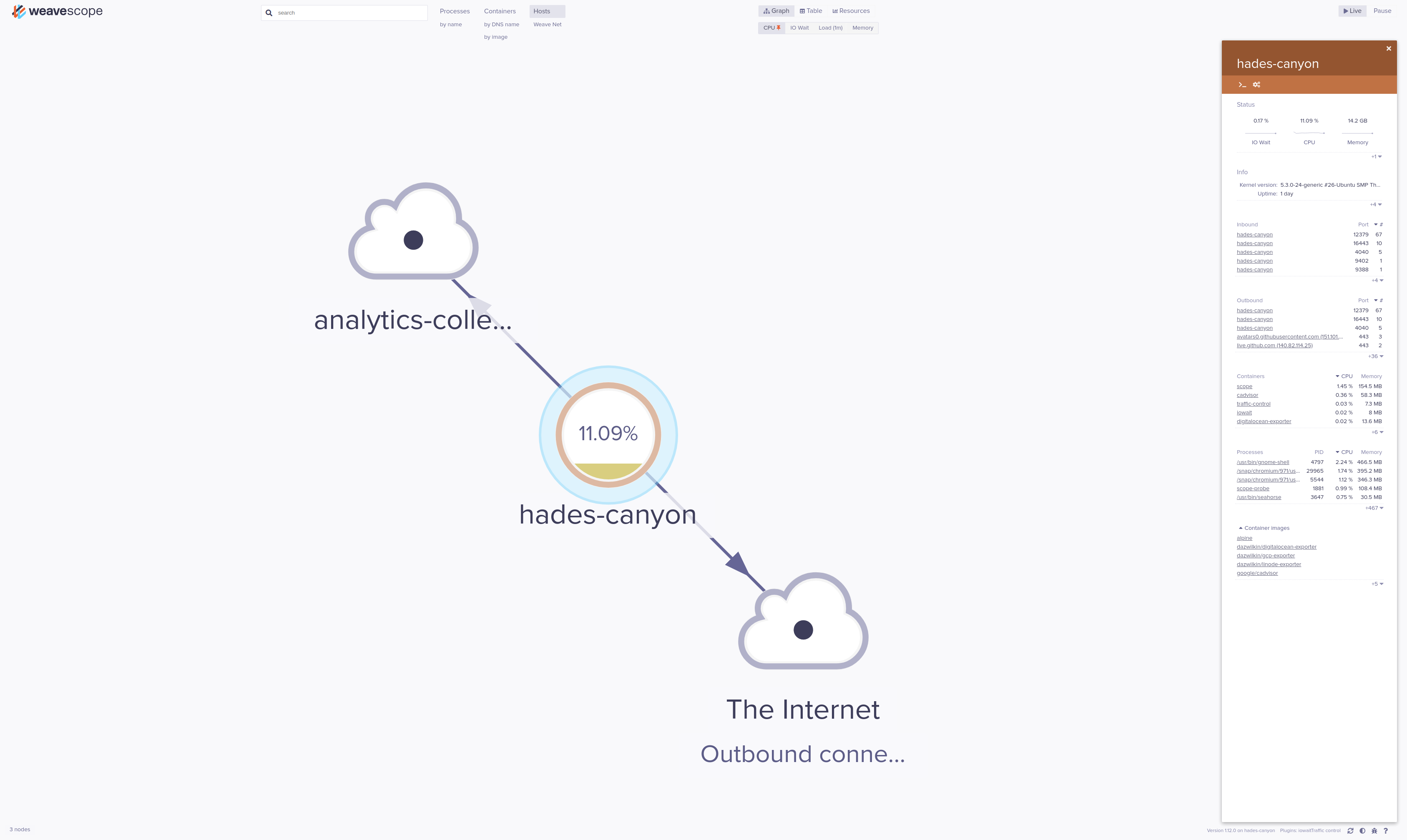

Switching from “Containers” to “Hosts”, I’m also able to view the results of iowait:

Weave Scope scrapes each plugin through an HTTP API that the plugin exposes on its UNIX socket. In the case of scope-iowait, this can be checked with:

```bash
sudo curl \
  --silent \
  --unix-socket /var/run/scope/plugins/iowait/iowait.sock \
  --request GET \
  http://localhost/report \
| jq .
```

and returns:

```json
{
  "Host": {
    "nodes": {
      "hades-canyon;<host>": {
        "metrics": {
          "idle": {
            "samples": [
              {
                "date": "2019-12-30T22:31:01.626848079Z",
                "value": 92.68
              }
            ],
            "min": 0,
            "max": 100
          }
        },
        "latestControls": {
          "switchToIOWait": {
            "timestamp": "2019-12-30T22:31:01.626878701Z",
            "value": {
              "dead": false
            }
          },
          "switchToIdle": {
            "timestamp": "2019-12-30T22:31:01.626878701Z",
            "value": {
              "dead": true
            }
          }
        }
      }
    },
    "metric_templates": {
      "idle": {
        "id": "idle",
        "label": "Idle",
        "format": "percent",
        "priority": 0.1
      }
    },
    "controls": {
      "switchToIOWait": {
        "id": "switchToIOWait",
        "human": "Switch to IO wait",
        "icon": "fa-clock-o",
        "rank": 1
      },
      "switchToIdle": {
        "id": "switchToIdle",
        "human": "Switch to idle",
        "icon": "fa-gears",
        "rank": 1
      }
    }
  },
  "Plugins": [
    {
      "id": "iowait",
      "label": "iowait",
      "description": "Adds a graph of CPU IO Wait to hosts",
      "interfaces": [
        "reporter",
        "controller"
      ],
      "api_version": "1"
    }
  ]
}
```

NB The /report response is keyed by Host.nodes["hades-canyon;<host>"], confirming that this plugin reports on “Hosts” (not “Containers”).

Host.nodes["hades-canyon;<host>"].metrics.idle provides the metric data.

This data is rendered in the UI thanks to the (matching) definition in Host.metric_templates.idle.

Similarly, for traffic-control you may:

```bash
sudo curl \
  --silent \
  --unix-socket /var/run/scope/plugins/traffic-control/traffic-control.sock \
  --request GET \
  http://localhost/report \
| jq .
```

The output is longer, so I’ve edited it for clarity:

```json
{
  "Container": {
    "nodes": {
      "26b59de3ea355da095623112b25d57d9dca9e07d365a1d2cdd66f03e79b95169;<container>": {
        "latestControls": {
          "traffic-control-table-clear": {
            "timestamp": "2019-12-31T20:41:51.005774828Z",
            "value": {
              "dead": false
            }
          },
          ...
        },
        "latest": {
          "traffic-control-table-latency": {
            "timestamp": "2019-12-31T20:41:51.005774828Z",
            "value": "-"
          },
          "traffic-control-table-pktloss": {
            "timestamp": "2019-12-31T20:41:51.005774828Z",
            "value": "-"
          }
        }
      },
      ...
    },
    "controls": {
      "traffic-control-table-clear": {
        "id": "traffic-control-table-clear",
        "human": "Clear traffic control settings",
        "icon": "fa-times-circle",
        "rank": 24
      },
      ...
    },
    "metadata_templates": {
      "traffic-control-latency": {
        "id": "traffic-control-latency",
        "label": "Latency",
        "priority": 13.5,
        "from": "latest"
      },
      "traffic-control-pktloss": {
        "id": "traffic-control-pktloss",
        "label": "Packet Loss",
        "priority": 13.6,
        "from": "latest"
      }
    },
    "table_templates": {
      "traffic-control-table": {
        "id": "traffic-control-table",
        "label": "Traffic Control",
        "prefix": "traffic-control-table-"
      }
    }
  },
  "Plugins": [
    {
      "id": "traffic-control",
      "label": "Traffic control",
      "description": "Adds traffic controls to the running Docker containers",
      "interfaces": [
        "reporter",
        "controller"
      ],
      "api_version": "1"
    }
  ]
}
```

NB The /report response is keyed by Container.nodes, confirming that this plugin reports on “Containers” (not “Hosts”).

Container.nodes.26b5... contains latestControls and latest.

We can see that e.g. traffic-control-table-latency appears, with a value, in the latest map of Container.nodes.26b5..., and it appears to be constituted by table_templates.traffic-control-table (whose prefix is traffic-control-table-) and e.g. metadata_templates.traffic-control-latency. I need to read more about how this works.

After cloning traffic-control from GitHub, I found that it appears to be hard-coded to eth0. On my Ubuntu machine, the default Ethernet interface is called enp5s0, and so the plugin appears not to work. I tried replacing eth0 with enp5s0 in the plugin and rebuilding, but without success.

While working on the clone, I replaced the Glide configuration with Go Modules. More is required than is shown below, but I had to:

- Use the old version of github.com/containernetworking/cni (v0.3.0)
- Rename the logrus imports from Sirupsen to sirupsen

```
module github.com/weaveworks-plugins/scope-traffic-control

go 1.13

require (
	github.com/containernetworking/cni v0.3.0
	github.com/sirupsen/logrus v1.4.2
)
```

That’s all for now!